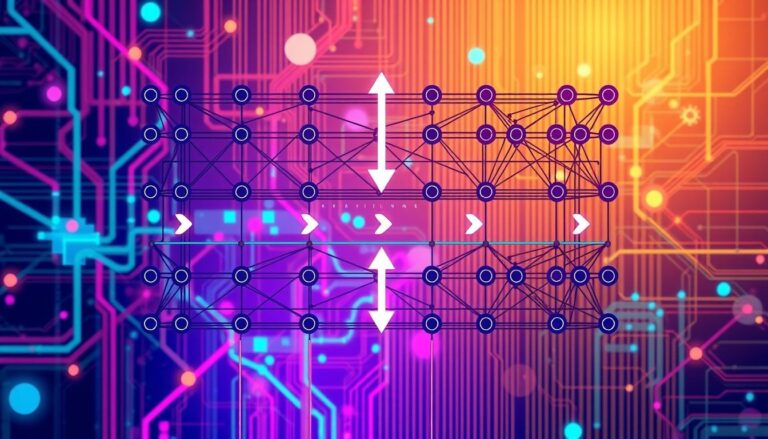

How Do Recurrent Neural Networks (RNNs) Work in Sequence Prediction?

In machine learning and artificial intelligence, Recurrent Neural Networks (RNNs) are a key architecture for sequential data such as time series, text, and speech. Unlike feedforward neural networks, which map each input to an output independently, RNNs process data one step at a time and pass information from earlier steps forward to later ones. This lets them take the order of the data into account, which makes them well suited to predicting sequences. RNNs use their internal memory,…
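Here is a minimal sketch of the "one step at a time" idea as a vanilla (Elman-style) RNN forward pass in plain Python. It assumes a single tanh hidden layer, h_t = tanh(W_xh·x_t + W_hh·h_{t-1} + b_h), and a linear readout y_t = W_hy·h_t + b_y that makes a prediction after every step. The function names `rnn_step` and `run_rnn` and all weight values are hypothetical and exist only for illustration. A real model would learn its weights, typically with backpropagation through time.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def rnn_step(x: Vector, h_prev: Vector,
             W_xh: Matrix, W_hh: Matrix, b_h: Vector) -> Vector:
    """One recurrent step: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)."""
    from_input = matvec(W_xh, x)        # contribution of the current input
    from_memory = matvec(W_hh, h_prev)  # contribution of the previous hidden state
    return [math.tanh(a + b + c) for a, b, c in zip(from_input, from_memory, b_h)]


def run_rnn(xs: List[Vector], W_xh: Matrix, W_hh: Matrix, b_h: Vector,
            W_hy: Matrix, b_y: Vector) -> Tuple[List[Vector], Vector]:
    """Read the sequence in order, carrying the hidden state forward,
    and emit a prediction y_t = W_hy h_t + b_y after every step."""
    h = [0.0] * len(b_h)  # the "memory" starts empty (all zeros)
    predictions = []
    for x in xs:
        h = rnn_step(x, h, W_xh, W_hh, b_h)
        predictions.append([a + b for a, b in zip(matvec(W_hy, h), b_y)])
    return predictions, h


# Hypothetical weights (illustration only): 1 input feature, 2 hidden units, 1 output.
W_xh = [[0.5], [-0.3]]
W_hh = [[0.1, 0.2], [0.0, 0.4]]
b_h = [0.0, 0.0]
W_hy = [[1.0, -1.0]]
b_y = [0.0]

sequence = [[1.0], [0.5], [-0.2]]
preds, h_final = run_rnn(sequence, W_xh, W_hh, b_h, W_hy, b_y)
_, h_reversed = run_rnn(sequence[::-1], W_xh, W_hh, b_h, W_hy, b_y)

print("per-step predictions:", preds)
print("final state (original order):", h_final)
print("final state (reversed order):", h_reversed)
```

Because the hidden state `h` is fed back into every step, the same inputs presented in a different order produce a different final state. This is how the network's memory comes to reflect the order of the sequence.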