What is the purpose of activation functions in neural networks?

Activation functions are a core component of neural networks, especially in deep learning. They introduce non-linearity into the network, which lets it learn and represent complex patterns in data. Without them, a neural network would behave like a simple linear model, no matter how many layers it has.

An activation function takes the weighted sum of a neuron’s inputs (plus a bias term) and applies a non-linear transformation to it. This transformation is what lets neural networks model complex relationships, which in turn allows them to tackle hard problems in computer vision, natural language processing, and predictive analytics.
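To make the two steps concrete, here is a minimal sketch of a single neuron in plain Python. It assumes a sigmoid as the non-linearity, though any activation discussed below could be swapped in. The function `neuron_output` and the input, weight, and bias values are hypothetical and chosen only for illustration.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic function: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias, activation=sigmoid):
    """Compute one neuron's output: activation(w . x + b)."""
    # Step 1: weighted sum of the inputs plus the bias (a purely linear operation).
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    # Step 2: the activation function applies a non-linear transformation.
    return activation(z)

# Hypothetical example values, chosen only for illustration.
x = [0.5, -1.0, 2.0]
w = [0.4, 0.3, -0.2]
b = 0.1
print(neuron_output(x, w, b))
```

With these values the weighted sum is -0.4, so the neuron outputs about 0.401.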

Because they are non-linear, activation functions make neural networks well suited to real-world problems in machine learning and artificial intelligence. They are also differentiable (or, like ReLU, differentiable almost everywhere). This means their gradients can be computed when training deep neural networks with backpropagation.

Understanding Neural Networks

Neural networks are central to Machine Learning and Artificial Intelligence. They are loosely inspired by the structure and function of the brain. A network consists of interconnected nodes (neurons) that work together to process data. This lets machines learn from examples and make accurate predictions.

Elements of a Neural Network

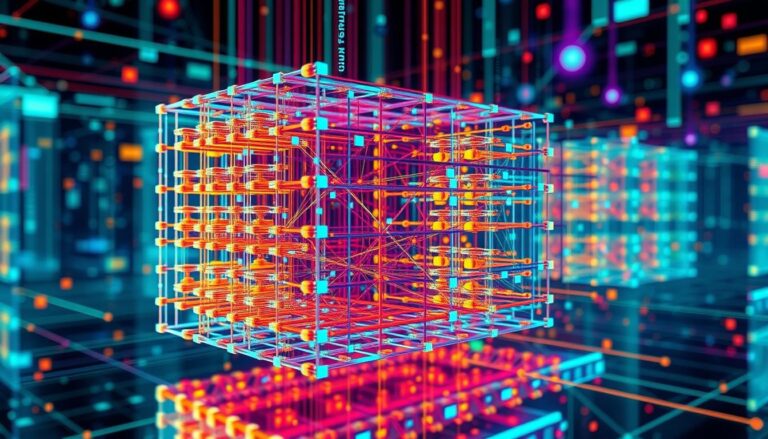

A neural network has an input layer, one or more hidden layers, and an output layer. The input layer receives the data. The hidden layers then transform it through successive computations. Finally, the output layer produces the prediction or classification.

This flow of data from input to output is called feedforward propagation. To learn, neural networks also use backpropagation. Backpropagation computes how much each weight and bias contributed to the error. An optimizer such as gradient descent then adjusts those parameters to bring the outputs closer to the right answers. This is central to Supervised Learning, where it lets the network improve over time.

| Key Element | Description |
|---|---|
| Input Layer | Receives the input data to be processed by the network. |
| Hidden Layer(s) | Performs complex computations and transformations on the data. |
| Output Layer | Provides the final prediction or classification result. |
| Feedforward Propagation | The forward flow of information from input to output. |
| Backpropagation | The process of computing the gradients of the error with respect to the network’s weights and biases, which are then used to adjust them so the difference between predicted and desired outputs shrinks. |
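The feedforward and backpropagation steps in the table can be sketched for a tiny network with two inputs, two hidden neurons, and one output. This is a minimal illustration, not a reference implementation. The following are all assumptions:

- sigmoid activations
- a squared-error loss
- a learning rate of 0.5
- all of the weight values

The names `forward`, `train_step`, and `loss` are hypothetical. Backpropagation computes the gradients with the chain rule, and plain gradient descent then applies the updates.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, W1, b1, w2, b2):
    """Feedforward: input layer -> one hidden layer -> single output neuron."""
    # Hidden layer: each row of W1 holds one hidden neuron's weights.
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    # Output layer: one sigmoid neuron reading the hidden activations.
    y_hat = sigmoid(sum(w * hi for w, hi in zip(w2, h)) + b2)
    return h, y_hat

def train_step(x, y, W1, b1, w2, b2, lr=0.5):
    """One backpropagation pass plus a gradient-descent update for loss = 0.5 * (y_hat - y)**2."""
    h, y_hat = forward(x, W1, b1, w2, b2)
    # Output error signal: dL/dz_out = (y_hat - y) * sigmoid'(z_out), with sigmoid' = s * (1 - s).
    delta_out = (y_hat - y) * y_hat * (1.0 - y_hat)
    # Chain rule: propagate the error back through w2 and each hidden sigmoid.
    delta_h = [delta_out * w2[j] * h[j] * (1.0 - h[j]) for j in range(len(h))]
    # Gradient-descent updates (all gradients were computed from the old weights).
    new_w2 = [w2[j] - lr * delta_out * h[j] for j in range(len(h))]
    new_b2 = b2 - lr * delta_out
    new_W1 = [[W1[j][i] - lr * delta_h[j] * x[i] for i in range(len(x))] for j in range(len(W1))]
    new_b1 = [b1[j] - lr * delta_h[j] for j in range(len(b1))]
    return new_W1, new_b1, new_w2, new_b2

def loss(x, y, params):
    _, y_hat = forward(x, *params)
    return 0.5 * (y_hat - y) ** 2

# Hypothetical starting weights (W1, b1, w2, b2) and a single training example.
params = ([[0.1, -0.2], [0.4, 0.3]], [0.0, 0.0], [0.5, -0.5], 0.0)
x, y = [1.0, 0.5], 1.0
before = loss(x, y, params)
params = train_step(x, y, *params)
after = loss(x, y, params)
print(before, after)  # the loss drops after one update
```

Repeating `train_step` over many examples is, in essence, what training a network means. Real frameworks compute these gradients automatically rather than by hand.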

Why Do We Need Non-linear Activation Functions?

Without non-linear activation functions, a neural network acts like a simple linear model, no matter how many layers it has. The activation function introduces the non-linearity that lets the network learn and represent complex data patterns.

Mathematical Proof

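Composing two linear functions always yields another linear function (strictly, an affine one once bias terms are included). So a network built only from linear layers can’t learn non-linear patterns, however deep it is. The activation function also matters for the backpropagation algorithm. Its derivative appears in the gradients that backpropagation computes, and those gradients drive the updates to the network’s weights and biases.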
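The two-layer case can be written out explicitly. The symbols are notation introduced here for illustration: weight matrices W_1 and W_2, bias vectors b_1 and b_2, activation φ, and loss L. The collapse is a one-line calculation, and inserting φ shows where its derivative enters backpropagation:

$$
\begin{aligned}
\mathbf{y} &= W_2(W_1\mathbf{x} + \mathbf{b}_1) + \mathbf{b}_2
  = \underbrace{(W_2 W_1)}_{W'}\mathbf{x} + \underbrace{(W_2\mathbf{b}_1 + \mathbf{b}_2)}_{\mathbf{b}'}
  && \text{(no activation: a single affine layer)} \\
\mathbf{y} &= W_2\,\varphi(\mathbf{z}) + \mathbf{b}_2, \qquad \mathbf{z} = W_1\mathbf{x} + \mathbf{b}_1
  && \text{(with activation } \varphi\text{)} \\
\frac{\partial L}{\partial \mathbf{z}} &= \left(W_2^{\top}\frac{\partial L}{\partial \mathbf{y}}\right) \odot \varphi'(\mathbf{z}),
  \qquad \frac{\partial L}{\partial W_1} = \frac{\partial L}{\partial \mathbf{z}}\,\mathbf{x}^{\top}
\end{aligned}
$$

Once φ is non-linear, the second line generally cannot be rewritten as a single W′x + b′. Because φ′(z) multiplies every gradient flowing into the first layer, the choice of activation directly shapes training.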

A Stack Overflow question on this topic, posted 12 years ago, has been viewed more than 131,000 times and has 10 votes. The discussion highlights why activation functions matter: they are what allow a network to model non-linear relationships between variables.

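Pattern Recognition and Machine Learning by Christopher M. Bishop explains the role of squashing functions such as the sigmoid. Given enough hidden units, multilayer networks that use them can approximate any continuous function over a bounded input range to arbitrary accuracy. Without non-linear hidden units, the whole network reduces to a single linear model. For classification with a sigmoid output, that model is just Logistic Regression, which cannot learn complex patterns.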

In short, non-linear activation functions are crucial. They let neural networks learn and represent complex data patterns, so the networks can outperform simple linear models and accurately approximate a wide range of functions.

Linear Activation Function

The simplest activation function in neural networks and machine learning is the linear activation function. In its basic form it is the identity function, f(x) = x, which passes the input to the output unchanged. This simplicity might seem appealing, but it has serious limits in artificial intelligence and deep learning.

The linear activation function can output any value from -∞ to +∞, so it can’t keep values within a bounded range. It also can’t capture complex, non-linear relationships in data. Its derivative is a constant that doesn’t depend on the input. As a result, during backpropagation (the algorithm used to train neural networks) the gradient it passes back carries no information about the input value.
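A minimal sketch of the linear activation and its derivative in plain Python follows. The function names and the slope parameter `a` are illustrative assumptions; with `a = 1.0` the function is the identity.

```python
def linear(x: float, a: float = 1.0) -> float:
    """Linear activation f(x) = a * x; with a = 1 this is the identity function."""
    return a * x

def linear_derivative(x: float, a: float = 1.0) -> float:
    """The derivative is the constant a, regardless of the input x."""
    return a

inputs = [-1000.0, -1.0, 0.0, 2.5, 1e6]
print([linear(v) for v in inputs])             # outputs are unbounded and equal the inputs
print([linear_derivative(v) for v in inputs])  # [1.0, 1.0, 1.0, 1.0, 1.0]
```

The second line of output shows that the derivative is the same for every input: the gradient carries no information about where on the curve the input fell.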

The main reason linear functions aren’t used in hidden layers is that they make all layers collapse into one: a stack of linear layers computes exactly what a single linear layer could. As a result, a multi-layer network can’t learn the complex patterns in data. It’s like baking a multi-layer cake that still tastes like a single layer.
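The collapse can be checked numerically. The sketch below uses hypothetical weights for a two-layer network with identity activations. It builds the equivalent single layer with W = W2·W1 and b = W2·b1 + b2, then compares the outputs of the two. The small matrix helpers are written in plain Python to keep the example self-contained. In practice a library such as NumPy would handle this arithmetic.

```python
def matmul(A, B):
    """Multiply matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def matvec(A, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

def add(u, v):
    return [a + b for a, b in zip(u, v)]

# Hypothetical weights: 2 inputs -> 3 hidden units -> 1 output, identity activations.
W1 = [[1.0, 2.0], [0.5, -1.0], [3.0, 0.0]]
b1 = [0.1, 0.2, -0.3]
W2 = [[1.0, -1.0, 0.5]]
b2 = [0.05]

def two_linear_layers(x):
    return add(matvec(W2, add(matvec(W1, x), b1)), b2)

# The equivalent single layer: W = W2 @ W1, b = W2 @ b1 + b2.
W = matmul(W2, W1)
b = add(matvec(W2, b1), b2)

def one_linear_layer(x):
    return add(matvec(W, x), b)

for x in ([1.0, 2.0], [-0.5, 4.0], [0.0, 0.0]):
    print(two_linear_layers(x), one_linear_layer(x))  # same values up to rounding
```

Here the two "layers" reduce to f(x) = 2·x1 + 3·x2 - 0.2. Adding more linear layers would still leave a single linear map.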

Non-linear functions such as sigmoid, tanh, and ReLU work better in hidden layers. They let the network learn and represent complex relationships. This helps it make more accurate predictions and classifications across many machine learning and artificial intelligence tasks.

| Activation Function | Output Range | Typical Applications |
|---|---|---|
| Linear | (-∞, ∞) | Output layer, when the target variable is continuous (regression) |
| Sigmoid/Logistic | (0, 1) | Output layer for binary classification, predicting probabilities |
| Tanh | (-1, 1) | Hidden layers, where zero-centered outputs are useful |
| ReLU | [0, ∞) | Hidden layers, especially in convolutional neural networks |

Deep Learning: Non-Linear Activation Functions

In neural networks and deep learning, choosing the right activation functions matters. Linear activation functions have their place, for example in the output layer of a regression model, but non-linear activation functions are vital for solving complex problems.

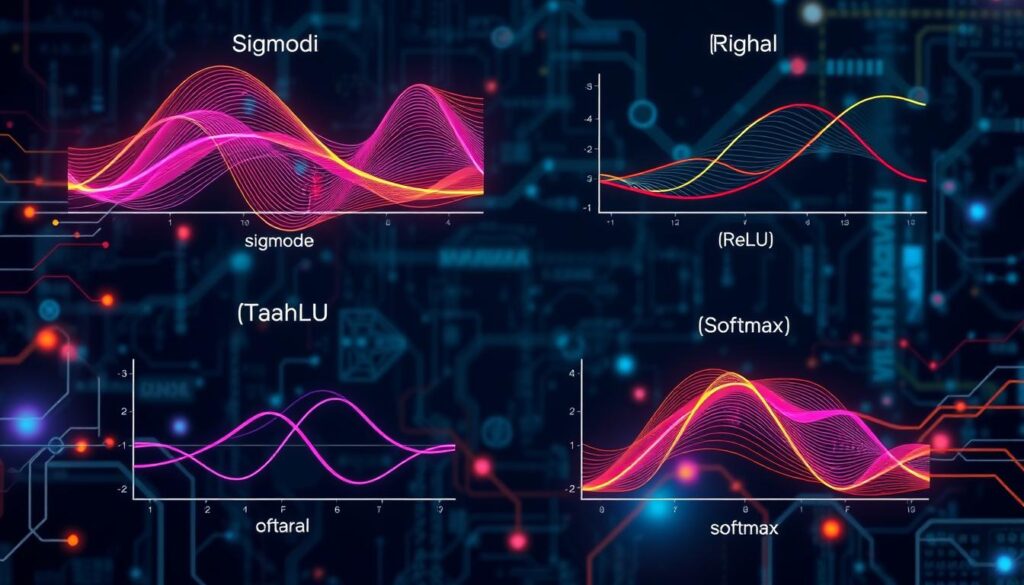

Sigmoid/Logistic Function

The Sigmoid/Logistic function, σ(x) = 1 / (1 + e^(-x)), is one of the classic non-linear activation functions. It maps any real input to a value between 0 and 1. This makes it well suited to binary classification, where the output is interpreted as the probability of the positive class.

Like other non-linear activations, it lets the network learn complex relationships in the data.
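A minimal sketch of the sigmoid and its derivative in plain Python follows. The sign-based branch is a common numerical-stability trick: it is an implementation choice, not part of the definition. The 0.5 decision threshold and the logit values are illustrative assumptions.

```python
import math

def sigmoid(x: float) -> float:
    """Logistic function 1 / (1 + e^(-x)), mapping any real x into (0, 1)."""
    # Branch on the sign so math.exp never receives a large positive argument (avoids OverflowError).
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def sigmoid_derivative(x: float) -> float:
    """d/dx sigmoid(x) = sigmoid(x) * (1 - sigmoid(x)); its maximum is 0.25 at x = 0."""
    s = sigmoid(x)
    return s * (1.0 - s)

# Interpreting the output as a probability for binary classification (hypothetical 0.5 threshold).
for logit in (-3.0, 0.0, 3.0):
    p = sigmoid(logit)
    print(f"logit={logit:+.1f}  P(class 1)={p:.3f}  predicted class={int(p >= 0.5)}")
```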

Tanh Function

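The Tanh (Hyperbolic Tangent) function is another common choice. It maps inputs to the range (-1, 1), and its outputs are zero-centered, which tends to make optimization in hidden layers easier than with the sigmoid. Like the sigmoid, its non-linearity helps the network learn complex patterns.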
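Python’s standard library already provides `math.tanh`. The sketch below adds the derivative and checks the identity tanh(x) = 2σ(2x) - 1, which shows that tanh is a rescaled, zero-centered sigmoid. The helper names are illustrative.

```python
import math

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def tanh_derivative(x: float) -> float:
    """d/dx tanh(x) = 1 - tanh(x)**2; its maximum value is 1, at x = 0."""
    t = math.tanh(x)
    return 1.0 - t * t

xs = [-2.0, -0.5, 0.0, 0.5, 2.0]
# Outputs lie in (-1, 1) and are symmetric about zero: tanh(-x) = -tanh(x).
print([round(math.tanh(x), 4) for x in xs])

# Tanh is a shifted, rescaled sigmoid: tanh(x) = 2 * sigmoid(2x) - 1.
for x in xs:
    assert abs(math.tanh(x) - (2.0 * sigmoid(2.0 * x) - 1.0)) < 1e-12

print(tanh_derivative(0.0))  # 1.0
```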

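Both Sigmoid and Tanh are smooth and differentiable everywhere, so their gradients are easy to compute during backpropagation, the algorithm used to train deep learning models. Their non-linearity lets the network capture complex data relationships. However, both functions saturate: for inputs far from zero their derivatives approach 0, and the sigmoid’s derivative never exceeds 0.25. This can shrink gradients as they pass back through many layers, which is known as the vanishing gradient problem.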
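The saturation effect can be seen numerically. The sketch below is a deliberate simplification, not how a real network’s gradient is computed. It ignores the weights and multiplies one activation derivative per layer, which is the factor the chain rule contributes at each sigmoid layer. The depth of 10 is an arbitrary illustrative choice.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_derivative(x):
    s = sigmoid(x)
    return s * (1.0 - s)

def tanh_derivative(x):
    return 1.0 - math.tanh(x) ** 2

# Saturation: far from zero, both derivatives are close to 0.
for x in (0.0, 2.0, 5.0):
    print(f"x={x}: sigmoid'={sigmoid_derivative(x):.4f}  tanh'={tanh_derivative(x):.4f}")

# Simplified chain-rule view (weights ignored): one activation derivative per layer.
# Even at the sigmoid's best case (x = 0, derivative 0.25) the factor shrinks geometrically.
depth = 10
factor = math.prod(sigmoid_derivative(0.0) for _ in range(depth))
print(f"sigmoid, {depth} layers, best case: {factor:.2e}")  # 9.54e-07
```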

Rectified Linear Unit (ReLU)

The Rectified Linear Unit (ReLU) is one of the most widely used non-linear activation functions in deep learning today. It has become a default choice for training complex neural networks in machine learning and artificial intelligence.

ReLU is defined as f(x) = max(0, x): it outputs the input if it’s positive and 0 otherwise. This non-linear behavior lets the network learn complex data relationships. Networks using ReLU typically train faster than those using Sigmoid or Tanh. Because many neurons output exactly 0 for a given input, ReLU also produces sparse activations, which can make networks more computationally efficient.
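Here is a minimal plain-Python sketch of ReLU and its derivative. The pre-activation values are hypothetical. Returning 0 for the derivative at exactly x = 0 is a convention, since the derivative is undefined there.

```python
def relu(x: float) -> float:
    """ReLU(x) = max(0, x): passes positive inputs through, zeroes out the rest."""
    return max(0.0, x)

def relu_derivative(x: float) -> float:
    """1 for x > 0, 0 for x < 0; undefined at x = 0, where 0 is a common convention."""
    return 1.0 if x > 0 else 0.0

pre_activations = [-2.0, -0.5, 0.0, 0.7, 3.0, -1.2]  # hypothetical weighted sums
activations = [relu(z) for z in pre_activations]
print(activations)  # [0.0, 0.0, 0.0, 0.7, 3.0, 0.0]

# Sparsity: the fraction of units whose output is exactly zero.
sparsity = sum(a == 0.0 for a in activations) / len(activations)
print(f"fraction of inactive units: {sparsity:.2f}")  # 0.67
```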

Some key advantages of the ReLU activation function include:

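- Computational Efficiency: ReLU needs only a comparison with zero. This makes it cheaper to compute than Sigmoid or Tanh, which involve exponentials.

- Gradient Preservation: For positive inputs its derivative is exactly 1, so it does not saturate there. This mitigates the vanishing gradient problem and improves backpropagation in deep networks.

- Sparsity: Negative inputs map to exactly 0, so only a subset of neurons is active for any given input. This leads to more efficient representations.

- Unbounded Output: Because positive outputs are not capped, ReLU preserves the magnitude of large activations when that matters.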
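The gradient-preservation and unbounded-output points can be illustrated with a simplified chain-rule product that ignores the weights, an assumption made for clarity. Along a path of active units, each ReLU layer contributes a factor of exactly 1. Each sigmoid layer contributes at most 0.25. The input values and the depth are illustrative.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def relu(x):
    return max(0.0, x)

def relu_derivative(x):
    return 1.0 if x > 0 else 0.0

depth = 10
# Per-layer derivative product (weights ignored) along a path of active units with input 1.0.
relu_factor = math.prod(relu_derivative(1.0) for _ in range(depth))
sig_factor = math.prod(sigmoid(1.0) * (1.0 - sigmoid(1.0)) for _ in range(depth))
print(relu_factor, f"{sig_factor:.2e}")  # ReLU keeps the factor at 1.0; sigmoid shrinks it

# Unbounded output: ReLU keeps the magnitude of large activations, sigmoid squashes them toward 1.
for z in (5.0, 50.0, 500.0):
    print(z, relu(z), round(sigmoid(z), 6))
```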

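ReLU is the go-to choice for many deep learning tasks, but it has drawbacks. The first is the “dying ReLU” problem. A neuron whose weighted input is negative for every example always outputs 0 and receives a zero gradient, so it stops learning. ReLU’s outputs are also not zero-centered, which can make training trickier. Variants such as Leaky ReLU, Parametric ReLU (PReLU), and Exponential Linear Unit (ELU) were developed to address these issues.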
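Minimal sketches of two of these variants follow. The default slopes (alpha = 0.01 for Leaky ReLU and alpha = 1.0 for ELU) are common choices, but they are assumptions here. PReLU has the same form as Leaky ReLU except that alpha is learned during training, so it is not shown separately. The negative inputs stand for a unit that would be "dead" under plain ReLU.

```python
import math

def relu(x: float) -> float:
    return max(0.0, x)

def leaky_relu(x: float, alpha: float = 0.01) -> float:
    """Leaky ReLU: a small slope alpha for negative inputs instead of a flat 0."""
    return x if x > 0 else alpha * x

def leaky_relu_derivative(x: float, alpha: float = 0.01) -> float:
    return 1.0 if x > 0 else alpha

def elu(x: float, alpha: float = 1.0) -> float:
    """ELU: a smooth exponential curve for negative inputs, saturating at -alpha."""
    return x if x > 0 else alpha * (math.exp(x) - 1.0)

# A "dead" unit: if its weighted sum is negative for every input, plain ReLU outputs 0
# and passes back a 0 gradient, so its incoming weights stop being updated.
negative_inputs = [-3.0, -1.0, -0.2]
print([relu(z) for z in negative_inputs])                   # all 0.0
print([leaky_relu_derivative(z) for z in negative_inputs])  # small but non-zero gradient
print([round(elu(z), 4) for z in negative_inputs])          # negative outputs, not a flat 0
```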

In summary, ReLU is a crucial non-linear activation function in deep learning. Its efficiency, gradient preservation, and sparse activation patterns make it a top choice for many neural networks in machine learning and artificial intelligence.

Conclusion

Activation functions are central to the success of neural networks. By introducing non-linearity, they let networks learn complex data patterns that simple linear models cannot capture.

This is crucial for tackling tough challenges in many fields. These include computer vision, natural language processing, and predictive analytics.

Knowing how the common activation functions, such as Sigmoid, Tanh, and ReLU, behave is essential for designing and training deep learning models.

These models have led to big leaps in Artificial Intelligence. AI is now used in healthcare, finance, and smart cities.

Understanding neural networks and activation functions is vital. It helps us see what AI can do and its limits. This knowledge is important for developing AI responsibly and ethically.