What is overfitting and how can it be avoided in machine learning models?

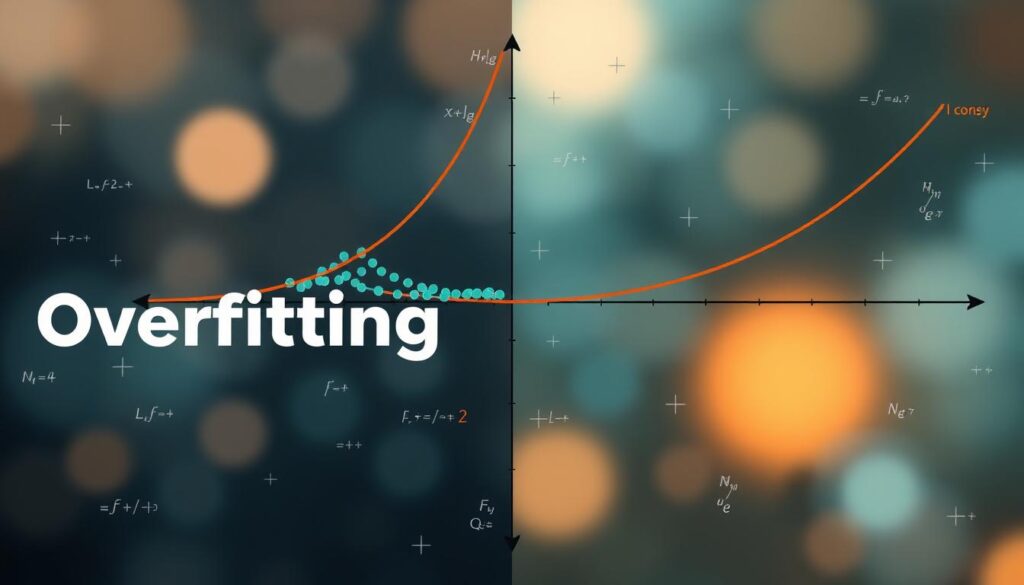

Overfitting is a central challenge across machine learning, from classical models to deep neural networks. It happens when a model fits the training data too closely, picking up noise and random fluctuations along with the real patterns.

As a result, the model performs poorly on new data. It’s like memorizing the answers to a practice exam instead of learning the subject: the practice score is perfect, but new questions expose the gap.

Overfitting degrades a model’s performance. It makes predictions less reliable and more sensitive to small changes in the data. Finding the right balance between bias (error from a model that is too simple) and variance (error from a model that reacts too strongly to its particular training set) is key to making models that work well with new data.

Beating overfitting is essential for making reliable machine learning models. This is true for tasks like Natural Language Processing, Computer Vision, and Predictive Analytics. By understanding what causes overfitting and how to fix it, data scientists can make models that give accurate predictions on new data.

Understanding Overfitting in Machine Learning

In machine learning, overfitting happens when a model learns the training data too well. It picks up not just the patterns but also the noise. This makes it hard for the model to work well with new data. It’s key to understand overfitting to make strong machine learning models.

What is Overfitting?

Overfitting occurs when a machine learning model is too complex for the amount and quality of the data it is trained on. It captures the data’s noise along with the patterns, so it does great on the training data but fails with new data.

Consequences of Overfitting

The main issue with overfitting is that the model can’t generalize well. It loses predictive power on new data because its predictions are tuned to quirks of the training set. These models are also less resilient and more sensitive to changes in the data. They might memorize the training data instead of learning general patterns, making them less useful in real-world scenarios.

Why Does Overfitting Occur?

Overfitting is a big problem in machine learning models. It happens because of how complex the model is, the quality of the data, and how it learns. Several key factors can lead to overfitting:

- Model Complexity: A model that’s too complex for the data it has might just remember the data. It won’t learn the real patterns, leading to bad performance on new data.

- Inadequate Data: If there’s not enough data, the model can’t learn the true data patterns. This leads to overfitting.

- Noisy Data: Data with outliers or useless information can make the model focus on these. This causes overfitting.

- Lack of Regularization: Using the right regularization techniques is key. They help keep the model simple and prevent overfitting.

- Feature Engineering Issues: Using features that don’t matter or not picking the best ones can also cause overfitting.

These issues make the model remember the training data instead of learning the real patterns. This results in poor performance on new data. It’s important to understand and fix these problems to make machine learning models better.

| Cause of Overfitting | Explanation |
|---|---|
| Model Complexity | A model that’s too complex for the data it has might just remember the data. It won’t learn the real patterns, leading to bad performance on new data. |
| Inadequate Data | If there’s not enough data, the model can’t learn the true data patterns. This leads to overfitting. |
| Noisy Data | Data with outliers or useless information can make the model focus on these. This causes overfitting. |
| Lack of Regularization | Using the right regularization techniques is key. They help keep the model simple and prevent overfitting. |
| Feature Engineering Issues | Using features that don’t matter or not picking the best ones can also cause overfitting. |

Detecting Overfitting in Machine Learning

Spotting and fixing overfitting is key to making strong machine learning models. The standard way to detect it is to hold out data: split the data into parts, train the model on some, and check its work on the rest. This shows whether the model can handle new data well.

Cross-Validation

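In k-fold cross-validation, the data is split into k roughly equal parts (folds). The model is trained k times, each time using k-1 folds for training and the remaining fold for validation, so every fold serves as the validation set exactly once. Averaging the k scores gives a more reliable estimate of the model’s performance than a single split and shows whether it can handle new data.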
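The procedure can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the helper names (`k_fold_indices`, `fit_line`, `cross_val_mse`), the straight-line model, and the synthetic data are all assumptions made for the example.

```python
import random


def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_indices, validation_indices) for each of the k folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Fold i takes every k-th shuffled index, so fold sizes differ by at most one.
    folds = [indices[i::k] for i in range(k)]
    for i, validation in enumerate(folds):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, validation


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def cross_val_mse(xs, ys, k=5):
    """Return the per-fold validation MSE of the line model and its mean."""
    fold_scores = []
    for train, validation in k_fold_indices(len(xs), k):
        slope, intercept = fit_line([xs[i] for i in train], [ys[i] for i in train])
        squared_errors = [(ys[i] - (slope * xs[i] + intercept)) ** 2 for i in validation]
        fold_scores.append(sum(squared_errors) / len(squared_errors))
    return fold_scores, sum(fold_scores) / len(fold_scores)


# Synthetic data (an assumption for the demo): y = 2x + 1 plus Gaussian noise.
rng = random.Random(42)
xs = [i / 10 for i in range(60)]
ys = [2.0 * x + 1.0 + rng.gauss(0, 0.5) for x in xs]

fold_scores, mean_score = cross_val_mse(xs, ys, k=5)
print("validation MSE per fold:", [round(s, 3) for s in fold_scores])
print("mean validation MSE:", round(mean_score, 3))
```

A large spread between the per-fold scores, or a mean validation error far above the training error, is the signal to look for.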

Train-Test Split

The train-test split method also helps find overfitting. It divides the data into two parts: one for training and one for testing. If the model does much better on the training data than the test data, it’s likely overfitting.
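A small experiment makes the train-versus-test gap concrete. The sketch below is illustrative only: the data is synthetic (labels flipped 20% of the time), and a 1-nearest-neighbour "memorizer" stands in for an overly complex model. All function names are made up for this example.

```python
import random


def make_data(n, rng, noise=0.2):
    """x is uniform in [0, 1); the label is 1 when x > 0.5, flipped with probability `noise`."""
    data = []
    for _ in range(n):
        x = rng.random()
        label = int(x > 0.5)
        if rng.random() < noise:
            label = 1 - label
        data.append((x, label))
    return data


def train_test_split(data, test_fraction, rng):
    """Shuffle a copy of the data and return (train, test)."""
    shuffled = data[:]
    rng.shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def memorizer_predict(train, x):
    """1-nearest-neighbour: copy the label of the closest training point."""
    return min(train, key=lambda point: abs(point[0] - x))[1]


def memorizer_accuracy(train, data):
    """Fraction of `data` that the memorizer (built from `train`) labels correctly."""
    hits = sum(memorizer_predict(train, x) == y for x, y in data)
    return hits / len(data)


rng = random.Random(0)
train, test = train_test_split(make_data(1000, rng), test_fraction=0.5, rng=rng)

train_acc = memorizer_accuracy(train, train)  # every point is its own nearest neighbour
test_acc = memorizer_accuracy(train, test)
print(f"train accuracy: {train_acc:.2f}  test accuracy: {test_acc:.2f}")
```

The memorizer reproduces every training label, including the flipped ones, so its training accuracy is perfect while its test accuracy is clearly lower. That gap is the symptom the train-test split is designed to expose.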

Using these methods, machine learning experts can spot and fix overfitting. This makes sure their models can make good predictions on data they haven’t seen before.

Preventing Overfitting in Machine Learning

Building accurate machine learning models is a big challenge. Overfitting happens when a model learns too much from the training data. This makes it fail on new data. To avoid this, experts use several important techniques.

Regularization Techniques

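Regularization adds a penalty on the size of the model’s coefficients to the loss function, which discourages the model from leaning heavily on features that don’t help much. L1 regularization (Lasso) penalizes the sum of the absolute values of the coefficients. This can shrink some coefficients all the way to zero, so the model focuses on key features and ignores the rest. L2 regularization (Ridge) penalizes the sum of the squared coefficients. It shrinks all coefficients toward zero without eliminating them, which keeps any single feature from dominating.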
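The different behaviour of the two penalties is easiest to see on a single coefficient. The sketch below assumes a simplified setting in which each coefficient can be treated independently (for example, orthonormal features), so the regularized value has a closed form. The function names and the example coefficients are illustrative, not taken from any library.

```python
def l1_penalty(weights, lam):
    """Lasso penalty: lam times the sum of absolute coefficient values."""
    return lam * sum(abs(w) for w in weights)


def l2_penalty(weights, lam):
    """Ridge penalty: lam times the sum of squared coefficient values."""
    return lam * sum(w * w for w in weights)


def lasso_shrink(w, lam):
    """Minimizer of 0.5 * (v - w)**2 + lam * |v| over v (soft-thresholding)."""
    if w > lam:
        return w - lam
    if w < -lam:
        return w + lam
    return 0.0  # small coefficients are set exactly to zero


def ridge_shrink(w, lam):
    """Minimizer of 0.5 * (v - w)**2 + 0.5 * lam * v**2 over v (proportional shrinkage)."""
    return w / (1.0 + lam)


# Hypothetical unregularized coefficients: two large, two small.
unregularized = [3.0, -0.2, 0.05, 1.5]
lam = 0.5

lasso = [lasso_shrink(w, lam) for w in unregularized]
ridge = [ridge_shrink(w, lam) for w in unregularized]
print("lasso:", lasso)
print("ridge:", [round(w, 3) for w in ridge])
```

With these inputs, L1 sets the two small coefficients exactly to zero, while L2 shrinks every coefficient by the same factor and leaves all of them nonzero.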

Data Augmentation

Data augmentation is another great way to stop overfitting. It creates new training samples by changing the existing data. For example, it can rotate or flip images. This makes the model better at handling different inputs, reducing overfitting.
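As a minimal sketch of the flips and rotations mentioned above, the example below treats an image as a plain list of pixel rows. Real pipelines would typically use an image library. The function names here are chosen for illustration, and the sketch assumes the label stays valid under these transformations.

```python
def flip_horizontal(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def flip_vertical(image):
    """Mirror the image top to bottom."""
    return [row[:] for row in image[::-1]]


def rotate_90_clockwise(image):
    """Rotate the image a quarter turn clockwise."""
    return [list(column) for column in zip(*image[::-1])]


def augment(image, label):
    """Return the original sample plus three transformed copies with the same label."""
    variants = [
        image,
        flip_horizontal(image),
        flip_vertical(image),
        rotate_90_clockwise(image),
    ]
    return [(variant, label) for variant in variants]


# A tiny 2x3 "image" of pixel intensities with a hypothetical label.
image = [
    [1, 2, 3],
    [4, 5, 6],
]
for variant, label in augment(image, "cat"):
    print(label, variant)
```

Each original sample now contributes four training examples, which gives the model less opportunity to latch onto one particular orientation.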

Ensemble Methods

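Ensemble learning is a strong method against overfitting. Ensemble methods combine the predictions of many models to improve performance. Bagging and boosting are two common techniques. Bagging trains many models in parallel, each on a different random sample of the training data drawn with replacement, and averages their predictions or takes a vote. This mainly reduces variance. Boosting trains a series of weak learners one after another, each focusing on the errors of the ones before it. This mainly reduces bias, and boosting can itself overfit if it runs for too many rounds.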
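Bagging in particular is simple enough to sketch directly. The example below is a toy version under stated assumptions: the base model is a one-threshold "decision stump" on a single feature, the data is synthetic with 10% label noise, and the names (`fit_stump`, `fit_bagged_stumps`, `bagged_predict`) are made up for this illustration.

```python
import random


def fit_stump(sample):
    """Choose the threshold t (from the sample's x values) that gives the
    fewest errors for the rule 'predict 1 if x > t'."""
    best_t, best_errors = None, None
    for t, _ in sample:
        errors = sum(int(x > t) != y for x, y in sample)
        if best_errors is None or errors < best_errors:
            best_t, best_errors = t, errors
    return best_t


def fit_bagged_stumps(data, n_models, rng):
    """Train each stump on its own bootstrap sample (drawn with replacement)."""
    models = []
    for _ in range(n_models):
        bootstrap = [rng.choice(data) for _ in data]
        models.append(fit_stump(bootstrap))
    return models


def bagged_predict(models, x):
    """Majority vote over all stumps."""
    votes = sum(int(x > t) for t in models)
    return int(2 * votes > len(models))


# Synthetic data: the label is 1 when x > 0.5, flipped 10% of the time.
rng = random.Random(1)
data = []
for _ in range(100):
    x = rng.random()
    y = int(x > 0.5)
    if rng.random() < 0.1:
        y = 1 - y
    data.append((x, y))

models = fit_bagged_stumps(data, n_models=25, rng=rng)  # odd count avoids tied votes
print("thresholds:", sorted(round(t, 2) for t in models))
print("prediction at x = 0.8:", bagged_predict(models, 0.8))
```

Each stump sees a slightly different dataset and so picks a slightly different threshold. The vote smooths out those individual quirks, which is the variance reduction bagging is known for.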

Using these techniques together helps machine learning experts. They can make models that work well on new data.

Machine Learning and Overfitting on AWS

Machine learning faces a big challenge: overfitting. Luckily, Amazon Web Services (AWS) has tools to tackle this issue. Amazon SageMaker, a top machine learning service, spots overfitting during training.

Amazon SageMaker checks data from training, like inputs and outputs. It alerts users about overfitting and other accuracy problems. This helps machine learning experts improve their models.

Amazon SageMaker Model Training can stop training automatically once the desired accuracy is reached. This helps prevent overfitting and saves time and money. It also gives users quick insights into model performance.
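The idea of stopping once a target is reached can be sketched independently of any platform. The function below is a generic illustration and not SageMaker's API: `train_with_early_stop` is a hypothetical name, the per-epoch validation accuracies are supplied as a plain list, and the `patience` rule (stop when accuracy has not improved for a few epochs) is an added assumption that goes beyond the target-accuracy rule described above.

```python
def train_with_early_stop(validation_accuracies, target_accuracy, patience=3):
    """Consume one validation accuracy per epoch and stop when the target is
    reached or when there has been no improvement for `patience` epochs.

    Returns (epochs_run, best_accuracy).
    """
    best = float("-inf")
    epochs_without_improvement = 0
    epochs_run = 0
    for accuracy in validation_accuracies:
        epochs_run += 1
        if accuracy > best:
            best = accuracy
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
        if best >= target_accuracy or epochs_without_improvement >= patience:
            break
    return epochs_run, best


# Made-up accuracy curves standing in for a real training loop.
reaches_target = [0.60, 0.70, 0.80, 0.90, 0.95]
plateaus = [0.60, 0.65, 0.64, 0.63, 0.62, 0.90]

print(train_with_early_stop(reaches_target, target_accuracy=0.80))
print(train_with_early_stop(plateaus, target_accuracy=0.90, patience=3))
```

In a real training loop the accuracies would come from evaluating the model on held-out data after each epoch. Stopping on validation performance rather than training performance is what keeps the extra epochs from turning into overfitting.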

In short, AWS offers tools to fight overfitting in machine learning. With Amazon SageMaker, teams can train models faster and cheaper. This leads to more accurate and reliable machine learning solutions.

Conclusion

Overfitting is a big problem in Machine Learning. It can make models less reliable and less effective. To tackle this, it’s key to understand bias, variance, and the balance between them.

Using methods like cross-validation, regularization, data augmentation, and ensemble methods helps. These strategies help models work well with new data.

Cloud-based Machine Learning platforms like AWS offer tools to fight overfitting. They make it easier to develop and use models. Learning how to avoid overfitting is crucial for making models that work well.

As Machine Learning grows, it’s important for researchers to be open and active. They should share their findings clearly and helpfully. This way, Machine Learning can help society and bring about new opportunities.