What is overfitting and how can it be avoided in machine learning models?

Overfitting is a common problem across machine learning, including deep learning and natural language processing. It happens when a model fits its training data so closely that it captures the noise and random fluctuations in the data along with the underlying patterns.

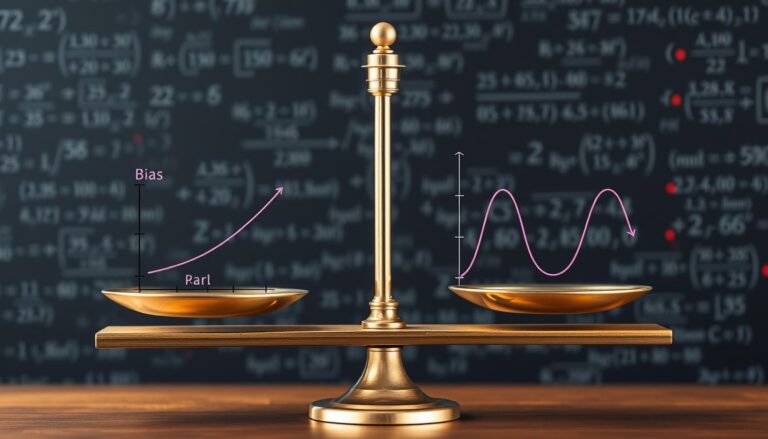

An overfit model predicts poorly on new data, because much of what it learned does not carry over beyond the training set. Building a good model means finding the right balance between bias and variance.

Understanding Overfitting in Machine Learning

A model overfits when it adapts to the specifics of its training set, including noise and random variation, rather than to the patterns that hold in general.

Such a model scores very well on the training data but fails on new, unseen data, because it depends on details that exist only in the training set.

What is Overfitting?

Overfitting is best understood through the bias-variance trade-off. High bias means the model is too simple and misses important patterns in the data, which is underfitting. High variance means the model is too complex and fits the noise in the training data, which is overfitting.
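The standard bias-variance decomposition makes this trade-off concrete. As a sketch, assume squared-error loss and data generated as $y = f(x) + \varepsilon$, where the noise $\varepsilon$ has zero mean and variance $\sigma^2$ (this notation is introduced here for illustration and does not come from the rest of the article). The expected error of a fitted model $\hat{f}$ at a point $x$, averaged over training sets, then splits into squared bias, variance, and irreducible noise:

```latex
\mathbb{E}\left[\left(y - \hat{f}(x)\right)^2\right]
  = \underbrace{\left(\mathbb{E}[\hat{f}(x)] - f(x)\right)^2}_{\text{bias}^2}
  + \underbrace{\mathbb{E}\left[\left(\hat{f}(x) - \mathbb{E}[\hat{f}(x)]\right)^2\right]}_{\text{variance}}
  + \underbrace{\sigma^2}_{\text{noise}}
```

An overfit model sits at the low-bias, high-variance end: its predictions change a lot when the training set changes.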

Consequences of Overfitting

Overfitting hurts the reliability and performance of machine learning models. It makes their predictions on new data less accurate, and it makes them less robust to changes in the input data.

Overfit models may also produce more false positives or false negatives, because they rely on details that are not actually predictive. They also tend to be larger and more complex than the problem requires, which makes them more expensive to train and run.

To avoid overfitting and make machine learning models better, we need to understand why it happens. We also need to know how to stop it. The next parts of this article will explore these topics in more detail.

Causes of Overfitting in Machine Learning

Overfitting usually arises when a model is flexible enough to learn the noise in its training data, so it performs well on the training data but not on new data. Several factors make this more likely.

Model Complexity

A complex model is often the main cause of overfitting. Too many parameters in a model let it fit the training data too well. This means it does great on the training data but fails with new data.
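The effect of model complexity can be sketched with two toy models fitted to the same noisy data: a straight line with two parameters, and a nearest-neighbour lookup that effectively stores one value per training point. The data generator, the noise level, and the function names below are assumptions made for this illustration.

```python
import random

def fit_line(xs, ys):
    """Ordinary least squares for y = a*x + b (a deliberately simple model)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    a = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return lambda x: a * x + (my - a * mx)

def fit_memorizer(xs, ys):
    """1-nearest-neighbour lookup: returns the target of the closest training point."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]

def mse(model, xs, ys):
    """Mean squared error of a model on the given points."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

rng = random.Random(0)  # fixed seed so the run is repeatable

def sample(n):
    """Hypothetical data: a linear trend plus Gaussian noise."""
    xs = [rng.uniform(0, 10) for _ in range(n)]
    ys = [2 * x + 1 + rng.gauss(0, 3) for x in xs]
    return xs, ys

x_train, y_train = sample(30)
x_test, y_test = sample(200)

for name, fit in [("line", fit_line), ("memorizer", fit_memorizer)]:
    model = fit(x_train, y_train)
    print(name, "train MSE:", round(mse(model, x_train, y_train), 2),
          "test MSE:", round(mse(model, x_test, y_test), 2))
```

The memorizer reaches zero training error by construction, since every training point is its own nearest neighbour. On data like this its test error is typically well above the line's, which is the train/test gap that characterizes overfitting.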

Inadequate Data

Not enough training data can also lead to overfitting. A small dataset makes it hard for the model to learn the real patterns. Instead, it memorizes the training data, leading to poor performance on new data.

Noisy Data

Noisy or irrelevant data in the training set can harm a model’s learning. Data with errors or outliers can confuse the model. This makes it fit to these irrelevant features, causing overfitting.

Lack of Regularization

Not using regularization can also cause overfitting. Regularization methods such as L1 or L2 penalize large parameter values, which keeps the model simpler and less prone to fitting quirks of the training data.

Sensitivity to Outliers

Models that are too sensitive to outliers are more likely to overfit. Outliers can greatly affect the model’s parameters. This makes it learn patterns that don’t really exist.
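A small worked example shows how much a single outlier can move a least-squares fit. The data points, including the corrupted one, are made up for this sketch.

```python
def fit_slope(xs, ys):
    """Least-squares slope of the line y = a*x + b."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx

clean_x = [1, 2, 3, 4, 5]
clean_y = [2, 4, 6, 8, 10]  # exactly y = 2x

# One hypothetical corrupted measurement appended to the clean data.
outlier_x = clean_x + [6]
outlier_y = clean_y + [40]  # the clean value would have been 12

slope_clean = fit_slope(clean_x, clean_y)
slope_outlier = fit_slope(outlier_x, outlier_y)
print("slope without outlier:", slope_clean)
print("slope with outlier:", slope_outlier)
```

With the single bad point included, the fitted slope roughly triples, from 2 to about 6. Squared-error losses weight large residuals heavily, which is one reason robust losses or outlier filtering are sometimes used instead.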

Improper Feature Engineering

Using irrelevant or redundant features can also cause overfitting. Good feature engineering is key. It involves choosing and transforming the most important features for effective generalization.

Understanding these causes helps machine learning experts tackle overfitting. They can then build more reliable and robust predictive models.

Detecting Overfitting

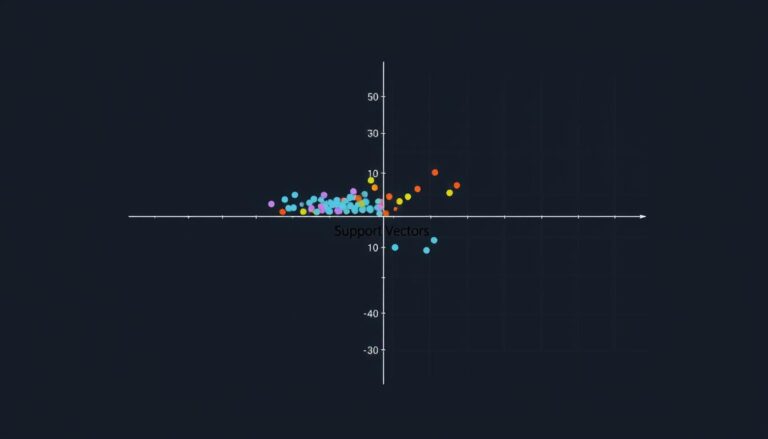

One key way to evaluate a predictive model is the train-test split. The data is divided into a training set and a test set. The model is trained on the training set and then evaluated on the test set.

If the model does much better on the training data than the test data, it shows overfitting.

Train-Test Split

A common choice is to put about 80% of the data in the training set, which is used to fit the model. The remaining 20% or so goes into the test set, which is used only to check accuracy on data the model has not seen.

A small gap between training and test performance is normal. A large gap is the sign of overfitting.
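The split itself takes only a few lines. The following is a minimal sketch using the standard library; the function name, the 20% default, and the fixed seed are choices made for this example rather than a prescribed API.

```python
import random

def train_test_split(rows, test_fraction=0.2, seed=0):
    """Shuffle a copy of the rows and cut it into (train, test) parts."""
    rows = list(rows)                     # copy so the caller's data is untouched
    random.Random(seed).shuffle(rows)     # shuffle to avoid ordering effects
    n_test = int(round(len(rows) * test_fraction))
    return rows[n_test:], rows[:n_test]

data = list(range(100))                   # stand-in for 100 labelled examples
train, test = train_test_split(data)
print(len(train), len(test))
```

The test rows must stay out of every training and tuning step. Once they influence the model, the test score stops being an honest estimate of performance on new data.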

Cross-Validation

Cross-validation is another good way to spot overfitting. It divides the training data into several parts, called folds. The model is trained on all folds but one and evaluated on the fold that was held out, and this is repeated until each fold has served as the validation set once.

Averaging the scores over the folds gives a better idea of how well the model will do on new data. It also helps in finding the best settings for the model without touching the holdout test set.
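As a sketch, k-fold cross-validation can be written as a generator of index splits plus a loop that fits and scores a model on each split. The helper names and the trivial mean-predicting model below are hypothetical and exist only to make the example runnable.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) pairs; every sample is validated exactly once."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, val_idx
        start += size

def cross_val_mse(xs, ys, k, fit):
    """Average validation MSE over k folds for a model-fitting function `fit`."""
    scores = []
    for train_idx, val_idx in k_fold_indices(len(xs), k):
        model = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        errors = [(model(xs[i]) - ys[i]) ** 2 for i in val_idx]
        scores.append(sum(errors) / len(errors))
    return sum(scores) / len(scores)

def fit_mean(xs, ys):
    """Toy model that always predicts the mean training target."""
    mean_y = sum(ys) / len(ys)
    return lambda x: mean_y

xs = list(range(10))
ys = [3.0] * 10
print(cross_val_mse(xs, ys, k=5, fit=fit_mean))
```

This version keeps the rows in their original order. In practice the data is usually shuffled first, unless the order carries meaning, as it does in time series.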

| Technique | Description | Benefits |
|---|---|---|
| Train-Test Split | Dividing the dataset into training and testing subsets | Provides a clear indication of overfitting by comparing model performance on the two sets |
| Cross-Validation | Iteratively training and evaluating the model on different data folds | Offers a more robust estimate of generalization performance and enables effective hyperparameter tuning |

It’s very important to find and fix overfitting. This ensures machine learning models can make good predictions on new data.

Preventing Overfitting in Machine Learning Models

To fight overfitting in machine learning, several methods are used. These strategies add constraints, regularize the model, or increase the training data. This leads to models that work better on new data.

Regularization Techniques

Regularization is key in avoiding overfitting. It adds penalties to the model’s loss function. This encourages simpler, more general patterns. L1 Regularization (Lasso) and L2 Regularization (Ridge) are two main types.

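L1 regularization adds the sum of the absolute values of the coefficients to the loss. It can drive some coefficients to exactly zero, which acts as a form of feature selection. L2 regularization adds the sum of the squared coefficients, which penalizes large values and shrinks all coefficients toward zero.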
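The two penalties, and the shrinking effect of L2, can be sketched for the simplest possible case: a single weight and no intercept. The function names and the regularization strength `lam` are assumptions for this example. For the L2 case, the minimizer of `sum((y - w*x)**2) + lam * w**2` has the closed form used in `ridge_slope`.

```python
def l1_penalty(weights, lam):
    """Lasso-style penalty: lam times the sum of absolute weights."""
    return lam * sum(abs(w) for w in weights)

def l2_penalty(weights, lam):
    """Ridge-style penalty: lam times the sum of squared weights."""
    return lam * sum(w * w for w in weights)

def ridge_slope(xs, ys, lam):
    """Closed-form minimiser of sum((y - w*x)^2) + lam * w^2 for one weight w."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)

xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]  # exactly y = 2x

for lam in (0.0, 1.0, 14.0):
    print("lam =", lam, "-> w =", ridge_slope(xs, ys, lam))
```

With `lam = 0` the fit recovers the unpenalized slope of 2. Larger values of `lam` pull the weight toward zero, and at `lam = 14` it has been halved to 1. Choosing `lam` is itself a tuning decision, usually made with cross-validation.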

Data Augmentation

Data augmentation helps prevent overfitting too. It expands the training set by applying label-preserving transformations, such as rotating or flipping images, to existing examples. Seeing these variations pushes the model to learn more general patterns.
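For images, the flips and rotations mentioned above can be sketched on a plain list-of-rows representation. The tiny 2x2 "image" and the helper names are illustrative assumptions; real pipelines would typically use an image library.

```python
def flip_horizontal(image):
    """Mirror each row left to right."""
    return [row[::-1] for row in image]

def rotate_90_clockwise(image):
    """Rotate the grid a quarter turn clockwise."""
    return [list(col) for col in zip(*image[::-1])]

def augment(image):
    """Return the original plus two transformed copies."""
    return [image, flip_horizontal(image), rotate_90_clockwise(image)]

image = [[1, 2],
         [3, 4]]

for variant in augment(image):
    print(variant)
```

Each variant keeps the label of the original example, so this only makes sense when the transformation does not change the meaning. Flipping a photo of a cat is safe, while flipping a handwritten digit or a street sign may not be.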

Feature Selection

Picking the right features can also reduce overfitting. Techniques like Recursive Feature Elimination help find the most important features. This makes the model better at handling new data.
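Recursive Feature Elimination repeatedly refits a model and drops the weakest feature. As a much simpler stand-in, the sketch below uses a correlation filter: it ranks features by the absolute Pearson correlation with the target and keeps the top few. This is a different and cruder technique than RFE, and the feature names and values are made up for the example.

```python
def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    return cov / (sx * sy)  # note: fails for a constant column (zero spread)

def select_top_features(columns, target, keep):
    """Return the names of the `keep` features most correlated with the target."""
    ranked = sorted(columns,
                    key=lambda name: abs(pearson(columns[name], target)),
                    reverse=True)
    return ranked[:keep]

# Hypothetical dataset: three candidate features and one target.
columns = {
    "signal": [1, 2, 3, 4, 5, 6],
    "weak": [1, 3, 2, 5, 4, 6],
    "noise": [1, -1, 1, -1, 1, -1],
}
target = [2, 4, 6, 8, 10, 12]

print(select_top_features(columns, target, keep=2))
```

A filter like this looks at one feature at a time, so it can miss features that are only useful in combination. Model-based methods such as RFE address that at a higher computational cost.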

Ensemble Methods

Ensemble methods like bagging and boosting are also useful. Bagging trains many learners, each on a random resample of the training data, and combines their predictions. Averaging smooths out the individual models’ errors and reduces variance.
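Bagging can be sketched in a few lines: draw bootstrap samples (sampling with replacement), fit one learner per sample, and average the predictions. The nearest-neighbour base learner, the number of models, and the toy data are assumptions chosen to keep the example short.

```python
import random

def fit_nearest(xs, ys):
    """High-variance base learner: predict the target of the closest training point."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]

def fit_bagged(xs, ys, fit, n_models=25, seed=0):
    """Fit `n_models` learners on bootstrap samples and average their predictions."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]  # bootstrap: sample with replacement
        models.append(fit([xs[i] for i in idx], [ys[i] for i in idx]))
    return lambda x: sum(m(x) for m in models) / len(models)

xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
ys = [0.1, 0.9, 2.2, 2.8, 4.1, 5.0]

single = fit_nearest(xs, ys)
bagged = fit_bagged(xs, ys, fit_nearest)
print("single learner at 2.5:", single(2.5))
print("bagged ensemble at 2.5:", bagged(2.5))
```

Each bootstrap sample leaves out some points, so the individual learners disagree. Averaging them gives a smoother prediction than any single learner.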

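Boosting trains learners one after another, with each new learner focusing on the mistakes of the previous ones. The combined ensemble is usually more accurate than any individual learner. Boosting mainly reduces bias, though, and it can itself overfit if it runs for too many rounds.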

Comparing training and test accuracy shows whether a model is still overfitting:

| Model | Training Accuracy | Test Accuracy | Overfitting Diagnosis |
|---|---|---|---|
| A | 99.9% | 95% | Likely overfitting |
| B | 87% | 87% | No overfitting |
| C | 99.9% | 45% | Severe overfitting |
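The diagnosis column in the table above can be approximated with a simple rule on the gap between training and test accuracy. The thresholds of 3 and 20 percentage points below are hypothetical values picked to reproduce the table, not established cut-offs. A sensible threshold depends on the task and on how noisy the test estimate is.

```python
def diagnose(train_acc, test_acc, mild_gap=3.0, severe_gap=20.0):
    """Label a model by its train/test accuracy gap, in percentage points.

    The default thresholds are illustrative assumptions, not standard values.
    """
    gap = train_acc - test_acc
    if gap >= severe_gap:
        return "severe overfitting"
    if gap >= mild_gap:
        return "likely overfitting"
    return "no clear sign of overfitting"

# Accuracies taken from the table: (training %, test %).
results = {"A": (99.9, 95.0), "B": (87.0, 87.0), "C": (99.9, 45.0)}

for name, (train_acc, test_acc) in results.items():
    print(name, diagnose(train_acc, test_acc))
```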

Using these techniques, machine learning experts can prevent overfitting. They create models that perform well on new data.

Conclusion

Overfitting affects both how well machine learning models perform and how far their predictions can be trusted. Knowing why it happens helps us build better models.

Regularization, data augmentation, feature selection, and ensemble methods all help fight overfitting. These techniques help models work well on new data.

It’s important to keep checking how well models perform. We should evaluate them regularly on held-out data and adjust our approach when performance drops.

The world of machine learning is always changing. It’s key for researchers to share their findings with everyone. We also need to teach AI basics in schools. Governments should help make sure AI is used for good.

By staying alert and working together, we can make the most of machine learning. This will help us make progress for everyone.