What Is Dependency Parsing, and How Does It Aid in Sentence Structure Analysis?

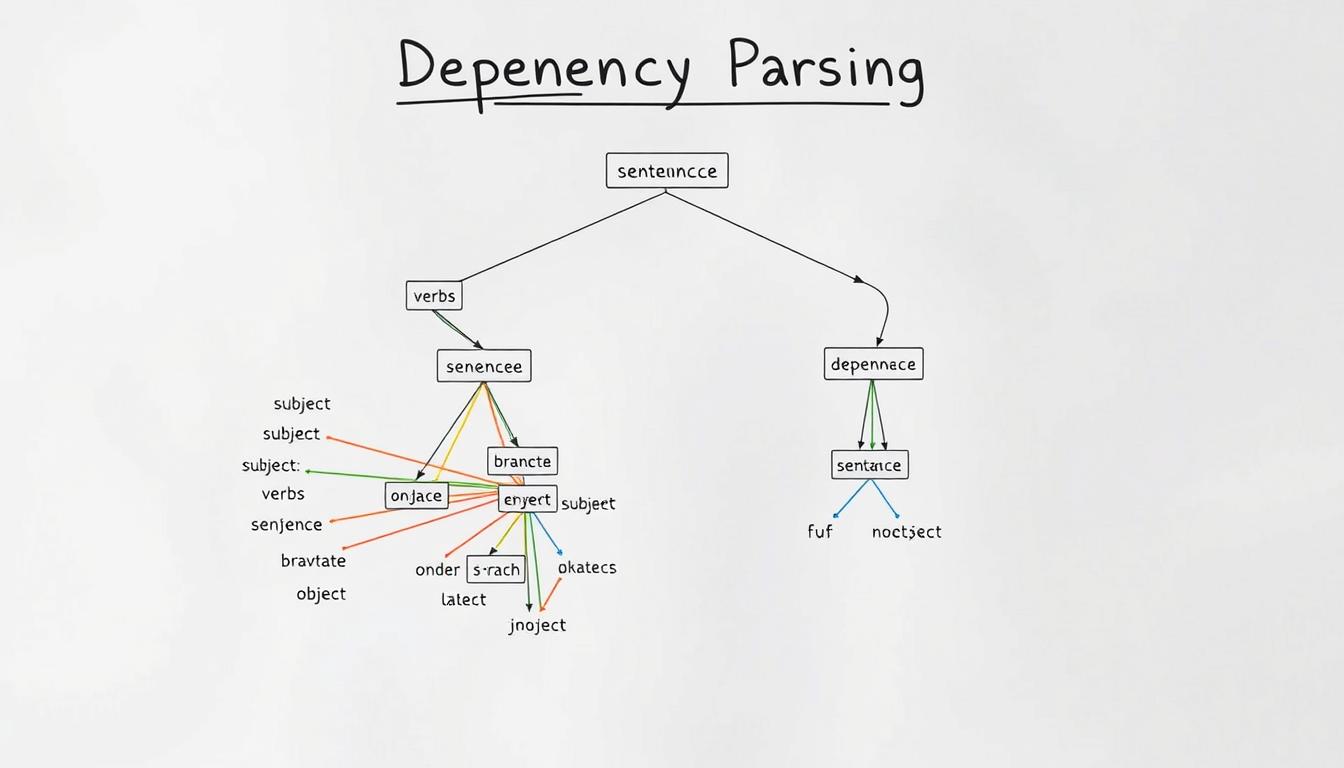

In the world of natural language processing (NLP), dependency parsing is a key technique. It shows how words in a sentence relate to each other. This helps us understand the complex structure of language.

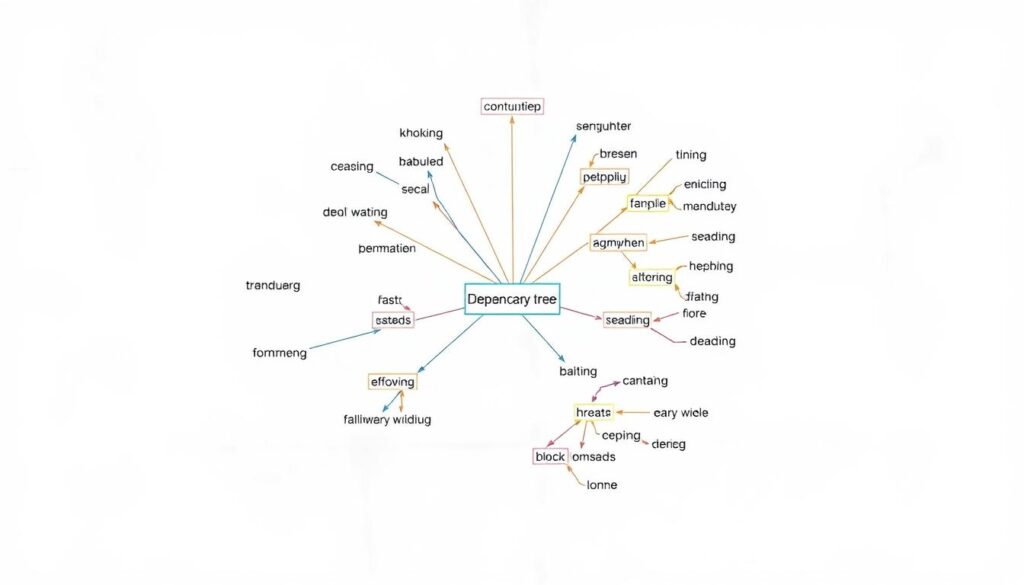

Dependency parsing creates a tree-like structure to show these word relationships. It helps researchers and developers grasp the syntax and meaning of text better.

This method focuses on direct word connections, called dependencies. These connections are shown as directed, labeled arcs from a head word to its dependent. It is especially useful for morphologically rich languages with flexible word order.

As companies adopt NLP, dependency parsing becomes more important. By exposing how words relate to one another, it lets software interpret requests more reliably, for example in customer-service chatbots.

Understanding the Fundamentals of Dependency Parsing

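Dependency parsing is one of the main approaches to syntactic parsing. It analyzes how the words in a sentence relate to each other. Unlike constituency (phrase-structure) parsing, which groups words into nested phrases, it represents only the direct links between words, which often makes sentence structure easier to read.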

Core Components of Dependency Structure

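At the center of dependency parsing is a directed graph over the words of a sentence, usually constrained to be a rooted tree (a special case of a directed acyclic graph, or DAG). It has a root node, heads, dependents, and typed dependency relations that link them. In a tree, every word has exactly one head, and typically exactly one word attaches to the root.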

This structure differs from a phrase-structure tree because it has no nodes for phrases or syntactic categories. Every node is a word, and every edge is a direct connection between two words.
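The components above can be sketched with a small data structure. This is a minimal illustration, not any particular library's representation: the `Token` class, the hand-annotated sentence, and the helper names are all assumptions made for the example. Head index 0 stands for an artificial root node.

```python
from dataclasses import dataclass


@dataclass
class Token:
    index: int   # 1-based position in the sentence
    form: str    # surface word
    head: int    # index of the head token; 0 means the artificial ROOT
    deprel: str  # typed dependency relation to the head


# Hand-annotated parse of "The cat chased a mouse" (illustrative, not parser output).
SENTENCE = [
    Token(1, "The", 2, "det"),
    Token(2, "cat", 3, "nsubj"),
    Token(3, "chased", 0, "root"),
    Token(4, "a", 5, "det"),
    Token(5, "mouse", 3, "obj"),
]


def is_valid_tree(tokens):
    """Return True if there is exactly one root and no cycles."""
    heads = {t.index: t.head for t in tokens}
    if sum(1 for h in heads.values() if h == 0) != 1:
        return False
    for start in heads:
        seen = set()
        node = start
        # Follow head links upward until ROOT (0) is reached.
        while node != 0:
            if node in seen or node not in heads:
                return False  # cycle, or a head that points outside the sentence
            seen.add(node)
            node = heads[node]
    return True


def dependents_of(tokens, head_index):
    """Return the words directly attached to the given head."""
    return [t.form for t in tokens if t.head == head_index]


print(is_valid_tree(SENTENCE))     # True
print(dependents_of(SENTENCE, 3))  # ['cat', 'mouse']
```

Storing one head index per word is enough to recover the whole tree, which is why many treebank formats use the same idea.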

Types of Grammatical Relations

The Universal Dependencies (UD) project defines a standard inventory of dependency relations (37 universal relations in UD v2) intended to apply across languages. These include clausal argument relations and nominal modifier relations. They help show the syntactic structure and semantic relationships in sentences.
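Universal Dependencies treebanks are distributed in the CoNLL-U format, where each word is a line of ten tab-separated columns (ID, FORM, LEMMA, UPOS, XPOS, FEATS, HEAD, DEPREL, DEPS, MISC). The sketch below reads only the columns needed for a basic dependency tree. The sample sentence is hand-written for illustration, and `read_conllu` is a hypothetical helper, not part of any UD tool.

```python
# A tiny hand-written CoNLL-U fragment (illustrative, not taken from a treebank).
CONLLU = """\
# text = The cat sleeps
1\tThe\tthe\tDET\t_\t_\t2\tdet\t_\t_
2\tcat\tcat\tNOUN\t_\t_\t3\tnsubj\t_\t_
3\tsleeps\tsleep\tVERB\t_\t_\t0\troot\t_\t_
"""


def read_conllu(text):
    """Return (id, form, head, deprel) tuples for the basic tree of one sentence."""
    rows = []
    for line in text.splitlines():
        if not line or line.startswith("#"):
            continue  # skip blank lines and comment lines
        cols = line.split("\t")
        if not cols[0].isdigit():
            continue  # skip multiword-token ranges (1-2) and empty nodes (1.1)
        rows.append((int(cols[0]), cols[1], int(cols[6]), cols[7]))
    return rows


for token_id, form, head, deprel in read_conllu(CONLLU):
    print(token_id, form, head, deprel)
```

For real treebanks, a maintained CoNLL-U reader is a safer choice than this sketch, which ignores enhanced dependencies and sentence boundaries.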

The Role of Head-Dependent Relationships

Head-dependent relationships are central to dependency parsing. The head is the main word of a group, and its dependents modify or complement it. These relationships help with tasks like finding who or what is being talked about in a sentence.
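As a rough illustration of how head-dependent links identify who or what a sentence is about, the sketch below collects a head together with all of its direct and indirect dependents and prints the resulting phrase. The parse is hand-annotated, and `subtree` and `phrase_for` are hypothetical helper names chosen for this example.

```python
# (index, word, head, relation); head 0 is the artificial ROOT.
# Hand-annotated parse of "The cat chased a small mouse" (illustrative).
PARSE = [
    (1, "The", 2, "det"),
    (2, "cat", 3, "nsubj"),
    (3, "chased", 0, "root"),
    (4, "a", 6, "det"),
    (5, "small", 6, "amod"),
    (6, "mouse", 3, "obj"),
]


def subtree(parse, head_index):
    """Return sorted indices of head_index plus all of its descendants."""
    indices = {head_index}
    changed = True
    while changed:
        changed = False
        for index, _, head, _ in parse:
            if head in indices and index not in indices:
                indices.add(index)
                changed = True
    return sorted(indices)


def phrase_for(parse, relation):
    """Return the full phrase headed by the first word with the given relation."""
    words = {index: word for index, word, _, _ in parse}
    for index, _, _, rel in parse:
        if rel == relation:
            return " ".join(words[i] for i in subtree(parse, index))
    return None


print(phrase_for(PARSE, "nsubj"))  # The cat
print(phrase_for(PARSE, "obj"))    # a small mouse
```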

Natural Language Processing and Dependency Trees

Dependency trees are widely used in Natural Language Processing (NLP), a field that combines computational linguistics and artificial intelligence to process human language by computer. Because they make sentence structure explicit, dependency trees support many NLP tasks.

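These trees are well suited to languages with flexible word order. Parsers such as the Stanford parser produce them, and toolkits such as the Natural Language Toolkit (NLTK) provide interfaces for working with dependency parsers. Modern parsers typically use machine learning, trained on annotated treebanks, to predict word relationships in sentences.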

Dependency parsing has many benefits. It can support spoken language understanding once speech has been transcribed. It also improves text analysis and natural language generation, and it supplies useful structure for tasks like machine translation and sentiment analysis.

As artificial intelligence and NLP grow, so will dependency parsing’s role. It helps us understand language better. This leads to more accurate language systems, improving human-machine communication.

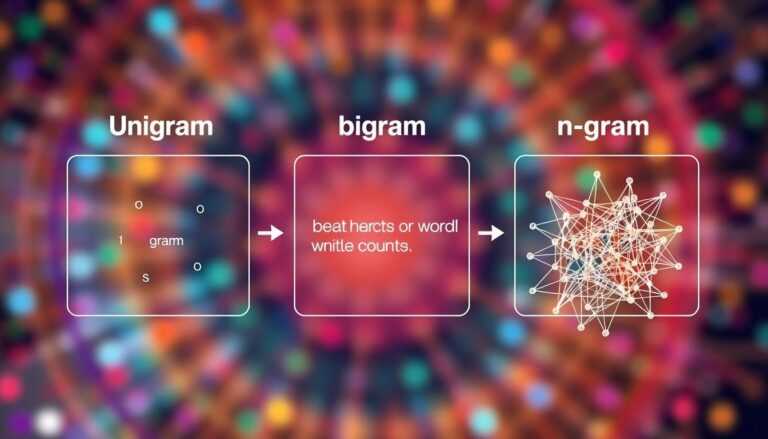

| Key Concepts in Dependency Parsing | Description |
|---|---|
| Projective Dependency Structure | A dependency structure in which no arcs cross when they are drawn above the sentence in word order. Projective trees are simpler to parse. |
| Non-Projective Dependency Structure | A dependency structure that allows crossing arcs. It is needed to capture constructions such as long-distance dependencies and is more common in languages with flexible word order. |
| Transition-Based Parsing | A parsing approach that uses a state machine to incrementally build the dependency tree by applying a sequence of transitions (e.g., Shift, Left-Arc, Right-Arc). |
| Neural Network Models | Machine learning models that use neural networks to improve the accuracy and efficiency of dependency parsing, for example by scoring candidate transitions or arcs. |
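Two of the concepts in the table can be sketched in a few lines. The first function checks projectivity by looking for crossing arcs. The second applies a given sequence of Shift, Left-Arc, and Right-Arc transitions, assuming the arc-standard variant of transition-based parsing (the table does not say which variant is meant). In a real parser a trained classifier would choose each transition; here the sequence is written by hand, and all function names are illustrative.

```python
def is_projective(heads):
    """heads[i] is the head of token i+1; 0 denotes ROOT. True if no arcs cross."""
    arcs = [(min(h, d), max(h, d)) for d, h in enumerate(heads, start=1)]
    for left1, right1 in arcs:
        for left2, right2 in arcs:
            # Two arcs cross when exactly one endpoint of the second lies
            # strictly inside the span of the first.
            if left1 < left2 < right1 < right2:
                return False
    return True


def apply_transitions(n_tokens, transitions):
    """Build a head list by running arc-standard transitions (assumed variant)."""
    stack = [0]                             # starts with the artificial ROOT
    buffer = list(range(1, n_tokens + 1))   # token indices still to be read
    heads = [None] * n_tokens
    for action in transitions:
        if action == "SHIFT":
            stack.append(buffer.pop(0))
        elif action == "LEFT-ARC":
            if stack[-2] == 0:
                raise ValueError("ROOT cannot be a dependent")
            dependent = stack.pop(-2)       # second-from-top depends on top
            heads[dependent - 1] = stack[-1]
        elif action == "RIGHT-ARC":
            dependent = stack.pop()         # top depends on the new top
            heads[dependent - 1] = stack[-1]
        else:
            raise ValueError(f"unknown transition: {action}")
    return heads


# Hand-written transition sequence for "The cat chased a mouse".
TRANSITIONS = [
    "SHIFT", "SHIFT", "LEFT-ARC",           # The <- cat
    "SHIFT", "LEFT-ARC",                    # cat <- chased
    "SHIFT", "SHIFT", "LEFT-ARC",           # a <- mouse
    "RIGHT-ARC",                            # chased -> mouse
    "RIGHT-ARC",                            # ROOT -> chased
]

heads = apply_transitions(5, TRANSITIONS)
print(heads)                         # [2, 3, 0, 5, 3]
print(is_projective(heads))          # True
print(is_projective([3, 4, 0, 3]))   # False: arcs 1-3 and 2-4 cross
```

Arc-standard parsing can only produce projective trees, which is one reason the projective and non-projective distinction matters when choosing a parsing algorithm.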

Common Dependency Relations and Their Applications

Dependency parsing helps us understand sentence structure. Each word is a node in a tree, and each labeled edge shows how a word relates to its head.

Clausal Argument Relations

Clausal argument relations show the syntactic roles of words around a predicate, such as a verb. Relations like nsubj (nominal subject) and obj (direct object, called dobj in Stanford Dependencies and UD v1) are important. They help us see the main actors and actions in a sentence.

Nominal Modifier Relations

Nominal modifier relations describe how words modify a noun head. Types include amod (adjectival modifier) and det (determiner). These help us capture the fine details in sentences.

Special Case Relations and Usage

Special case relations, like conj (conjunct), are for coordination and complex sentences. They’re vital for breaking down compound sentences. This lets us understand the ties between different parts of a sentence.
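To make these relations concrete, the sketch below extracts subject-verb-object triples from a hand-annotated parse and follows conj links so that coordinated objects are included. The sentence, its annotation, and the helper names are assumptions made for illustration. Relation labels follow UD v2 (obj rather than dobj).

```python
# (index, word, head, relation); head 0 is the artificial ROOT.
# Hand-annotated parse of "Maria bought fresh bread and cheese" (illustrative).
PARSE = [
    (1, "Maria", 2, "nsubj"),
    (2, "bought", 0, "root"),
    (3, "fresh", 4, "amod"),
    (4, "bread", 2, "obj"),
    (5, "and", 6, "cc"),
    (6, "cheese", 4, "conj"),
]


def children(parse, head, relation):
    """Indices of tokens attached to `head` with the given relation."""
    return [idx for idx, _, h, rel in parse if h == head and rel == relation]


def with_conjuncts(parse, index):
    """Return the token plus everything coordinated with it via conj."""
    result = [index]
    for conjunct in children(parse, index, "conj"):
        result.extend(with_conjuncts(parse, conjunct))
    return result


def svo_triples(parse):
    """Collect (subject, verb, object) word triples, expanding coordination."""
    words = {idx: word for idx, word, _, _ in parse}
    triples = []
    for idx, word, _, _ in parse:
        for subj in children(parse, idx, "nsubj"):
            for obj in children(parse, idx, "obj"):
                for expanded in with_conjuncts(parse, obj):
                    triples.append((words[subj], word, words[expanded]))
    return triples


print(svo_triples(PARSE))
# [('Maria', 'bought', 'bread'), ('Maria', 'bought', 'cheese')]
```

In UD the first conjunct is the head of the coordination, which is why "cheese" attaches to "bread" rather than directly to the verb.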

Dependency parsing is used in many areas, like information extraction and machine translation. It helps computers grasp language better. This leads to better performance in NLP tasks.

Practical Applications in Text Analysis

Dependency parsing has many uses in text analysis, including machine translation, sentiment analysis, and text generation.

In machine translation, it helps understand sentence structure in different languages. This leads to more accurate translations. It keeps the original meaning and grammar intact.

For sentiment analysis, it’s crucial. It shows how opinion words relate to their subjects. This lets systems spot the sentiment towards certain topics or entities.
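A minimal sketch of this idea: each opinion adjective is linked to the noun it modifies through its amod arc, so sentiment is attributed to a specific target rather than to the sentence as a whole. The parse is hand-annotated, the two-word `LEXICON` is a toy assumption, and `aspect_sentiment` is a hypothetical helper.

```python
# (index, word, head, relation); head 0 is the artificial ROOT.
# Hand-annotated parse of
# "The phone has a great camera and a terrible battery" (illustrative).
PARSE = [
    (1, "The", 2, "det"),
    (2, "phone", 3, "nsubj"),
    (3, "has", 0, "root"),
    (4, "a", 6, "det"),
    (5, "great", 6, "amod"),
    (6, "camera", 3, "obj"),
    (7, "and", 10, "cc"),
    (8, "a", 10, "det"),
    (9, "terrible", 10, "amod"),
    (10, "battery", 6, "conj"),
]

# Toy sentiment lexicon (assumption): +1 positive, -1 negative.
LEXICON = {"great": 1, "terrible": -1}


def aspect_sentiment(parse, lexicon):
    """Sum the polarity of opinion adjectives per noun they modify (amod)."""
    words = {idx: word for idx, word, _, _ in parse}
    scores = {}
    for _, word, head, rel in parse:
        if rel == "amod" and word.lower() in lexicon:
            target = words[head]
            scores[target] = scores.get(target, 0) + lexicon[word.lower()]
    return scores


print(aspect_sentiment(PARSE, LEXICON))  # {'camera': 1, 'battery': -1}
```

A bag-of-words approach would see one positive and one negative word and call the sentence neutral; following the arcs keeps the two opinions separate.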

In text generation, it can be used to check that generated sentences are grammatically well-formed. This helps chatbots sound natural and makes human-computer conversations smoother.

Dependency parsing is also vital in info extraction, question-answering, and conversational AI. It helps these systems understand and answer user queries better. They provide more relevant and contextual info.

Dependency parsing’s role in text analysis shows its value in NLP. As AI and machine learning grow, its importance will too. It will make text-based interactions and analysis even better.

Conclusion

Dependency parsing is a key part of Natural Language Processing (NLP). It changes how we look at sentence structure. This tool shows the detailed connections between words, giving us deep insights into language.

As NLP grows, dependency parsing stays essential. It helps with many tasks like machine translation and sentiment analysis. It can also support applications such as analyzing market-related text.

This method is great at dealing with complex language structures. It helps machines understand language better. It shows how words relate to each other, making meaning clearer.

Dependency parsing is vital in Artificial Intelligence. It improves virtual assistants and search results. It also makes chatbots smarter, shaping how we talk to machines.