How Does Feature Selection Impact Model Performance in Machine Learning?

Feature selection is a core step in machine learning and artificial intelligence. It identifies and keeps the most relevant variables in a dataset, which can make a model more accurate, easier to interpret, and cheaper to train.

Choosing the right features reduces the dimensionality of the data, can improve accuracy, and makes the model more robust. It also matters for ethical AI in healthcare and finance, where it helps keep the focus on relevant data and exclude biased or sensitive attributes.

Feature selection also saves time and resources. A model with fewer inputs trains and predicts faster, and it is often more effective as well.

Removing irrelevant features also reduces the risk of overfitting, where a model fits noise in the training data and fails to generalize to new data. Overfitting is a common problem in data mining, so feature selection is an important step toward models that are accurate and reliable enough to help businesses and society.

Understanding Feature Selection Fundamentals

In machine learning, feature selection is key. It finds the most important variables for models. This process makes models better and easier to understand.

It’s important for both supervised and unsupervised learning. It affects how models are made and used.

Defining Feature Selection in Data Science

Feature selection picks the most important variables from a dataset. It makes data easier to work with and improves model performance. This helps avoid overfitting and boosts accuracy.

The Role of Features in Model Development

Features are the heart of machine learning models. They are the measurable characteristics of the data that a model uses to make predictions. Choosing the right features is vital for good model performance.

Key Objectives of Feature Selection

- Reducing Overfitting: It removes unnecessary features to prevent overfitting.

- Improving Accuracy: It focuses on the most useful features for better predictions.

- Speeding up Training and Inference: Fewer features mean faster model training and use.

- Facilitating Better Understanding: It reveals how input features relate to the target variable.

Feature selection is vital in machine learning. It greatly affects model performance, efficiency, and understanding. Data scientists use it to create better models.

Benefits of Feature Selection in Machine Learning

Feature selection brings many benefits to machine learning. It helps pick the most important features, making models better, less prone to overfitting, and easier to understand. This method also saves computer resources and helps deal with the curse of dimensionality.

One key advantage is better model accuracy. With irrelevant features removed, the model can focus on the variables that carry real signal. This matters most in high-dimensional settings, such as unsupervised learning and natural language processing tasks, where datasets often contain many variables.

Feature selection also helps avoid overfitting in datasets with many features. Keeping only the most useful features makes the model simpler, and a simpler model tends to generalize better to new data.

Another big plus is clearer model explanations. By finding key features, we understand data better. This is crucial for building trust and sharing results effectively.

Feature selection also makes models faster. With fewer variables, training and using the model takes less time. This is great for projects with tight deadlines or limited resources.

In short, feature selection greatly improves machine learning models. It boosts performance, efficiency, and clarity. By choosing the right features, we gain insights, make better decisions, and advance our fields.

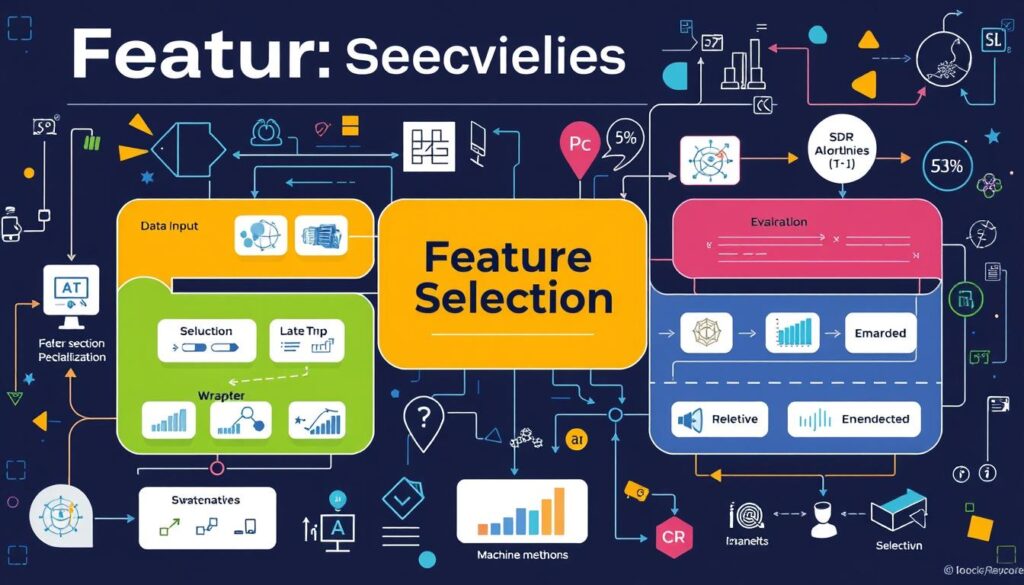

Types of Feature Selection Methods

In machine learning, feature selection is key to better model performance and efficiency. There are three main types: filter, wrapper, and embedded methods. Each has its own strengths and fits different needs, based on the dataset size and the task at hand.

Filter Methods and Statistical Approaches

Filter methods score features with statistics such as correlation, variance, and mutual information. They run independently of any model, which makes them fast and well suited to large datasets. However, because they do not consult the model, they can miss important interactions between features.
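As a minimal sketch of a filter approach, the example below applies a variance filter and then a univariate ANOVA F-test with scikit-learn. The synthetic dataset, the appended constant column, and the choice of `k=5` are assumptions made for illustration only.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.feature_selection import SelectKBest, VarianceThreshold, f_classif

# Synthetic data (illustrative assumption): 20 features, 5 of them informative.
X, y = make_classification(
    n_samples=500, n_features=20, n_informative=5, n_redundant=0, random_state=0
)
# Append a constant column to show what a variance filter removes.
X = np.hstack([X, np.ones((X.shape[0], 1))])

# Step 1: drop features whose variance is zero (the constant column).
variance_filter = VarianceThreshold(threshold=0.0)
X_var = variance_filter.fit_transform(X)

# Step 2: score each remaining feature against the target with an ANOVA
# F-test and keep the 5 highest-scoring ones. No model is trained here.
selector = SelectKBest(score_func=f_classif, k=5)
X_selected = selector.fit_transform(X_var, y)

print("original shape:      ", X.shape)
print("after variance filter:", X_var.shape)
print("after SelectKBest:    ", X_selected.shape)
print("kept column indices:  ", selector.get_support(indices=True))
```

Each feature is scored on its own, so two features that are only useful in combination could both be discarded by this kind of filter.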

Wrapper Methods for Feature Optimization

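Wrapper methods evaluate subsets of features by training the model on them and measuring its performance. They search over combinations, adding or removing features iteratively, to find the best subset. This captures how features work together, but it takes much longer to run.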
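One way to sketch a wrapper method is forward selection with scikit-learn's `SequentialFeatureSelector`. The synthetic data, the logistic regression estimator, and the target of 3 features are illustrative assumptions, not recommendations.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import SequentialFeatureSelector
from sklearn.linear_model import LogisticRegression

# Small synthetic dataset (illustrative assumption) to keep the search cheap.
X, y = make_classification(
    n_samples=300, n_features=10, n_informative=3, n_redundant=0, random_state=0
)

# Forward selection: start with no features and repeatedly add the one
# that most improves the cross-validated score of the estimator.
estimator = LogisticRegression(max_iter=1000)
sfs = SequentialFeatureSelector(
    estimator, n_features_to_select=3, direction="forward", cv=5
)
sfs.fit(X, y)

X_selected = sfs.transform(X)
print("kept column indices:", sfs.get_support(indices=True))
print("reduced shape:", X_selected.shape)
```

The cost is visible in the search itself: every candidate feature at every step requires a full round of cross-validated model fits, which is why wrapper methods scale poorly to wide datasets.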

Embedded Methods in Model Training

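Embedded methods pick features while training the model. The standard example is Lasso regression, whose L1 penalty drives the coefficients of unhelpful features to exactly zero. Ridge regression is often mentioned alongside it, but its L2 penalty only shrinks coefficients without eliminating them, so it does not select features on its own. Embedded methods are quicker than wrapper methods and help explain the model.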
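The sketch below illustrates embedded selection with an L1 penalty and, for comparison, fits an L2-penalized Ridge model on the same data. The synthetic regression data and `alpha=1.0` are assumptions chosen for illustration. In practice the penalty strength would be tuned.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.feature_selection import SelectFromModel
from sklearn.linear_model import Lasso, Ridge

# Synthetic regression data (illustrative assumption): 5 of 20 features matter.
X, y = make_regression(
    n_samples=200, n_features=20, n_informative=5, noise=10.0, random_state=0
)

# Lasso (L1 penalty) can set coefficients to exactly zero during training.
selector = SelectFromModel(Lasso(alpha=1.0))
selector.fit(X, y)
X_selected = selector.transform(X)
n_lasso = int(np.count_nonzero(selector.estimator_.coef_))

# Ridge (L2 penalty) shrinks coefficients but leaves them nonzero.
ridge = Ridge(alpha=1.0).fit(X, y)
n_ridge = int(np.count_nonzero(ridge.coef_))

print("nonzero Lasso coefficients:", n_lasso)
print("nonzero Ridge coefficients:", n_ridge)
print("shape after Lasso-based selection:", X_selected.shape)
```

Selection happens as a side effect of a single model fit, which is why this approach is cheaper than a wrapper search.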

Choosing the right method depends on the dataset and the task. Knowing each method’s strengths and weaknesses helps data scientists improve models. This tackles issues like too many features and overfitting.

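| Feature Selection Method | Approach | Strengths | Weaknesses |
|---|---|---|---|
| Filter Methods | Use statistical criteria (correlation, variance, mutual information) to evaluate features independently of the model. | Fast; scale well to large datasets. | Can miss interactions between features. |
| Wrapper Methods | Evaluate feature subsets based on model performance, iteratively adding or removing features. | Account for how features work together in the model. | Slow and computationally expensive. |
| Embedded Methods | Integrate feature selection with model training, using techniques like Lasso (L1-regularized) regression. | Quicker than wrapper methods; help explain the model. | The selection is tied to the model being trained. |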

Impact on Model Performance and Efficiency

Feature selection is key to making machine learning models better and faster. It picks the most important features, saving time and resources. This means the model can learn and make decisions quicker and more accurately.

Computational Resource Optimization

Reducing the number of features lowers the memory and computation a model needs. This is especially important in data mining and artificial intelligence workloads that involve large datasets. Models train faster and return predictions sooner.

Model Accuracy and Precision Improvements

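Removing irrelevant features can make models more accurate, because real patterns are easier to detect when there is less noise. Accuracy gains of this kind matter in practice. For example, machine learning has reportedly boosted supply chain forecasting by up to 50% and cut lost sales by up to 65%, although these figures describe machine learning applications as a whole rather than feature selection alone.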

Reducing Overfitting Through Feature Selection

Feature selection also fights overfitting, a big problem in machine learning. It removes features that mostly add noise, so the model works better with new data. Models that generalize well are what make applications such as predictive maintenance pay off. Accenture reports that predictive maintenance cuts costs by up to 30% and boosts production by up to 25%, though those numbers are not specific to feature selection.
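To see the effect on generalization, one option is to compare cross-validated scores with and without a selection step. The sketch below does this on synthetic data where most features are pure noise. The dataset, the k-nearest-neighbors classifier, and `k=5` are illustrative assumptions, and the outcome on real data may differ.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline

# Synthetic data (illustrative assumption): 100 features, only 5 informative.
X, y = make_classification(
    n_samples=200, n_features=100, n_informative=5, n_redundant=0, random_state=0
)

# Baseline: the classifier sees all 100 features.
baseline = KNeighborsClassifier()

# With selection: the selector is fitted inside each training fold,
# so the held-out fold never influences which features are kept.
with_selection = make_pipeline(SelectKBest(f_classif, k=5), KNeighborsClassifier())

score_all = cross_val_score(baseline, X, y, cv=5).mean()
score_selected = cross_val_score(with_selection, X, y, cv=5).mean()

print(f"mean CV accuracy, all features:      {score_all:.3f}")
print(f"mean CV accuracy, 5 selected features: {score_selected:.3f}")
```

On data like this the reduced model often scores higher, but that is not guaranteed, so the comparison is worth running rather than assuming.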

Feature selection really makes a difference in how well models perform. It improves accuracy, cuts down training time, and makes models more efficient. It’s a crucial step in creating effective machine learning solutions.

Implementing Feature Selection Techniques

In machine learning, including work with neural networks, picking the right features is key. In practice this step is usually done with libraries such as scikit-learn in Python, which provide tools for finding the most important features in a dataset.

There are several ways to choose features:

- Univariate Selection (e.g., SelectKBest), which looks at each feature’s link to the target variable.

- Correlation Matrix Analysis, which measures how strongly features are correlated with each other and with the target, so that redundant, highly correlated features can be dropped.

- Recursive Feature Elimination (RFE), which removes the least important features one by one.

- Lasso Regression, which uses L1 regularization to shrink the coefficients of unimportant features to zero, keeping only the most important ones.

- Feature Importance from Tree-based Models, which uses algorithms like Random Forests or Gradient Boosting to find important features.
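Three of the techniques listed above can be sketched together with pandas and scikit-learn: correlation matrix analysis, RFE, and tree-based feature importance. The breast cancer dataset bundled with scikit-learn, the 0.9 correlation cutoff, and the choice of 5 features are assumptions made for illustration.

```python
import numpy as np
import pandas as pd
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import RFE
from sklearn.linear_model import LogisticRegression
from sklearn.preprocessing import StandardScaler

data = load_breast_cancer(as_frame=True)
X, y = data.data, data.target

# Correlation matrix analysis: for every pair of features with an absolute
# correlation above 0.9, drop the one that appears later in the table.
corr = X.corr().abs()
upper = corr.where(np.triu(np.ones(corr.shape, dtype=bool), k=1))
to_drop = [col for col in upper.columns if (upper[col] > 0.9).any()]
X_reduced = X.drop(columns=to_drop)

# Recursive Feature Elimination: repeatedly fit the model and discard the
# feature with the smallest coefficient until 5 remain.
# (Scaling on the full data is a simplification for this demo.)
X_scaled = StandardScaler().fit_transform(X_reduced)
rfe = RFE(LogisticRegression(max_iter=1000), n_features_to_select=5)
rfe.fit(X_scaled, y)
rfe_features = list(X_reduced.columns[rfe.support_])

# Tree-based importance: rank features by a random forest's importances.
forest = RandomForestClassifier(n_estimators=200, random_state=0)
forest.fit(X_reduced, y)
importances = pd.Series(forest.feature_importances_, index=X_reduced.columns)
top_forest = importances.sort_values(ascending=False).head(5)

print("dropped as redundant:", to_drop)
print("kept by RFE:", rfe_features)
print("top forest importances:")
print(top_forest)
```

The methods will not necessarily agree on which features matter, which is one reason to combine them rather than rely on a single ranking.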

The right method depends on the problem, the data, and how much computing power you have. Usually, a mix of methods and cross-validation is used. This makes sure the chosen features are reliable and easy to understand.
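Combining selection with cross-validation can be sketched by placing the selector inside a scikit-learn `Pipeline` and letting a grid search choose how many features to keep. The dataset, the candidate values of `k`, and the logistic regression model are illustrative assumptions.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)

# Scaling, selection, and the classifier are refitted on each training fold,
# so the validation fold never leaks into the choice of features.
pipe = Pipeline([
    ("scale", StandardScaler()),
    ("select", SelectKBest(score_func=f_classif)),
    ("clf", LogisticRegression(max_iter=1000)),
])

# Treat the number of kept features as a hyperparameter.
param_grid = {"select__k": [5, 10, 20, "all"]}
search = GridSearchCV(pipe, param_grid, cv=5)
search.fit(X, y)

print("best number of features:", search.best_params_["select__k"])
print(f"best mean CV accuracy: {search.best_score_:.3f}")
```

Including `"all"` in the grid keeps the comparison honest, since it allows the search to conclude that no selection is the better choice for this model.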

It's also important to check that the selected features make sense in the real-world context of the problem. This ensures the model works well and is useful in practice.

Conclusion

Feature selection is key in machine learning. It greatly affects how well models work, how fast they are, and how easy they are to understand. By picking the right features, data scientists can make their models better, quicker, and more reliable.

The right method for selecting features depends on the project’s needs, the data, and the computer power available. As machine learning grows, so does the need for good feature selection. It helps make AI systems better, fairer, and easier to understand.

There are many ways to choose features, including filter methods, wrapper methods, and embedded methods. These help data scientists improve their models by reducing computational cost, increasing accuracy, and avoiding overfitting.

In the future, using feature selection will be even more important. It will help make machine learning work well, even when resources are limited. Data scientists who keep up with new feature selection techniques can make AI do amazing things. They can create solutions that help people and society.