What Are Hyperparameters, and How Do You Optimize Them in Machine Learning?

In Machine Learning and Artificial Intelligence, hyperparameters are key. They decide how well predictive models work. These settings are chosen before training starts and shape the model’s behavior.

Hyperparameters cover many settings, such as how many nodes a neural network has, the learning rate, and controls on model complexity. Finding the right hyperparameters is crucial for top model performance.

Optimizing hyperparameters means trying different settings. This can be done manually or with automated tools. Methods like Bayesian optimization and grid search help find the best settings for a task.

As Machine Learning grows, so does the need for good hyperparameter tuning. By getting better at tuning, experts can make their models more powerful. This helps solve tough problems and leads to big advances in Artificial Intelligence and Predictive Analytics.

Understanding the Fundamentals of Hyperparameters in Machine Learning

In machine learning, hyperparameters are key to a model’s success. They are not learned from data like parameters are. Instead, they are set before training and guide the learning process.

Difference Between Parameters and Hyperparameters

Parameters are the model’s internal variables that change during training. They help reduce the model’s error on training data. Examples include coefficients in certain models and the weights and biases in neural networks.

Hyperparameters, however, are set before training starts. They control how the model learns and behaves.
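The distinction can be sketched with a tiny linear model trained by gradient descent. This is a minimal illustration, not a reference implementation: the function name `fit_line`, the toy data, and the default values are assumptions chosen for the example. The arguments `learning_rate` and `n_epochs` are hyperparameters fixed before training, while `w` and `b` are parameters learned from the data.

```python
def fit_line(xs, ys, learning_rate=0.05, n_epochs=2000):
    """Fit y = w * x + b by gradient descent on mean squared error.

    learning_rate and n_epochs are hyperparameters: chosen up front.
    w and b are parameters: updated from the data during training.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(n_epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2.0 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2.0 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Toy data generated from y = 2x + 1 (illustrative only).
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
w, b = fit_line(xs, ys)
print(f"learned parameters: w={w:.4f}, b={b:.4f}")
```

Changing `learning_rate` or `n_epochs` changes how training proceeds, but neither value is ever updated by the training loop itself.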

Key Characteristics of Hyperparameters

- Hyperparameters shape the model’s structure, like the number of hidden layers in neural networks or the size of convolutional layers’ kernels.

- They also influence the learning process, including the learning rate and the choice of cost or loss function.

- Hyperparameters can affect the model’s effective complexity. For example, the dropout rate in neural networks helps prevent overfitting, while a rate set too high can cause underfitting.

Impact on Model Performance

Choosing the right hyperparameters is crucial for machine learning success. They greatly impact how well a model performs. Metrics like Mean Squared Error (MSE) for regression and Accuracy, Precision, Recall, and F1-score for classification are affected.

Good hyperparameter tuning is essential for data science success. In fact, 85% of successful projects attribute their success to understanding model parameters and their effects.

Common Types of Hyperparameters and Their Functions

In machine learning, hyperparameters are key. They shape how models work and perform. These values are set before training and greatly affect learning and generalization. Let’s look at some common hyperparameters and what they do.

Learning Rate is vital for many models, especially those trained with gradient descent. It controls the step size at each iteration. The Number of Epochs decides how many times the algorithm passes through the training data: too few can cause underfitting, too many can cause overfitting. Batch Size is how many examples are used in one iteration. It impacts learning speed and stability.
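To make the effect of the learning rate concrete, here is a small sketch that runs gradient descent on the toy function f(x) = x², whose gradient is 2x. The function name `gradient_descent`, the starting point, and the three learning rates are assumptions picked for illustration.

```python
def gradient_descent(learning_rate, n_steps=50, start=5.0):
    """Minimize f(x) = x**2 with plain gradient descent."""
    x = start
    for _ in range(n_steps):
        x -= learning_rate * 2.0 * x  # gradient of x**2 is 2x
    return x


# Same problem, same number of steps, three different step sizes.
final_x = {lr: gradient_descent(lr) for lr in (0.01, 0.4, 1.1)}
for lr, x in final_x.items():
    print(f"learning_rate={lr}: final x = {x:.3e}")
```

In this toy setting, the smallest rate makes slow progress toward the minimum at 0, the middle rate converges quickly, and the largest rate overshoots on every step so the iterate grows instead of shrinking.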

In neural networks, Number of Hidden Layers and Units are crucial. They define the model’s complexity. Regularization Parameters prevent overfitting by adding a penalty term. The strength of this penalty is controlled by a parameter. Momentum helps by considering past gradients in weight updates, often in stochastic gradient descent.
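The roles of momentum and a regularization penalty can be sketched on a one-dimensional problem. The example below is an assumption-laden toy: it minimizes (w - 3)² plus an L2 penalty `l2_strength * w**2`, using the classical momentum update in which a velocity term accumulates past gradients. The function name and default values are hypothetical.

```python
def minimize(l2_strength, learning_rate=0.05, momentum=0.9, n_steps=500):
    """Minimize (w - 3)**2 + l2_strength * w**2 with momentum."""
    w, velocity = 0.0, 0.0
    for _ in range(n_steps):
        # Gradient of the data term plus gradient of the L2 penalty.
        grad = 2.0 * (w - 3.0) + 2.0 * l2_strength * w
        # Velocity blends the previous update with the new gradient step.
        velocity = momentum * velocity - learning_rate * grad
        w += velocity
    return w


w_plain = minimize(l2_strength=0.0)
w_regularized = minimize(l2_strength=0.5)
print(f"no penalty: w = {w_plain:.4f}")
print(f"with L2 penalty: w = {w_regularized:.4f}")
```

For this objective the minimizer is 3 / (1 + l2_strength), so a stronger penalty pulls the weight closer to zero, which is the mechanism by which regularization limits model complexity.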

Other important hyperparameters include the Activation Function in neural networks, which determines a neuron’s output from its inputs. Grid Search, Random Search, and Bayesian Optimization, by contrast, are not hyperparameters themselves but techniques for choosing them. Each has its own benefits and uses.

Getting these hyperparameters right can greatly improve Computer Vision, Data Mining, and Unsupervised Learning models. It ensures they work well and can find important insights in complex data.

Essential Hyperparameter Optimization Techniques

In the quest to build high-performing machine learning and deep learning models, hyperparameter optimization is key. Hyperparameters control a model’s behavior. Finding the right values can greatly improve performance.

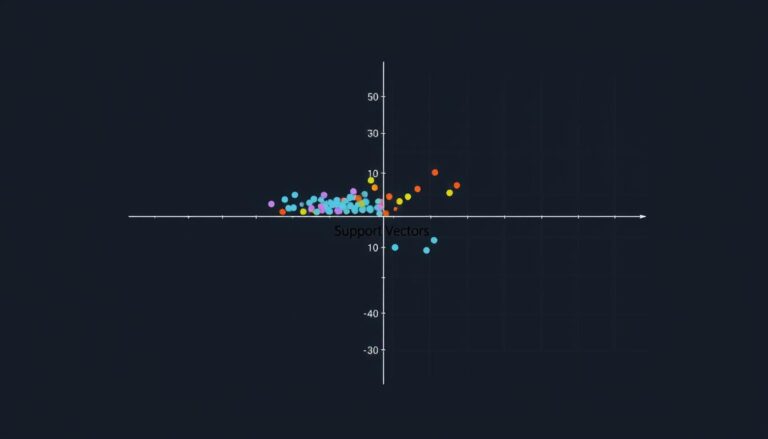

Grid Search Method

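The Grid Search method is a common technique. It systematically evaluates every combination from a predefined set of values for each hyperparameter. This method is thorough but can be slow, because the number of combinations grows multiplicatively with each added hyperparameter.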
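A grid search can be sketched in a few lines with the standard library. The function `validation_loss` below is a hypothetical stand-in for "train a model with these settings and score it on validation data"; its formula and the grid values are assumptions made only so the example runs on its own.

```python
import itertools
import math


def validation_loss(learning_rate, batch_size):
    # Hypothetical objective standing in for real training and validation.
    return (math.log10(learning_rate) + 2.0) ** 2 + ((batch_size - 64) / 64) ** 2


def grid_search(grid):
    """Evaluate every combination in the grid and keep the best one."""
    names = list(grid)
    best_config, best_loss, n_evaluated = None, float("inf"), 0
    for values in itertools.product(*(grid[name] for name in names)):
        config = dict(zip(names, values))
        loss = validation_loss(**config)
        n_evaluated += 1
        if loss < best_loss:
            best_config, best_loss = config, loss
    return best_config, best_loss, n_evaluated


grid = {"learning_rate": [0.001, 0.01, 0.1], "batch_size": [16, 64, 256]}
best_config, best_loss, n_evaluated = grid_search(grid)
print(best_config, n_evaluated)
```

With three values for each of two hyperparameters, the search evaluates 3 × 3 = 9 configurations; adding a third hyperparameter with three values would raise that to 27.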

Random Search Optimization

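Random Search is a faster alternative. It samples hyperparameter combinations at random from specified ranges or distributions, within a fixed budget of trials. It often finds good models faster than Grid Search, particularly when only a few hyperparameters strongly affect performance.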
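Random search replaces the exhaustive loop with a fixed number of sampled trials. In this sketch, `validation_loss` is again a hypothetical stand-in for real training, and the sampling ranges (a log-uniform learning rate between 1e-4 and 1, a small set of batch sizes) are assumptions for illustration.

```python
import math
import random


def validation_loss(learning_rate, batch_size):
    # Hypothetical objective standing in for real training and validation.
    return (math.log10(learning_rate) + 2.0) ** 2 + ((batch_size - 64) / 64) ** 2


def random_search(n_trials=50, seed=0):
    """Sample configurations at random and return the best plus all trials."""
    rng = random.Random(seed)  # seeded so the run is repeatable
    trials = []
    for _ in range(n_trials):
        config = {
            # Log-uniform sampling: each order of magnitude is equally likely.
            "learning_rate": 10 ** rng.uniform(-4, 0),
            "batch_size": rng.choice([16, 32, 64, 128, 256]),
        }
        trials.append((validation_loss(**config), config))
    best = min(trials, key=lambda trial: trial[0])
    return best, trials


(best_loss, best_config), trials = random_search()
print(f"best loss {best_loss:.4f} with {best_config}")
```

The trial budget is set directly by `n_trials` rather than by the size of a grid, which is why the cost stays predictable as more hyperparameters are added.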

Bayesian Optimization Approach

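Bayesian Optimization is a more advanced method. It builds a probabilistic surrogate model of the objective from the trials run so far and uses it to choose the most promising hyperparameters to test next. This is great for complex problems where each evaluation is expensive.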
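The idea can be sketched with a very small Gaussian-process surrogate over a single hyperparameter. Everything here is an illustrative assumption rather than a production recipe: the objective `validation_loss`, the RBF kernel length scale, the jitter term, the lower-confidence-bound acquisition rule with `kappa=2.0`, and the candidate grid on [0, 1]. Real projects would normally rely on a dedicated library instead.

```python
import math


def validation_loss(x):
    # Hypothetical expensive objective; x is a normalized hyperparameter.
    return (x - 0.3) ** 2


def rbf(a, b, length=0.15):
    return math.exp(-0.5 * ((a - b) / length) ** 2)


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x


def gp_posterior(xs, zs, x_new, jitter=1e-4):
    """Posterior mean and standard deviation of a zero-mean GP at x_new."""
    K = [[rbf(a, b) + (jitter if i == j else 0.0) for j, b in enumerate(xs)]
         for i, a in enumerate(xs)]
    k_star = [rbf(a, x_new) for a in xs]
    mean = sum(k * a for k, a in zip(k_star, solve(K, zs)))
    var = 1.0 - sum(k * v for k, v in zip(k_star, solve(K, k_star)))
    return mean, math.sqrt(max(var, 0.0))


def bayes_opt(objective, candidates, init_points, n_iter=12, kappa=2.0):
    xs = list(init_points)
    ys = [objective(x) for x in xs]
    for _ in range(n_iter):
        # Standardize observed losses so they match the unit-variance prior.
        mean_y = sum(ys) / len(ys)
        std_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys) / len(ys)) or 1.0
        zs = [(y - mean_y) / std_y for y in ys]
        best_x, best_score = None, float("inf")
        for x in candidates:
            if x in xs:
                continue  # never re-evaluate a point already tried
            mu, sigma = gp_posterior(xs, zs, x)
            score = mu - kappa * sigma  # lower confidence bound (minimizing)
            if score < best_score:
                best_x, best_score = x, score
        xs.append(best_x)
        ys.append(objective(best_x))
    i = min(range(len(ys)), key=ys.__getitem__)
    return xs[i], ys[i], xs


candidates = [i / 50 for i in range(51)]
best_x, best_loss, evaluated = bayes_opt(validation_loss, candidates, [0.0, 0.5, 1.0])
print(f"best x = {best_x:.2f}, loss = {best_loss:.5f}, evaluations = {len(evaluated)}")
```

Each round fits the surrogate to all results so far and then spends the next evaluation where the predicted loss is low or the uncertainty is high, which is why the method suits objectives that are costly to evaluate.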

Using these techniques, along with a deep understanding of the problem, can unlock a model’s full potential. This leads to top-notch performance.

Advanced Machine Learning Optimization Strategies

Machine learning is growing fast, and new ways to make models better are being found. These new methods help with complex tasks like neural networks, natural language processing, and computer vision. They offer fresh solutions to old problems.

Hyperband is one such strategy. It uses smart resource management and early-stopping to find the best settings quickly. This method balances exploring new options and focusing on the best ones.
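The early-stopping idea behind Hyperband can be illustrated with successive halving, the routine Hyperband runs repeatedly with different starting budgets. The sketch below shows only that single routine, not full Hyperband. The function `simulated_loss`, its formula, and the candidate values are assumptions standing in for real partial training runs.

```python
def simulated_loss(config, budget):
    # Hypothetical learning curve: loss improves as the budget (epochs) grows
    # and levels off at a value determined by the hyperparameter.
    return (config["log10_lr"] + 2.0) ** 2 + 1.0 / budget


def successive_halving(configs, min_budget=1, eta=3):
    """Train all configs briefly, keep the best 1/eta, and repeat with more budget."""
    survivors = list(configs)
    budget = min_budget
    total_budget = 0
    while True:
        ranked = sorted(survivors, key=lambda c: simulated_loss(c, budget))
        total_budget += budget * len(survivors)
        if len(survivors) == 1:
            break
        survivors = ranked[: max(1, len(survivors) // eta)]
        budget *= eta  # survivors earn a larger training budget
    return survivors[0], total_budget


configs = [{"log10_lr": -4.0 + 0.5 * i} for i in range(9)]
best, total_budget = successive_halving(configs)
print(best, total_budget)
```

With nine candidates and `eta=3`, the rounds evaluate 9, 3, and 1 configurations at budgets of 1, 3, and 9, for 27 budget units in total, compared with 81 if every candidate were trained at the full budget of 9.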

Population-based Training (PBT) is another advanced technique. It combines parallel and sequential optimization. This allows hyperparameters to change during training, helping the model get better and adapt to data.
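A toy version of the PBT loop is sketched below under heavy simplifying assumptions: each worker holds a single weight `w` trained on the loss w², the only hyperparameter is a learning rate, the worst worker copies the best after every round, and the copied learning rate is perturbed by a factor of 0.8 or 1.2. The cap of 0.45 on the learning rate is an assumption added to keep this toy problem stable.

```python
import random


def pbt(n_rounds=5, steps_per_round=10, seed=0):
    """Toy Population-based Training on the loss w**2."""
    rng = random.Random(seed)
    population = [{"w": 5.0, "lr": lr} for lr in (0.001, 0.01, 0.1, 0.3)]
    for _round in range(n_rounds):
        # Train: every worker takes a few gradient steps with its own lr.
        for worker in population:
            for _step in range(steps_per_round):
                worker["w"] -= worker["lr"] * 2.0 * worker["w"]
        # Rank workers from lowest to highest loss.
        population.sort(key=lambda m: m["w"] ** 2)
        best, worst = population[0], population[-1]
        # Exploit: the worst worker copies the best worker's weights.
        worst["w"] = best["w"]
        # Explore: perturb the copied learning rate, capped for stability.
        worst["lr"] = min(best["lr"] * rng.choice((0.8, 1.2)), 0.45)
    return population


population = pbt()
best_worker = min(population, key=lambda m: m["w"] ** 2)
print(f"best loss = {best_worker['w'] ** 2:.3e}, lr = {best_worker['lr']:.3f}")
```

Unlike the search methods that fix hyperparameters for a whole training run, the learning rates here change between rounds while the weights keep training, which is the defining feature of PBT.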

The Bayesian Optimization and HyperBand (BOHB) method is a mix of Bayesian optimization and Hyperband. It uses Bayesian optimization’s model-guided selection of configurations together with Hyperband’s efficient resource allocation. This makes it a strong choice for complex models.

| Optimization Strategy | Key Features | Strengths |
|---|---|---|
| Hyperband | Adaptive resource allocation, early-stopping | Quickly identifies promising hyperparameter configurations |
| Population-based Training (PBT) | Parallel search, sequential optimization, dynamic hyperparameter adaptation | Continuous model improvement during training |
| Bayesian Optimization and HyperBand (BOHB) | Combines Bayesian optimization and Hyperband | Data-driven optimization with efficient resource utilization |

These advanced strategies aim to make hyperparameter tuning faster and more efficient. They are especially useful for complex neural networks, natural language processing models, and computer vision tasks. By using these new methods, experts can unlock machine learning’s full potential, leading to big breakthroughs in many fields.

Conclusion

Hyperparameter optimization is key in machine learning. It finds the best hyperparameters to boost model performance. Techniques range from simple grid search to advanced Bayesian optimization.

Choosing the right hyperparameters can greatly improve a model’s accuracy and efficiency. It’s a crucial skill for anyone working in machine learning.

Predictive Analytics, Data Mining, Supervised Learning, and Unsupervised Learning all benefit from hyperparameter optimization. By understanding hyperparameters and using the right techniques, practitioners can build models that give better insights and behave more reliably.

As Artificial Intelligence grows, so does the need for hyperparameter optimization. It’s important for practitioners to keep up with new strategies. By using these strategies, they can make their models better, leading to new discoveries in various fields.