How Do Recurrent Neural Networks (RNNs) Work in Sequence Prediction?

In machine learning and artificial intelligence, Recurrent Neural Networks (RNNs) are a key tool for sequential data such as time series, text, and speech. Unlike feedforward neural networks, which treat each input independently, RNNs process a sequence one step at a time and carry information forward from earlier steps. Because they take the order of the data into account, they are well suited to predicting what comes next in a sequence.

RNNs keep an internal memory, called the hidden state, that summarizes the inputs seen so far. This lets them learn patterns that unfold over time, which makes them useful for tasks such as forecasting stock prices, recognizing speech, and processing natural language.

As deep learning models, RNNs have been applied to a wide range of sequence-related problems in many different fields. As artificial intelligence continues to develop, sequence models like RNNs are likely to remain important for making predictions and building intelligent systems.

Understanding the Fundamentals of RNNs and Sequential Data

Recurrent Neural Networks (RNNs) are designed for sequential data such as time series, text, and speech. They differ from Feedforward Neural Networks (FNNs), which map each input to an output independently: an RNN feeds its hidden state back into itself at every step, so it can take the order of the data into account.

Types of Sequential Data Patterns

Sequential data is ordered, and each element often depends on the ones that came before it. RNNs can learn patterns in this data, such as:

- Trends

- Seasonality

- Cyclic patterns

- Noise and irregularities

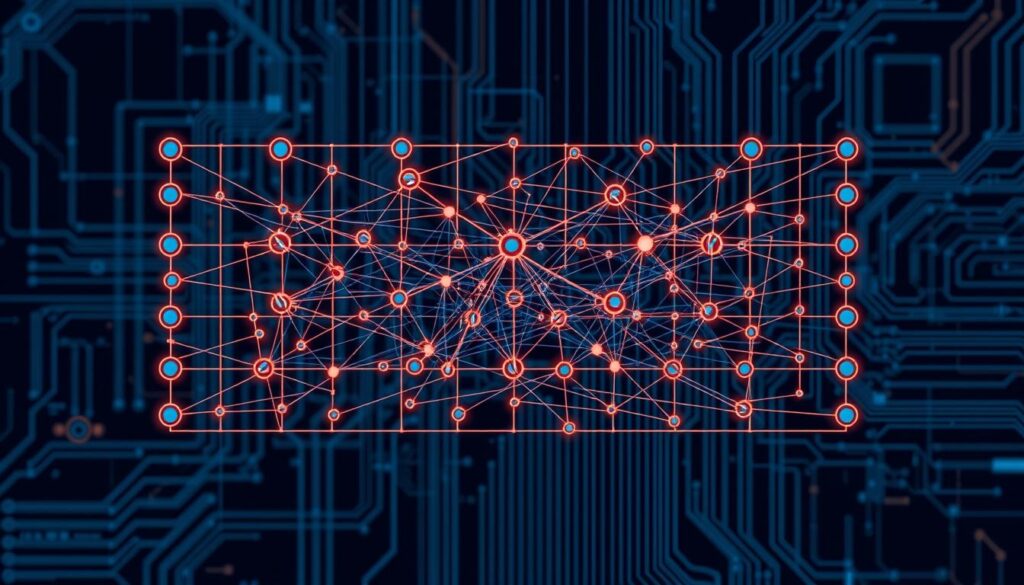
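To make these patterns concrete, here is a minimal sketch in Python that builds a synthetic series from a trend, a seasonal cycle, and random noise, then frames it as a sequence prediction problem by pairing each window of past values with the value that follows. The function names, the 12-step period, and the window size are illustrative assumptions, not part of any particular library.

```python
import math
import random


def make_series(n_steps, seed=0):
    """Synthetic series: linear trend + seasonal sine wave + Gaussian noise."""
    rng = random.Random(seed)  # seeded so the series is reproducible
    series = []
    for t in range(n_steps):
        trend = 0.05 * t                          # slow upward drift
        season = math.sin(2 * math.pi * t / 12)   # cycle repeating every 12 steps
        noise = rng.gauss(0.0, 0.1)               # irregular component
        series.append(trend + season + noise)
    return series


def sliding_windows(series, window):
    """Pair each run of `window` past values with the value that follows it."""
    return [
        (series[start:start + window], series[start + window])
        for start in range(len(series) - window)
    ]


series = make_series(48)
pairs = sliding_windows(series, window=12)
print(len(pairs), "training pairs")
```

Each pair is one training example: the RNN reads the 12 inputs in order and is trained to predict the target value.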

Core Components of RNN Architecture

An RNN has three main parts:

- Input layer: receives one element of the sequence at each time step

- Hidden layer: combines the current input with the previous hidden state, which summarizes past inputs

- Output layer: makes a prediction from the current hidden state
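For a basic (Elman-style) RNN, the three parts are commonly written as the equations below, where x_t is the input at step t, h_t is the hidden state, ŷ_t is the output, and the W and b terms are learned weights and biases shared across all time steps. The tanh activation is a common choice, not the only one.

```latex
\begin{aligned}
h_t &= \tanh\left(W_{xh}\, x_t + W_{hh}\, h_{t-1} + b_h\right) \\
\hat{y}_t &= W_{hy}\, h_t + b_y
\end{aligned}
```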

The Role of Hidden States in RNNs

The hidden state acts as the RNN's memory. At each step it is updated from the new input and its own previous value, so information from earlier in the sequence can influence later predictions. This makes RNNs well suited to natural language processing, time series analysis, and other sequential data tasks.
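The following minimal sketch assumes a one-unit RNN with arbitrary hand-picked weights (not trained values). It shows that the final hidden state depends on the order of the inputs: the same three values fed in a different order produce a different state, whereas a simple sum of the inputs would be identical in both cases. It also hints at a limitation: the influence of an early input has mostly faded by the last step.

```python
import math


def final_state(inputs, w_x=0.8, w_h=0.5):
    """Run a one-unit RNN, h_t = tanh(w_x * x_t + w_h * h_{t-1}), and return the last state."""
    h = 0.0  # empty memory before the first input
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h)
    return h


early = final_state([1.0, 0.0, 0.0])  # the informative input comes first
late = final_state([0.0, 0.0, 1.0])   # the same input comes last
print(round(early, 3), round(late, 3))
```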

Machine Learning and Neural Network Basics

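Machine learning is a key tool for analyzing data and making predictions. Many modern methods are built on artificial neural networks, which are loosely inspired by the brain. These networks consist of layers of nodes whose weights are learned from data: backpropagation computes how much each weight contributed to the error, and an optimizer such as gradient descent adjusts the weights to reduce it. These basics are needed to understand how Recurrent Neural Networks (RNNs) are used for sequence prediction.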

Two common types of machine learning are supervised learning and unsupervised learning. Supervised learning trains models on labeled data, so the model learns to map inputs to the correct outputs. Unsupervised learning, by contrast, finds patterns in data without labels.
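As a minimal sketch of supervised training, the snippet below fits a single linear neuron, y ≈ w·x + b, to labeled pairs generated from y = 2x + 1. With no hidden layers, backpropagation reduces to one application of the chain rule, and the resulting gradients drive plain gradient descent. The learning rate and epoch count are illustrative assumptions.

```python
def train_linear_neuron(data, lr=0.05, epochs=200):
    """Fit y = w*x + b to labeled (x, y) pairs by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            error = (w * x + b) - y      # prediction minus label
            grad_w += 2 * error * x / n  # dLoss/dw by the chain rule
            grad_b += 2 * error / n      # dLoss/db
        w -= lr * grad_w                 # step against the gradient
        b -= lr * grad_b
    return w, b


data = [(x, 2 * x + 1) for x in range(-3, 4)]  # labels generated from y = 2x + 1
w, b = train_linear_neuron(data)
print(round(w, 3), round(b, 3))
```

In a multi-layer network, backpropagation applies the same chain rule layer by layer to get the gradient for every weight.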

Artificial neural networks are central to deep learning. They have input, hidden, and output layers. Adding hidden layers lets a network represent more complex functions, and more training data generally helps it generalize, although neither guarantees better results on its own.
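For contrast with the recurrent networks discussed later, here is a hypothetical feedforward pass through input, hidden, and output layers, written in plain Python with made-up weights. Each call depends only on its current input; nothing is remembered between calls.

```python
import math


def dense(inputs, weights, biases, activation=None):
    """Fully connected layer: out_j = activation(sum_i weights[j][i] * inputs[i] + biases[j])."""
    outputs = []
    for row, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(row, inputs)) + bias
        outputs.append(activation(z) if activation else z)
    return outputs


def feedforward(x):
    """2 inputs -> 3 tanh hidden units -> 1 linear output, with made-up weights."""
    hidden = dense(x, [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1], math.tanh)
    return dense(hidden, [[1.0, -1.0, 0.5]], [0.0])


print(feedforward([1.0, 2.0]))
print(feedforward([1.0, 2.0]))  # same input, same output: no state is kept
```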

Machine learning and neural networks have changed many fields. They power virtual assistants and detect fraud in real time. As the technology matures, their uses will likely keep growing and shape more of the decisions we make.

The Architecture of Recurrent Neural Networks

Recurrent Neural Networks (RNNs) are a type of deep learning model designed to handle sequential data. Unlike feedforward neural networks, RNNs retain information about past inputs and use it when processing new ones.

Input Layer Processing

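RNNs take in a sequence one element at a time. At each time step, the input layer receives a single element, such as a word or a video frame, encoded as a vector of numbers. This step-by-step processing is what makes RNNs suitable for tasks like language understanding and time series analysis.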
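One simple way to turn words into input vectors is one-hot encoding, sketched below with a hypothetical four-word vocabulary. Practical systems usually use learned embeddings instead, but the idea is the same: the RNN receives one vector per time step.

```python
def one_hot_sequence(tokens, vocab):
    """Encode each token as a one-hot vector; the RNN consumes one vector per time step."""
    index = {word: i for i, word in enumerate(vocab)}
    sequence = []
    for token in tokens:
        vector = [0.0] * len(vocab)
        vector[index[token]] = 1.0
        sequence.append(vector)
    return sequence


vocab = ["the", "cat", "sat", "down"]  # hypothetical toy vocabulary
steps = one_hot_sequence(["the", "cat", "sat"], vocab)
for t, x_t in enumerate(steps):
    print(f"step {t}: {x_t}")
```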

Hidden Layer Mechanisms

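The hidden layer is the core of an RNN. At each step it combines the new input with the previous hidden state, typically by adding their weighted sums and applying a nonlinear activation such as tanh. The result is a new hidden state that is passed to the output layer and carried forward to the next step. The same weights are reused at every time step.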
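The update described above can be sketched in plain Python as follows. This assumes a vanilla RNN with a tanh activation; the sizes and weight values are arbitrary placeholders rather than trained parameters. Note that the same weight matrices are applied at every time step.

```python
import math


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def rnn_step(x_t, h_prev, W_xh, W_hh, b_h):
    """One vanilla RNN step: h_t = tanh(W_xh x_t + W_hh h_prev + b_h)."""
    from_input = matvec(W_xh, x_t)      # contribution of the new input
    from_memory = matvec(W_hh, h_prev)  # contribution of the previous state
    return [math.tanh(xi + hi + bi) for xi, hi, bi in zip(from_input, from_memory, b_h)]


# Placeholder sizes: 2-dimensional input, 3-dimensional hidden state
W_xh = [[0.5, -0.1], [0.2, 0.3], [-0.4, 0.1]]
W_hh = [[0.1, 0.0, 0.2], [0.0, 0.3, -0.1], [0.2, 0.1, 0.0]]
b_h = [0.0, 0.0, 0.0]

h = [0.0, 0.0, 0.0]  # initial hidden state
for x_t in [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]:
    h = rnn_step(x_t, h, W_xh, W_hh, b_h)  # same weights at every step
print(h)
```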

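In principle, this recurrent state lets an RNN carry information across many time steps, which a feedforward network with a fixed input window cannot do. In practice, plain RNNs have difficulty learning very long-range dependencies, as the section on the vanishing gradient problem explains.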

Output Generation Process

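The output layer makes a prediction from the current hidden state. Depending on the task, this can be a class label (for example, the next word) or a numeric value (for example, tomorrow's temperature). As new inputs arrive, the hidden state is updated and the network can produce a new prediction at each step; the weights themselves are adjusted only during training.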
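A minimal sketch of the output layer, assuming a classification task: a linear readout maps the hidden state to one score per class, and a softmax turns those scores into probabilities. The weights and hidden state here are made-up values; for predicting a numeric value, the softmax would simply be omitted.

```python
import math


def output_layer(h_t, W_hy, b_y):
    """Linear readout from the hidden state: y_t = W_hy h_t + b_y."""
    return [sum(w * h for w, h in zip(row, h_t)) + b for row, b in zip(W_hy, b_y)]


def softmax(logits):
    """Turn raw scores into probabilities (subtracting the max avoids overflow)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


h_t = [0.2, -0.5, 0.9]                     # made-up hidden state
W_hy = [[1.0, 0.0, 0.5], [-0.5, 1.0, 0.0]]  # two output classes
b_y = [0.0, 0.1]
logits = output_layer(h_t, W_hy, b_y)
probs = softmax(logits)
print(probs)
```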

By updating their hidden state at each step and producing predictions along the way, RNNs provide a natural architecture for sequence modeling in deep learning.

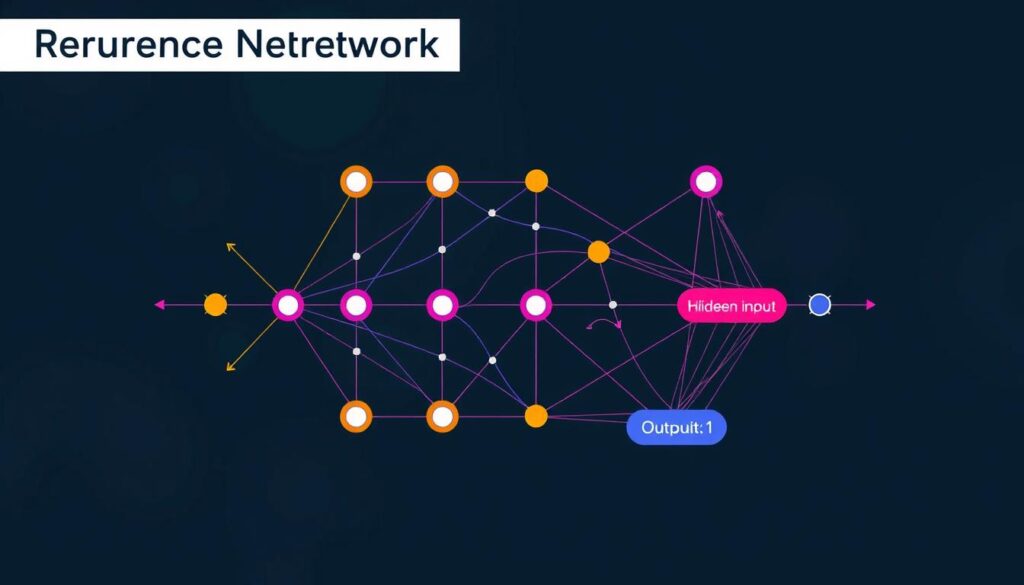

Memory Capabilities and Information Processing in RNNs

The hidden state gives a Recurrent Neural Network (RNN) a form of memory: at each step it carries forward a summary of what the network has seen so far. This lets the network use context from earlier in the sequence when making a prediction, which is essential for temporal data such as sentences or sensor readings.

However, standard RNNs struggle with very long sequences because of the vanishing gradient problem: the training signal from distant time steps becomes too small to learn from. To address this, variants such as Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs) were developed. Their gating mechanisms help them capture long-term dependencies.

| RNN Capability | Advantages | Challenges |
|---|---|---|
| Memory retention | Can remember and use past information when making predictions | Struggles with long sequences because of vanishing gradients |
| Contextual understanding | Better comprehension of sequential data and improved prediction accuracy | Training and tuning RNN models can be complex |
| Versatility in applications | Used in diverse domains such as natural language processing, speech recognition, and financial forecasting | More computationally intensive than feedforward networks, since time steps are processed one after another |

Because of this memory and their ability to use temporal context, RNNs became a key building block in deep learning and contributed to major advances in fields such as language and speech processing.

Addressing the Vanishing Gradient Problem

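The vanishing gradient problem is a major challenge in training deep neural networks, including Recurrent Neural Networks (RNNs). During training, an RNN is unrolled over time and trained with backpropagation through time, so the gradient that reaches an early step is a product of one factor per intervening step. When these factors are smaller than one, the product shrinks exponentially with sequence length, which makes it hard for the network to learn long-term dependencies.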
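The shrinking product can be observed numerically. This sketch assumes a one-unit RNN, h_t = tanh(w_x·x_t + w_h·h_{t-1}), with illustrative weights, and multiplies the per-step derivatives w_h·(1 - h_t²) to get the gradient of the last state with respect to the initial one. Because each factor here is below 0.9, the gradient decays at least as fast as 0.9^T.

```python
import math


def gradient_through_time(inputs, w_x=0.5, w_h=0.9):
    """Return dh_T/dh_0 for a one-unit RNN h_t = tanh(w_x * x_t + w_h * h_{t-1}).

    By the chain rule this is the product over steps of dh_t/dh_{t-1} = w_h * (1 - h_t**2).
    """
    h, grad = 0.0, 1.0
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h)
        grad *= w_h * (1.0 - h * h)  # local derivative of this step
    return grad


for T in (5, 20, 50):
    print(T, gradient_through_time([1.0] * T))
```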

LSTM Networks

Long Short-Term Memory (LSTM) networks were introduced by Sepp Hochreiter and Jürgen Schmidhuber in 1997. They are a type of RNN designed to tackle the vanishing gradient problem. LSTMs add a memory cell and gates that control what information is written to the cell, kept in it, and read from it. Because the cell is updated additively, gradients can flow across many time steps without vanishing as quickly, which makes LSTMs better at learning long-term dependencies in sequential data.
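Below is a minimal sketch of one LSTM step, assuming a hidden size of 1 and the now-common formulation with a forget gate (the forget gate was added to the original 1997 design in later work). The parameter dictionary and its values are hypothetical. The forget-gate bias of 3.0 keeps that gate close to 1, so the value written to the cell at the first step is largely retained over the following steps.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One step of a one-unit LSTM; p[gate] = (w_x, w_h, b) are hypothetical parameters."""
    def pre(gate):
        w_x, w_h, b = p[gate]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre("forget"))       # how much of the old cell state to keep
    i = sigmoid(pre("input"))        # how much of the candidate to write
    o = sigmoid(pre("output"))       # how much of the cell to expose as h
    g = math.tanh(pre("candidate"))  # candidate new content
    c = f * c_prev + i * g           # additive cell update
    h = o * math.tanh(c)
    return h, c


params = {"forget": (0.0, 0.0, 3.0), "input": (1.0, 0.0, -1.0),
          "output": (1.0, 0.0, 0.0), "candidate": (1.0, 0.0, 0.0)}
h, c = 0.0, 0.0
for x in [1.0, 0.0, 0.0, 0.0]:  # one informative input, then zeros
    h, c = lstm_step(x, h, c, params)
print(round(c, 4))
```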

Gated Recurrent Units (GRU)

Gated Recurrent Units (GRUs) are a simpler alternative to LSTMs that also address the vanishing gradient problem. A GRU has two gates, an update gate and a reset gate, and no separate memory cell, so it has fewer parameters. The gates control how much of the previous hidden state is kept and how much is replaced by new information at each step.
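A comparable sketch of one GRU step, again assuming a hidden size of 1 and hypothetical parameters. Conventions differ on whether the update gate z or 1 - z weights the previous state; here z is the fraction of new content, so a z near 0 keeps the old state almost unchanged.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def gru_step(x, h_prev, p):
    """One step of a one-unit GRU; p[gate] = (w_x, w_h, b) are hypothetical parameters."""
    def pre(gate, h_in):
        w_x, w_h, b = p[gate]
        return w_x * x + w_h * h_in + b

    z = sigmoid(pre("update", h_prev))                 # fraction of new content to take
    r = sigmoid(pre("reset", h_prev))                  # how much old state the candidate sees
    h_tilde = math.tanh(pre("candidate", r * h_prev))  # candidate state
    return (1.0 - z) * h_prev + z * h_tilde            # blend old state and candidate


params = {"update": (1.0, 1.0, 0.0), "reset": (1.0, 1.0, 0.0), "candidate": (1.0, 0.5, 0.0)}
h = 0.0
for x in [1.0, -1.0, 0.5]:
    h = gru_step(x, h, params)
print(round(h, 4))
```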

Advanced Solutions and Techniques

Other techniques also help with unstable gradients. Careful weight initialization (for example, orthogonal initialization of the recurrent weights) can keep gradients from shrinking or growing too quickly. Gradient clipping rescales gradients whose norm exceeds a threshold; it prevents exploding gradients but does not fix vanishing ones. Skip connections, as used in Residual Networks (ResNets), give gradients a shorter path through deep architectures. Together, these methods make training more stable and improve a network's ability to learn complex features from sequential data.
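Gradient clipping by global norm can be sketched as follows: if the combined L2 norm of the gradients exceeds a threshold, every gradient is scaled down by the same factor, preserving their direction. The function name and threshold are illustrative; deep learning frameworks ship their own versions, such as PyTorch's `torch.nn.utils.clip_grad_norm_`.

```python
import math


def clip_by_global_norm(grads, max_norm):
    """Scale all gradients by one common factor so their combined L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)  # small enough: leave unchanged
    scale = max_norm / norm
    return [g * scale for g in grads]


exploding = [30.0, -40.0]  # combined norm 50
clipped = clip_by_global_norm(exploding, max_norm=5.0)
tiny = clip_by_global_norm([1e-8, -2e-8], max_norm=5.0)  # tiny gradients pass through untouched
print(clipped, tiny)
```

The second call shows why clipping does not help with vanishing gradients: it only ever shrinks large gradients.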