What is the difference between a unigram, bigram, and n-gram model?

In natural language processing, unigram, bigram, and n-gram models are statistical language models built on short word sequences. They estimate the probability of word sequences from counts in a corpus. These models capture local patterns in text, which helps in tasks like language modeling and sentiment analysis.

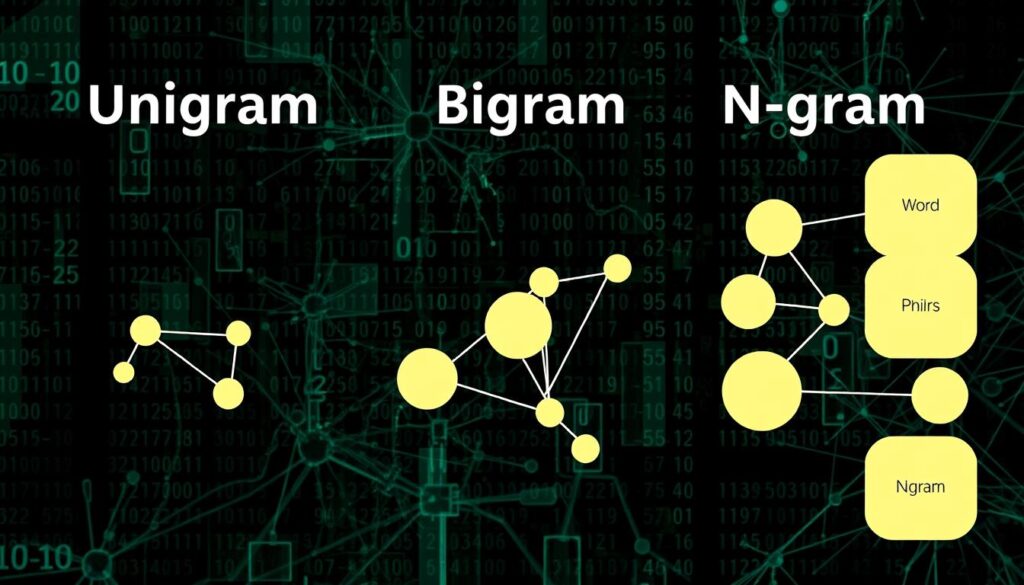

A unigram model treats each word independently, ignoring all surrounding words. A bigram model conditions each word on the single word before it. More generally, an n-gram model uses the previous n-1 words to predict the next one, assuming the next word depends only on those words.
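As a minimal sketch of what these units look like in practice, the snippet below slides a window of size n over a token list. The helper name `ngrams` and the whitespace tokenization are assumptions made for illustration, not part of any particular library.

```python
def ngrams(tokens, n):
    """Return every contiguous window of n tokens as a tuple."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

tokens = "the quick brown fox".split()

print(ngrams(tokens, 1))  # [('the',), ('quick',), ('brown',), ('fox',)]
print(ngrams(tokens, 2))  # [('the', 'quick'), ('quick', 'brown'), ('brown', 'fox')]
print(ngrams(tokens, 3))  # [('the', 'quick', 'brown'), ('quick', 'brown', 'fox')]
```

A sentence shorter than n simply yields an empty list, which is one small symptom of why higher-order models need more data.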

Choosing the right n depends on the task’s complexity and the amount of training data. Unigrams are simple, while bigrams and higher-order n-grams capture more context. However, as n increases, so do the model’s complexity and resource needs.

Introduction to Language Models

Language models are key in natural language processing (NLP). They help machines understand, create, and analyze human language. These models learn to predict the next word in a sentence by assigning a probability to each candidate word.

Language Models and their Importance

Language models are used in many NLP tasks. They help with writing, tagging words, answering questions, summarizing texts, and more. They are vital for making systems that can talk to us like humans.

Probabilistic Language Models

Statistical language models use patterns to guess word sequences. They look at groups of words, like two-word pairs, to predict what comes next. This helps pick the most likely word sequences.

Neural language models, like RNNs and Transformers, use neural networks to predict word sequences. RNNs carry a hidden state that summarizes the preceding words, which helps capture long-range dependencies in language.

Language models have grown from simple models to complex ones like GPT-3 and T5. These new models can create text that makes sense and is relevant. They show how far NLP has come.

N-Gram Language Models Explained

N-gram language models estimate the probability of a word sequence. They rely on the Markov assumption: the probability of a word depends only on the n-1 words before it. This is much easier to estimate than a probability conditioned on the whole word history, because language is creative and most long histories never appear in any training corpus.
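Written out, the simplification looks roughly like this. The first product is the exact chain rule over the full history, and the second is the n-gram approximation. The sentence length m is a symbol introduced here for illustration.

$$
P(w_1, \dots, w_m) = \prod_{i=1}^{m} P(w_i \mid w_1, \dots, w_{i-1}) \approx \prod_{i=1}^{m} P(w_i \mid w_{i-n+1}, \dots, w_{i-1})
$$

Setting n = 2 leaves only the previous word in each condition, which gives the bigram model. Setting n = 1 drops the condition entirely, which gives the unigram model.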

Unigram, Bigram, and Trigram Models

The unigram model looks at each word alone, ignoring context. The bigram model examines pairs of words. And the trigram model looks at sequences of three words. Models like 4-grams or 5-grams can handle longer sequences but get complex and face data sparsity.

Markov Assumption and N-Gram Models

The Markov assumption says a word’s chance depends only on the n-1 words before it. This makes calculating probabilities easier. N-gram models are effective in tasks like language modeling and machine translation, despite this simplification.

| Model | Probability Calculation | Example |
|---|---|---|
| Unigram | P(w_i) | P(“the”) |
| Bigram | P(w_i \| w_{i-1}) | P(“quick” \| “the”) |
| Trigram | P(w_i \| w_{i-2}, w_{i-1}) | P(“brown” \| “the”, “quick”) |
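To see how these conditional probabilities combine into a score for a whole sequence, here is a small sketch of bigram scoring. The probability values are made up for illustration, and the `<s>` start-of-sentence marker is an assumption that the text above does not use. Summing log probabilities instead of multiplying raw probabilities is a common way to avoid numerical underflow on long sentences.

```python
import math

# Hypothetical bigram probabilities, chosen only for illustration.
bigram_prob = {
    ("<s>", "the"): 0.5,
    ("the", "quick"): 0.2,
    ("quick", "brown"): 0.4,
}

def sentence_log_prob(tokens, probs):
    """Sum log P(w_i | w_{i-1}) over the sentence; return -inf if any bigram is unseen."""
    padded = ["<s>"] + tokens  # assumed start marker so the first word has a history
    total = 0.0
    for prev, word in zip(padded, padded[1:]):
        p = probs.get((prev, word), 0.0)
        if p == 0.0:
            return float("-inf")
        total += math.log(p)
    return total

log_p = sentence_log_prob(["the", "quick", "brown"], bigram_prob)
print(math.exp(log_p))  # 0.5 * 0.2 * 0.4 = 0.04, up to floating-point rounding
```

Note that a single unseen bigram drives the whole sentence to zero probability, which is the data sparsity problem in miniature.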

Natural Language Processing with N-Grams

N-gram language models are key in natural language processing (NLP). They are used in many areas, like machine translation and spell checking. They also help in speech recognition and text prediction. These models assign probabilities to word sequences, helping choose the best interpretation of unclear text.

N-gram models capture local context and recurring patterns in text. Common types of N-grams include unigrams (n=1), bigrams (n=2), and trigrams (n=3). Unigrams are single words, while bigrams and trigrams are pairs and triplets of consecutive words. These sequences help language models get better at understanding and predicting text.

In natural language processing, N-gram models are widely used for text prediction. They guess the next word based on the words that come before it, which is especially useful for predictive text on keyboards and phones. N-grams also help in finding and ranking documents based on their relevance.
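A predictive-text feature of this kind can be sketched with bigram counts alone. The toy corpus and the function names `build_next_word_table` and `suggest` are hypothetical, and a real keyboard would use far more data and a more careful tokenizer.

```python
from collections import Counter, defaultdict

def build_next_word_table(sentences):
    """Map each word to a Counter of the words observed right after it."""
    table = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.lower().split()
        for prev, word in zip(tokens, tokens[1:]):
            table[prev][word] += 1
    return table

def suggest(table, prev_word, k=3):
    """Return up to k of the most frequent followers of prev_word."""
    followers = table.get(prev_word.lower(), Counter())
    return [word for word, _ in followers.most_common(k)]

# Toy corpus, invented for this example.
toy_corpus = [
    "see you soon",
    "see you later",
    "see you later today",
    "thank you very much",
]

table = build_next_word_table(toy_corpus)
print(suggest(table, "you"))    # 'later' is ranked first: it follows "you" twice
print(suggest(table, "zebra"))  # [] because "zebra" was never seen
```

The empty result for an unseen word shows why practical systems usually fall back to a lower-order model when the higher-order one has no evidence.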

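However, N-gram models have some challenges. As ‘n’ gets bigger, the number of possible N-grams grows exponentially with the vocabulary size, so most of them never appear in the training data. This causes both storage and data sparsity problems. Sparsity is commonly handled with smoothing and backoff techniques, and large-scale storage platforms such as data lakehouses are one option being explored for the storage side.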
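The scale of the problem is easy to check with arithmetic. The sketch below compares the number of possible N-grams with the most a corpus could ever contain. The vocabulary size and corpus size are hypothetical round numbers, not measurements.

```python
def possible_ngrams(vocab_size, n):
    """Number of distinct n-grams that could exist over a vocabulary."""
    return vocab_size ** n

def max_observed_ngrams(corpus_tokens, n):
    """Upper bound on distinct n-grams in one token stream of the given length."""
    return max(corpus_tokens - n + 1, 0)

vocab_size = 10_000        # hypothetical vocabulary size
corpus_tokens = 1_000_000  # hypothetical corpus length in tokens

for n in range(1, 5):
    possible = possible_ngrams(vocab_size, n)
    observed_cap = max_observed_ngrams(corpus_tokens, n)
    print(f"n={n}: {possible:,} possible, at most {observed_cap:,} observed")
```

Under these assumed sizes, even a million-token corpus can cover only a vanishing fraction of the possible trigrams, so most counts are zero no matter how the data is stored.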

In summary, N-gram models are vital in natural language processing. They help in many applications that use language modeling and text analysis.

Estimating N-Gram Probabilities

Language models assign probabilities to words or sentences. This is key for tasks such as error correction and for communication systems that must choose among candidate word sequences. The building block of these models is the n-gram, such as a bigram or trigram.

Maximum Likelihood Estimation

Maximum likelihood estimation (MLE) is the standard way to estimate n-gram probabilities. It counts n-grams in a training corpus and normalizes the counts. For example, the bigram probability P(w_i | w_{i-1}) is the count of the bigram, C(w_{i-1} w_i), divided by the count of the preceding word, C(w_{i-1}). The parameters come directly from the data, with no need for an explicit model of how language is produced.
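The same count-and-normalize recipe extends to any n. The following is a minimal sketch, assuming a pre-tokenized list and no sentence-boundary markers. The helper names `windows` and `mle_ngram_probs` are made up for this example.

```python
from collections import Counter

def windows(tokens, n):
    """All contiguous n-token windows, as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def mle_ngram_probs(tokens, n):
    """Estimate P(last word | previous n-1 words) as C(n-gram) / C(history)."""
    gram_counts = Counter(windows(tokens, n))
    if n == 1:
        # A unigram has no history, so normalize by the total token count.
        total = len(tokens)
        return {gram: count / total for gram, count in gram_counts.items()}
    history_counts = Counter(windows(tokens, n - 1))
    return {
        gram: count / history_counts[gram[:-1]]
        for gram, count in gram_counts.items()
    }

tokens = "a b a c a b".split()
bigram_probs = mle_ngram_probs(tokens, 2)
print(bigram_probs[("a", "b")])  # 2 of the 3 occurrences of "a" are followed by "b"
print(bigram_probs[("c", "a")])  # 1.0: "c" occurs once and is followed by "a"
```

Because the history count includes a word's final occurrence at the end of the text, the probabilities for that history can sum to less than 1. Adding an explicit end-of-sentence token is a common way to close that gap.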

Example of Estimating Bigram Probabilities

Consider a training corpus with these sentences:

- “Thank you so much for your help”

- “I really appreciate your help”

- “Excuse me, do you know what time it is?”

- “I’m really sorry for not inviting you”

- “I really like your watch”

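To find bigram probabilities, we count each bigram and divide by the count of its first word. For instance, “really like” occurs once, while “really” occurs 3 times (in “really appreciate”, “really sorry”, and “really like”). So the probability of “like” given “really” is 1/3 ≈ 0.33. By contrast, “thank” occurs once and is always followed by “you”, so the probability of “you” given “thank” is 1/1 = 1.0.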

| Bigram | Count | Count of first word | Probability |
|---|---|---|---|
| thank you | 1 | 1 | 1.0 |
| you so | 1 | 3 | 0.33 |
| so much | 1 | 1 | 1.0 |
| really appreciate | 1 | 3 | 0.33 |
| do you | 1 | 1 | 1.0 |
| you know | 1 | 3 | 0.33 |
| really sorry | 1 | 3 | 0.33 |
| really like | 1 | 3 | 0.33 |
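These counts can be checked with a short script over the five training sentences. The tokenizer here (lowercasing and keeping runs of letters and apostrophes) is an assumption, and a different tokenizer could change some counts.

```python
import re
from collections import Counter

corpus = [
    "Thank you so much for your help",
    "I really appreciate your help",
    "Excuse me, do you know what time it is?",
    "I'm really sorry for not inviting you",
    "I really like your watch",
]

def tokenize(sentence):
    """Lowercase, then keep runs of letters and apostrophes (a deliberately simple tokenizer)."""
    return re.findall(r"[a-z']+", sentence.lower())

unigram_counts = Counter()
bigram_counts = Counter()
for sentence in corpus:
    tokens = tokenize(sentence)
    unigram_counts.update(tokens)
    bigram_counts.update(zip(tokens, tokens[1:]))  # bigrams never cross sentence boundaries

def bigram_prob(prev, word):
    """MLE estimate C(prev word) / C(prev); 0.0 if prev was never seen."""
    if unigram_counts[prev] == 0:
        return 0.0
    return bigram_counts[(prev, word)] / unigram_counts[prev]

print(unigram_counts["really"])                 # 3
print(round(bigram_prob("really", "like"), 2))  # 0.33
print(bigram_prob("thank", "you"))              # 1.0
print(round(bigram_prob("you", "know"), 2))     # 0.33
```

Counting bigrams per sentence keeps pairs like “help i” out of the table, since those words are adjacent only because two sentences happen to be listed next to each other.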

This MLE method is key in natural language processing. It helps create powerful language models for many applications.

Conclusion

N-gram language models have been key in natural language processing. They use the Markov assumption to predict word sequences. This makes them useful for many tasks, like machine translation and text generation.

Even though newer models exist, n-grams remain important. They help us understand how language works. This knowledge is vital as NLP keeps getting better.

The future of NLP looks bright. By combining n-grams with new machine learning methods, we’ll see even better results. This will help computers communicate with us and understand us more like humans do.