What is BERT (Bidirectional Encoder Representations from Transformers) and how is it used?

BERT is a groundbreaking language model developed by Google AI in 2018, and it has changed the game in natural language processing (NLP). Earlier models typically read text in a single direction, left to right or right to left. BERT instead uses the context on both the left and the right of every word at the same time, which lets it grasp language nuances better.

Thanks to this, BERT excels at many NLP tasks, including sentiment analysis, text classification, question answering, and natural language inference. Its grasp of context has also made it valuable for search engines such as Google.

Google uses BERT to better understand search queries, which leads to more relevant search results. BERT was a big leap in NLP and is now a key part of many AI systems, showing how much deep contextual understanding of text matters, both for language tasks and for search.

Introduction to BERT

BERT stands for Bidirectional Encoder Representations from Transformers. It’s a groundbreaking natural language processing (NLP) model from Google. Introduced in 2018, BERT changed how machines understand language by representing each word in light of its full surrounding context.

Background and History of BERT

BERT was created to overcome the shortcomings of earlier language models. Most of those models read text in only one direction, or simply combined two separately trained one-directional models, so they could not use the full context around a word when building its representation. BERT uses the Transformer neural network architecture to model language more completely, changing how machines process natural language.

To pre-train BERT, Google used a corpus of about 3.3 billion words, made up of English Wikipedia (about 2.5 billion words) and the BooksCorpus (about 800 million words). The model was trained to predict masked words in sentences and to tell whether one sentence follows another, which helped it learn language patterns and relationships.

BERT’s Advantages over Previous Language Models

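BERT’s Transformer architecture differs from older models such as RNNs and CNNs. An RNN reads a sentence one token at a time and a CNN looks at a local window of tokens, whereas the Transformer processes all tokens in parallel and lets each token attend directly to every other token, which improves language understanding. BERT also uses WordPiece tokenization with a vocabulary of about 30,000 tokens: words outside the vocabulary are broken into smaller known pieces, so the model can still represent rare or unseen words.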
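The WordPiece splitting step can be sketched as a greedy longest-match-first search, the strategy used by BERT’s reference tokenizer: take the longest prefix of the word that is in the vocabulary, then repeat on the remainder, marking continuation pieces with a `##` prefix. The function name and the tiny vocabulary below are hypothetical, for illustration only; the real tokenizer also lowercases text (for uncased models), splits off punctuation, and uses a vocabulary of about 30,000 entries.

```python
def wordpiece_tokenize(word, vocab, unk_token="[UNK]", max_chars=100):
    """Greedy longest-match-first WordPiece split of a single word.

    Continuation pieces are looked up with a '##' prefix, following the
    convention used in BERT's vocabulary files.
    """
    if len(word) > max_chars:
        return [unk_token]
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        # Try the longest remaining substring first, then shrink it.
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:
            # No piece matches: the whole word becomes the unknown token.
            return [unk_token]
        pieces.append(match)
        start = end
    return pieces


# Hypothetical toy vocabulary, for illustration only.
toy_vocab = {"un", "##aff", "##able", "play", "##ing", "##ed"}

print(wordpiece_tokenize("unaffable", toy_vocab))  # ['un', '##aff', '##able']
print(wordpiece_tokenize("playing", toy_vocab))    # ['play', '##ing']
print(wordpiece_tokenize("xyz", toy_vocab))        # ['[UNK]']
```

Because the search is greedy, a word is split into as few, as long pieces as the vocabulary allows, and a word with any unmatchable part maps to `[UNK]` as a whole.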

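When it was released, BERT set new state-of-the-art results on 11 NLP tasks, and on the SQuAD v1.1 question-answering benchmark its F1 score even exceeded the reported human performance. It comes in different sizes, such as BERT Base and BERT Large, to meet different needs.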

BERT’s versatility and high performance have made it a key player in NLP. It’s opening doors to more advanced language understanding applications.

How BERT Works

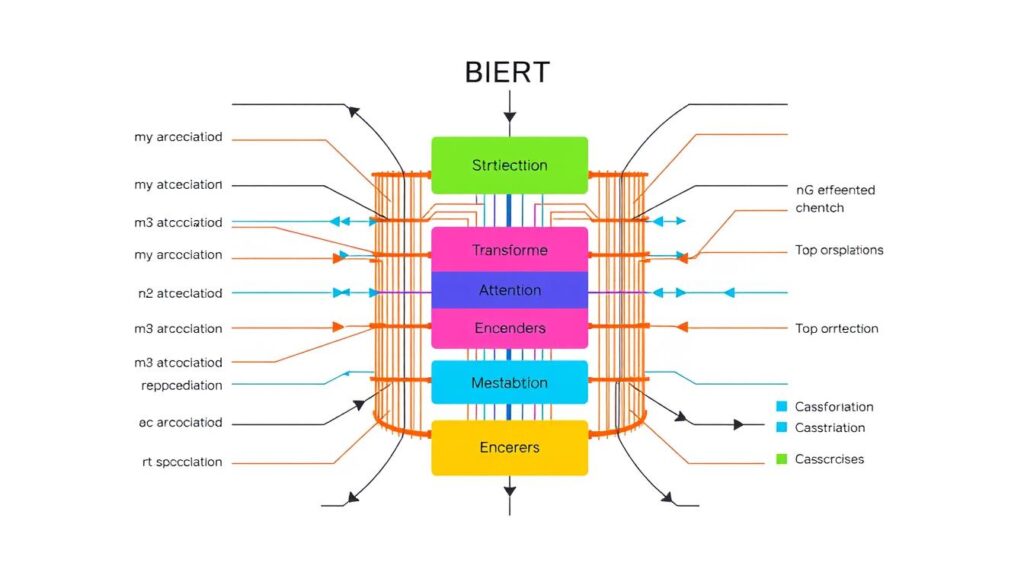

BERT’s architecture is based on the Transformer encoder, a deep learning model that relies on attention mechanisms instead of the recurrence used by RNNs or the convolutions used by CNNs.

Transformer Architecture

The original Transformer has an encoder and a decoder, but BERT uses only the encoder, because it is designed for understanding language rather than generating text. BERT uses the encoder’s attention layers to model the relationships between words in the input.
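The attention computation at the heart of each encoder layer is scaled dot-product attention, softmax(Q Kᵀ / sqrt(d_k)) V, as defined in the original Transformer paper. The sketch below is a minimal single-head version in plain Python, assuming the query, key and value vectors are already given; real BERT layers compute them with learned projections and run 12 or 16 heads in parallel. Because the encoder applies no causal mask, every token scores against tokens on both its left and its right, which is where BERT’s bidirectionality comes from.

```python
import math


def softmax(xs):
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Single-head scaled dot-product attention with no causal mask.

    Each argument is a list of vectors, one per token. Returns the
    attention-weighted value vectors and the attention weight matrix.
    """
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Score this query against every key, on the left and the right alike.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        w = softmax(scores)
        weights.append(w)
        # Output is the weighted sum of the value vectors.
        out = [sum(wj * v[dim] for wj, v in zip(w, values)) for dim in range(len(values[0]))]
        outputs.append(out)
    return outputs, weights


# Three toy 2-dimensional token vectors, reused as Q, K and V for simplicity.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, attn = self_attention(x, x, x)
for row in attn:
    print([round(w, 3) for w in row])
```

Each row of weights sums to 1, so every output vector is a context-dependent mixture of all the input vectors.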

Masked Language Modeling (MLM)

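Masked language modeling (MLM) is a key feature of BERT. During pre-training, BERT selects 15% of the input tokens at random. Of those, 80% are replaced with a special [MASK] token, 10% with a random token, and 10% are left unchanged. The model then tries to predict the original tokens from the context on both sides. This helps it learn word relationships and understand language better.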
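The masking rule can be sketched as follows. This is an illustration, not Google’s pre-training script: here each non-special token is selected independently with probability 0.15, whereas the original data-preparation code samples a fixed number of positions per sequence. The function name `mask_tokens` and the toy vocabulary are hypothetical.

```python
import random

SPECIAL_TOKENS = {"[CLS]", "[SEP]", "[PAD]"}


def mask_tokens(tokens, vocab, mask_prob=0.15, rng=None):
    """Apply BERT-style masking to a token list.

    Returns (masked_tokens, labels): labels[i] holds the original token at
    positions selected for prediction and None everywhere else.
    """
    rng = rng or random.Random()
    masked = list(tokens)
    labels = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if tok in SPECIAL_TOKENS or rng.random() >= mask_prob:
            continue  # not selected: no prediction at this position
        labels[i] = tok  # the model must recover this original token
        r = rng.random()
        if r < 0.8:
            masked[i] = "[MASK]"  # 80% of selected tokens: replace with [MASK]
        elif r < 0.9:
            masked[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: leave the token unchanged
    return masked, labels


sentence = ["[CLS]", "the", "cat", "sat", "on", "the", "mat", "[SEP]"]
masked, labels = mask_tokens(sentence, vocab=["dog", "ran", "hat"], rng=random.Random(0))
print(masked)
print(labels)
```

Randomizing or keeping some selected tokens means the model cannot assume that only `[MASK]` positions matter, which reduces the mismatch with fine-tuning, where `[MASK]` never appears.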

Next Sentence Prediction (NSP)

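BERT is also pre-trained with next sentence prediction (NSP). Given two sentences A and B, it predicts whether B is the sentence that actually follows A in the original text (50% of training pairs) or a random sentence from the corpus (the other 50%). This improves its performance on tasks that depend on the relationship between two sentences, such as question answering and natural language inference.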
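A simplified way to build next sentence prediction training pairs is sketched below, assuming the corpus is given as a list of documents, each a list of sentences. Negative examples are drawn from a different document; Google’s actual pipeline also packs several sentences into each segment up to a maximum sequence length, which this hypothetical `make_nsp_examples` helper leaves out.

```python
import random


def make_nsp_examples(documents, rng=None):
    """Build (sentence_a, sentence_b, label) triples for next sentence prediction.

    documents: a list of documents, each a list of sentence strings.
    Assumes at least two non-empty documents so negatives can be drawn.
    """
    rng = rng or random.Random()
    examples = []
    for d, doc in enumerate(documents):
        for i in range(len(doc) - 1):
            sentence_a = doc[i]
            if rng.random() < 0.5:
                # Positive example: B really follows A.
                examples.append((sentence_a, doc[i + 1], "IsNext"))
            else:
                # Negative example: B is a random sentence from another document.
                other = rng.choice([j for j in range(len(documents)) if j != d])
                examples.append((sentence_a, rng.choice(documents[other]), "NotNext"))
    return examples


docs = [
    ["The cat sat down.", "It started to purr.", "Then it fell asleep."],
    ["Stocks rose today.", "Tech shares led the gains."],
]
for example in make_nsp_examples(docs, rng=random.Random(1)):
    print(example)
```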

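By combining these two objectives, BERT becomes a powerful tool in NLP. Masking is what makes its deep bidirectionality possible: in an ordinary language model, letting each word see both sides would let it trivially see the word it is supposed to predict, whereas hiding that word removes the shortcut.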

BERT Architecture and Input Representations

BERT, released by Google in 2018, comes in two main sizes: BERT Base and BERT Large. Each size offers a different trade-off between computing cost and performance.

BERT Base vs. BERT Large

BERT Base has 12 transformer blocks and 12 attention heads. It also has a hidden layer size of 768. On the other hand, BERT Large has 24 transformer blocks, 16 attention heads, and a hidden layer size of 1024. BERT Large usually does better in NLP tasks but needs more computing power.

Token, Segment, and Position Embeddings

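BERT’s input representation is the sum of three embeddings: token embeddings, which represent each WordPiece token; segment embeddings, which mark whether a token belongs to the first or the second sentence; and position embeddings, which encode where each token appears in the sequence. Every input starts with a special [CLS] token, and [SEP] tokens separate and end the sentences. Together these let BERT grasp the context and connections in text, making it effective for many NLP tasks.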
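The construction of a sentence-pair input can be sketched as follows, using the "my dog is cute / he likes playing" example from the BERT paper. The embedding tables here are small random stand-ins (a hypothetical dimension of 4 instead of 768 or 1024), and the helper names are illustrative; real BERT learns these tables and applies layer normalization and dropout to the summed vectors, which is omitted.

```python
import random


def build_inputs(tokens_a, tokens_b):
    """Lay out a sentence pair as [CLS] A [SEP] B [SEP] with segment and position ids."""
    tokens = ["[CLS]"] + tokens_a + ["[SEP]"] + tokens_b + ["[SEP]"]
    # Segment 0 covers [CLS], sentence A and the first [SEP]; segment 1 the rest.
    segment_ids = [0] * (len(tokens_a) + 2) + [1] * (len(tokens_b) + 1)
    position_ids = list(range(len(tokens)))
    return tokens, segment_ids, position_ids


def embed(tokens, segment_ids, position_ids, token_table, segment_table, position_table):
    """Element-wise sum of token, segment and position embeddings at each position."""
    return [
        [t + s + p for t, s, p in zip(token_table[tok], segment_table[seg], position_table[pos])]
        for tok, seg, pos in zip(tokens, segment_ids, position_ids)
    ]


DIM = 4  # toy size; BERT Base uses 768
rng = random.Random(0)


def random_vector():
    return [rng.uniform(-1.0, 1.0) for _ in range(DIM)]


vocab = ["[CLS]", "[SEP]", "my", "dog", "is", "cute", "he", "likes", "play", "##ing"]
token_table = {tok: random_vector() for tok in vocab}
segment_table = [random_vector() for _ in range(2)]    # sentence A, sentence B
position_table = [random_vector() for _ in range(16)]  # BERT allows up to 512 positions

tokens, segments, positions = build_inputs(["my", "dog", "is", "cute"], ["he", "likes", "play", "##ing"])
vectors = embed(tokens, segments, positions, token_table, segment_table, position_table)
print(tokens)
print(segments)  # [0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1]
```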

| Model | Transformer Blocks | Attention Heads | Hidden Layer Size | Parameters |
|---|---|---|---|---|
| BERT Base | 12 | 12 | 768 | 110M |
| BERT Large | 24 | 16 | 1024 | 340M |
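As a rough check on the parameter column, the encoder’s size can be counted layer by layer. This sketch assumes the configuration of the publicly released uncased checkpoints (a 30,522-token WordPiece vocabulary, 512 positions, 2 segment types, and a feed-forward size of four times the hidden size) and counts embeddings, encoder layers, and the [CLS] pooler, but not the pre-training output heads. The number of attention heads does not appear, because the heads split the hidden size between them (768 / 12 = 1024 / 16 = 64 dimensions per head).

```python
def bert_param_count(layers, hidden, intermediate, vocab_size=30522,
                     max_positions=512, type_vocab=2):
    """Count parameters of a BERT encoder: embeddings, layers and pooler.

    Excludes the pre-training heads (masked LM and next sentence prediction).
    """
    def dense(n_in, n_out):
        return n_in * n_out + n_out  # weight matrix plus bias

    layer_norm = 2 * hidden  # gain and bias vectors

    embeddings = (vocab_size + max_positions + type_vocab) * hidden + layer_norm
    per_layer = (
        3 * dense(hidden, hidden)        # query, key and value projections
        + dense(hidden, hidden)          # attention output projection
        + layer_norm
        + dense(hidden, intermediate)    # feed-forward expansion
        + dense(intermediate, hidden)    # feed-forward projection back
        + layer_norm
    )
    pooler = dense(hidden, hidden)       # transforms the [CLS] vector
    return embeddings + layers * per_layer + pooler


print(bert_param_count(layers=12, hidden=768, intermediate=3072))   # 109482240
print(bert_param_count(layers=24, hidden=1024, intermediate=4096))  # 335141888
```

The results, about 110M and 335M, match the table for BERT Base and come close to the 340M quoted for BERT Large; the exact figure shifts slightly with the vocabulary size and with which layers are counted.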

Choosing between BERT Base and BERT Large depends on the task: it’s a matter of balancing model complexity, available computing power, and the performance you need. Understanding how the two models differ makes that choice easier.

Natural Language Processing with BERT

BERT, developed by Google, has become a top choice for many natural language processing (NLP) tasks. Its key strength is that it produces contextual representations: the same word receives a different vector depending on the sentence it appears in, so BERT captures the meaning of each word in context.

One of BERT’s big strengths is its ability to capture complex relationships within sentences, thanks to its multi-layer encoder. This lets it handle understanding tasks such as sentiment analysis very well. BERT does not generate text on its own, so for language translation its encoder representations have to be combined with a separate decoder.

BERT is also very versatile: it can be fine-tuned for different tasks with only a small task-specific output layer. This has led to top scores on many NLP benchmarks, especially in question answering and natural language inference.

Using BERT makes language processing more accurate because it is good at working out the context of each word. This is especially helpful for ambiguous words, such as “bank” in “river bank” versus “bank account”, which also cause problems in language translation.

To use BERT, you first prepare your data: split the text into WordPiece tokens, add the special [CLS] and [SEP] tokens, and convert each token to its ID in the model’s vocabulary. Then you start from a pre-trained BERT checkpoint and fine-tune it on your specific task, using backpropagation and gradient descent.
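The data-preparation step can be sketched for a batch of single sentences, assuming the text has already been split into WordPiece tokens. The hypothetical `encode_batch` function adds [CLS] and [SEP], truncates to a maximum length, maps tokens to IDs, and pads shorter inputs, producing an attention mask that tells the model which positions are real tokens (1) and which are padding (0). The toy vocabulary is invented; in practice the tokenizer shipped with a pre-trained checkpoint, for example through Hugging Face Transformers, performs these steps.

```python
def encode_batch(token_lists, vocab, max_len, pad_token="[PAD]", unk_token="[UNK]"):
    """Convert tokenized single sentences into fixed-length ID lists with attention masks."""
    input_ids, attention_masks = [], []
    for tokens in token_lists:
        # Leave room for [CLS] and [SEP], truncating long inputs.
        tokens = ["[CLS]"] + tokens[: max_len - 2] + ["[SEP]"]
        ids = [vocab.get(t, vocab[unk_token]) for t in tokens]
        mask = [1] * len(ids)  # 1 = real token the model should attend to
        padding = max_len - len(ids)
        ids += [vocab[pad_token]] * padding
        mask += [0] * padding  # 0 = padding, ignored by attention
        input_ids.append(ids)
        attention_masks.append(mask)
    return input_ids, attention_masks


# Hypothetical toy vocabulary; real checkpoints ship their own vocab file.
vocab = {"[PAD]": 0, "[UNK]": 1, "[CLS]": 2, "[SEP]": 3, "great": 4, "movie": 5, "boring": 6}
ids, masks = encode_batch([["great", "movie"], ["boring"]], vocab, max_len=6)
print(ids)    # [[2, 4, 5, 3, 0, 0], [2, 6, 3, 0, 0, 0]]
print(masks)  # [[1, 1, 1, 1, 0, 0], [1, 1, 1, 0, 0, 0]]
```

Padding every sequence to the same length lets a whole batch be processed as one array, while the mask keeps the padding from influencing the attention scores.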

In short, BERT has revolutionized NLP. Its performance and versatility have opened up new possibilities for many applications. It’s a game-changer for advanced language understanding.

| NLP Task | BERT Performance |
|---|---|
| Question Answering | 93.2 F1 on SQuAD v1.1 |
| Natural Language Understanding | 7.6-point absolute improvement on the GLUE benchmark |
| Multiple Benchmarks | State-of-the-art results on 11 NLP tasks at release |
| Ambiguous Words | Contextual embeddings help with ambiguous and polysemous words (a qualitative strength, not a benchmark score) |

Task-specific Fine-tuning

BERT is designed to be flexible, so one pre-trained model can be fine-tuned into many task-specific models. Fine-tuning starts from the pre-trained weights, adds a small task-specific output layer, and trains on a smaller labeled dataset for the target task. This form of transfer learning lets BERT learn new tasks quickly and perform well.
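For single-sentence classification, the BERT paper adds one linear layer on the final hidden vector of the [CLS] token and trains with a softmax cross-entropy loss. The sketch below shows only that task-specific head, trained by per-example gradient descent on fixed, made-up [CLS] vectors; in real fine-tuning the same loss gradient also flows back into every encoder layer, which in practice is handled by a deep-learning framework. The function name, learning rate, and data are hypothetical.

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train_head(cls_vectors, labels, num_classes, lr=0.5, epochs=50):
    """Fit a linear softmax layer on [CLS] vectors with per-example gradient descent.

    Returns the weight matrix, the bias vector and the mean loss of each epoch.
    """
    dim = len(cls_vectors[0])
    W = [[0.0] * dim for _ in range(num_classes)]
    b = [0.0] * num_classes
    history = []
    for _ in range(epochs):
        total_loss = 0.0
        for h, y in zip(cls_vectors, labels):
            logits = [sum(w * x for w, x in zip(W[c], h)) + b[c] for c in range(num_classes)]
            probs = softmax(logits)
            total_loss += -math.log(probs[y])  # cross-entropy for the true class
            # d(loss)/d(logit_c) = probs[c] - 1 for the true class, probs[c] otherwise.
            for c in range(num_classes):
                g = probs[c] - (1.0 if c == y else 0.0)
                for i in range(dim):
                    W[c][i] -= lr * g * h[i]
                b[c] -= lr * g
        history.append(total_loss / len(labels))
    return W, b, history


# Made-up 3-dimensional "[CLS]" vectors; real BERT Base produces 768 dimensions.
cls_vectors = [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1], [0.1, 0.9, 0.2], [0.0, 0.7, 0.3]]
labels = [1, 1, 0, 0]  # 1 = positive review, 0 = negative review
W, b, history = train_head(cls_vectors, labels, num_classes=2)
print(f"mean loss: first epoch {history[0]:.3f}, last epoch {history[-1]:.3f}")
```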

Because BERT is easy to fine-tune, it’s very popular in NLP. Libraries such as Hugging Face Transformers provide pre-trained checkpoints and tools that make transfer learning accessible to everyone, helping small teams and researchers take on big NLP projects and build on language models like BERT.

The BERT fine-tuning method works for many tasks, such as text classification, question answering, and named entity recognition. By building on BERT’s deep understanding of language, developers can create models that are both accurate and flexible, opening up new areas in natural language processing.
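For extractive question answering such as SQuAD, the BERT paper introduces a start vector and an end vector during fine-tuning: each passage token gets a start score and an end score, and the predicted answer is the span from token i to token j (with j ≥ i) that maximizes the sum of its start and end scores. The sketch below covers only that span-selection step, assuming the per-token start and end logits have already been computed; the logits and the `best_span` helper are hypothetical, and real systems also exclude question tokens and special tokens from the candidate spans.

```python
def best_span(start_logits, end_logits, max_answer_len=30):
    """Return (start, end, score) maximizing start_logits[i] + end_logits[j] with i <= j.

    Spans longer than max_answer_len tokens are not considered.
    """
    best = None
    for i, s in enumerate(start_logits):
        for j in range(i, min(i + max_answer_len, len(end_logits))):
            score = s + end_logits[j]
            if best is None or score > best[2]:
                best = (i, j, score)
    return best


tokens = ["[CLS]", "who", "wrote", "it", "[SEP]", "it", "was", "written", "by", "jane", "doe", "[SEP]"]
# Hypothetical logits, as if produced by the fine-tuned start/end output layer.
start_logits = [0.1, 0.0, 0.0, 0.0, 0.0, 0.2, 0.1, 0.0, 0.3, 2.5, 0.4, 0.0]
end_logits = [0.1, 0.0, 0.0, 0.0, 0.0, 0.0, 0.1, 0.2, 0.0, 0.9, 2.7, 0.0]
i, j, score = best_span(start_logits, end_logits)
print(tokens[i : j + 1])  # ['jane', 'doe']
```

The `j >= i` constraint matters: the single highest end score may sit before the best start, and such a span would be meaningless.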

Conclusion

BERT was introduced in 2018 and changed the game in natural language processing (NLP). It combines the Transformer encoder with deep bidirectional pre-training, which has led to strong results on many language understanding tasks, such as text classification, question answering, and natural language inference.

Its ability to grasp context and adapt to different tasks has made BERT a key tool in AI research and industry. This has opened up new possibilities in areas like machine translation and sentiment analysis.

The future of BERT and NLP looks bright. Ongoing research aims to make these models even better. As AI and machine learning grow, BERT and other NLP advancements will be key in how we interact with digital information.

BERT’s success shows the power of new approaches to language modeling. It has the potential to change many industries and applications. As NLP advances, BERT’s impact will likely grow, helping AI systems understand and use human language better.