How do transformers work in NLP tasks like translation and text generation?

Transformer models have reshaped natural language processing (NLP), particularly machine translation and text generation. They use attention mechanisms to model the relationships between words, and they process all positions of a sequence in parallel. This approach has produced state-of-the-art results on many NLP tasks and changed how AI systems interpret and generate human language.

At the heart of transformer models is their ability to build context-aware representations: the vector for each word depends on the words around it. This lets them capture meaning and nuance more accurately. By focusing on word relationships, they can track context and produce more natural-sounding text. This is especially helpful for translation and text generation, where preserving the original meaning is key.

The transformer family has grown quickly in recent years, with well-known models such as BERT, GPT, and its larger successor GPT-3. These models have greatly improved natural language understanding and generation. As researchers keep refining the architecture, further advances in how AI understands and produces human language are likely.

Introduction to Transformers

Since their introduction in 2017, transformers have become the dominant architecture in NLP. They excel in tasks like machine translation, text generation, and language modeling. Their success comes from the attention mechanism at the core of their design, which lets them take the surrounding context into account for every word.

History and Evolution of Transformer Models

Vaswani et al. introduced the transformer architecture in 2017 in the paper "Attention Is All You Need", with machine translation as the target task. Since then, models like GPT, BERT, and T5 have emerged. These models have greatly advanced language understanding and generation and have become central to NLP.

Key Advantages of Transformer Architecture

Transformers have several advantages over earlier architectures such as RNNs and CNNs. An RNN reads a sequence one token at a time, whereas a transformer processes all positions in parallel, which makes training much faster on modern hardware. Self-attention also connects any two words directly, no matter how far apart they are, which helps the model handle long-range context. Finally, the same architecture adapts easily to different tasks, which makes it versatile.

Transformers’ flexibility and scalability have made them leaders in NLP. They’ve led to major breakthroughs in language modeling, text generation, and machine translation.

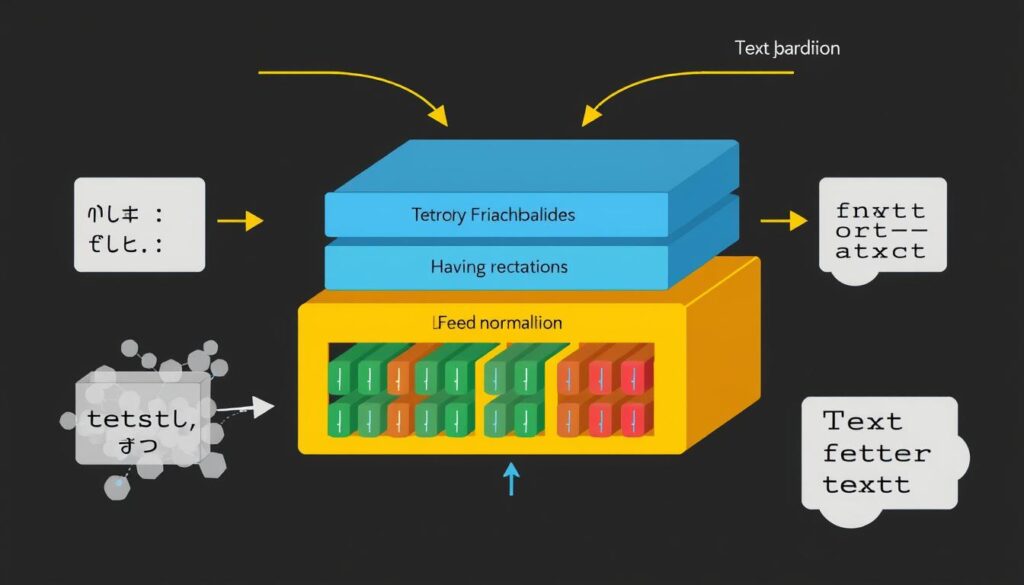

Transformer Architecture Overview

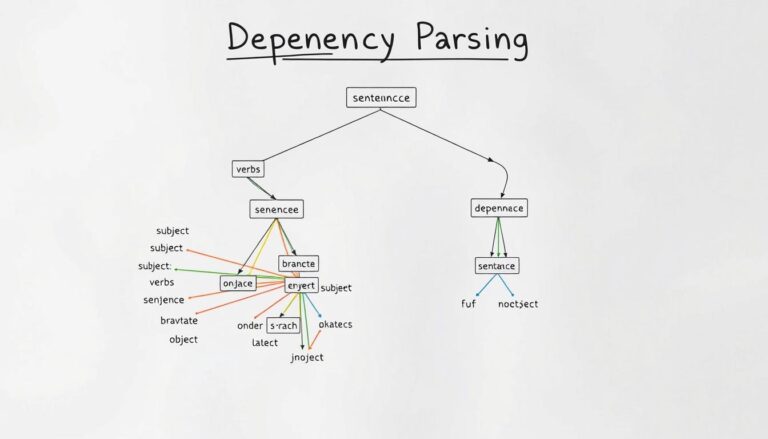

The transformer architecture is built on the encoder and decoder components. The encoder takes in a sequence and creates a detailed representation. This representation captures the data’s nuances and relationships.

The decoder uses this representation and the previous output to create the target sequence.
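The loop in which the decoder feeds its own previous output back in can be sketched in a few lines of Python. This is only an illustration of the control flow, not a real model: `encode`, `decode_step`, and `TOY_LEXICON` are hypothetical stand-ins for trained networks, and the word-for-word lookup is an assumption made to keep the example runnable.

```python
BOS, EOS = "<s>", "</s>"  # start and end markers (illustrative names)

# Hypothetical word-for-word lexicon standing in for learned weights.
TOY_LEXICON = {"hello": "bonjour", "world": "monde"}

def encode(source_tokens):
    """Stand-in encoder: a real one returns one context vector per token."""
    return list(source_tokens)

def decode_step(memory, generated):
    """Stand-in decoder step: pick the next token from the encoder output
    (memory) and the tokens produced so far."""
    position = len(generated) - 1  # do not count the BOS marker
    if position >= len(memory):
        return EOS
    return TOY_LEXICON.get(memory[position], memory[position])

def greedy_decode(source_tokens, max_len=10):
    """Generate the target sequence one token at a time."""
    memory = encode(source_tokens)
    generated = [BOS]
    for _ in range(max_len):
        next_token = decode_step(memory, generated)
        if next_token == EOS:
            break
        generated.append(next_token)  # fed back in on the next step
    return generated[1:]  # drop the BOS marker

translation = greedy_decode(["hello", "world"])
print(translation)  # ['bonjour', 'monde']
```

In a real transformer, `decode_step` would run the decoder layers and return the most probable next token. The surrounding loop keeps the same shape.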

Encoder and Decoder Blocks

The encoder and the decoder are each a stack of identical layers. Every layer combines a self-attention mechanism with a feed-forward network, and decoder layers additionally attend to the encoder’s output. Self-attention is key to transformers’ success.

It lets each element of the sequence draw information from every other element. This way, the model captures long-range dependencies and contextual information.

Self-Attention Mechanism

The self-attention mechanism is central to the transformer architecture. For each word, it computes a weight for every word in the sequence, indicating how much that word should contribute to the current word’s representation. This is crucial for tasks like machine translation and text generation.
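To make the weighting concrete, here is a minimal sketch of scaled dot-product attention, the form of self-attention used in the original transformer, written with only the Python standard library. The three toy embeddings are made-up numbers, and for brevity they serve directly as queries, keys, and values. A real layer would first apply learned projection matrices and use several attention heads.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score this position against every position in the sequence.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        mixed = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Toy 3-token sequence with 2-dimensional embeddings (made-up numbers).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)

for row in weights:
    print([round(w, 3) for w in row])  # each row sums to 1
```

Each row of `weights` shows how strongly one token attends to every token in the sequence. Because no row depends on another, all rows can be computed at the same time, which is where the parallelism comes from.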

Transformers have changed natural language processing. They offer a more efficient way to process and generate text. The encoder-decoder structure and self-attention mechanism have led to remarkable performance in NLP applications.

Natural Language Processing with Transformers

Modern transformer models learn from huge amounts of text data. Through self-supervised pre-training, in which the training signal comes from the text itself rather than from human labels, they acquire broad knowledge of language.

Language Modeling and Pre-training

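During pre-training, a transformer learns general patterns of language, for example by predicting the next word or a hidden word in a sentence. It can then reuse this knowledge for many NLP tasks. This approach is central to today’s NLP because one pre-trained model can serve as the starting point for many different tasks.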
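As a rough illustration of the language-modeling objective, the sketch below builds next-token training pairs from a sentence and scores a model with cross-entropy loss. The vocabulary, the token ids, and the uniform "untrained model" are all assumptions chosen for the example. Masked-word objectives such as the one BERT uses follow the same idea with different targets.

```python
import math

def next_token_pairs(token_ids):
    """Self-supervised targets: each token's label is the token after it."""
    return list(zip(token_ids[:-1], token_ids[1:]))

def cross_entropy(predicted_probs, target_id):
    """Negative log-probability the model gave to the correct next token."""
    return -math.log(predicted_probs[target_id])

# Toy vocabulary and sentence (hypothetical ids).
vocab = ["the", "cat", "sat", "down"]
sentence = [0, 1, 2, 3]  # "the cat sat down"

pairs = next_token_pairs(sentence)
print(pairs)  # [(0, 1), (1, 2), (2, 3)]

# An untrained model might spread probability evenly over the vocabulary.
uniform = [1.0 / len(vocab)] * len(vocab)
loss = sum(cross_entropy(uniform, target) for _, target in pairs) / len(pairs)
print(round(loss, 3))  # 1.386, i.e. log(4)
```

No human labeling is needed: the text supplies its own targets. Training lowers this loss by shifting probability toward the words that actually come next.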

Models pre-trained on large text corpora show strong general language ability. They can then be fine-tuned, a form of transfer learning, for specific tasks, which is where much of their practical value comes from.

Experts like Jeremy Howard and Christopher Manning see transformers as game-changers. They’re used in many NLP areas, from writing news to making chatbots.

Transformer models are crucial in NLP today. They use language modeling and pre-training to solve complex problems. This makes NLP work more efficient and accurate.

Transformer Applications in Translation and Text Generation

Transformers excel at machine translation and text generation because they can model long-range dependencies and capture contextual information.

Models like GPT and T5 have set new standards in language generation. They achieve top results in summarization, question answering, and creative writing. Their versatility makes them a favorite in both research and industry.

In machine translation, transformers have had a large impact. For some language pairs and kinds of text, their output approaches the quality of human translation. This has opened up new ways for people to communicate across languages.

Transformers are now key in text generation and NLP. As research keeps moving forward, we’ll see even more from these AI tools. They’re shaping the future of AI in language.

Training and Fine-tuning Transformer Models

Training large transformer models from scratch is expensive. It needs vast amounts of data and computing power. For this reason, transfer learning and fine-tuning have become standard practice with transformers.

Experts use pre-trained models like BERT or GPT. They then fine-tune these models on smaller datasets for specific tasks. This way, they get great results with less data and less computer power. It makes working with transformers easier and more practical for real-world tasks.

Transfer Learning and Fine-tuning

The steps for transfer learning and fine-tuning are:

- Begin with a pre-trained model, like BERT or GPT, trained on big datasets like Wikipedia or BookCorpus.

- Adapt the model to a specific task by fine-tuning it on a smaller dataset. This usually means adding a small task-specific output layer and continuing training on the new data.

- Use the fine-tuned model on the target task. It performs better there than the pre-trained model alone, and getting to that point takes much less data and computing power than training from scratch.
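The steps above can be sketched with a toy example. Note the assumptions: `pretrained_features` is a hypothetical stand-in for a pre-trained model such as BERT, and the sketch uses the simplest variant of transfer learning, in which the pre-trained part stays frozen and only a new output layer is trained. Full fine-tuning would also update the pre-trained weights.

```python
import math

def pretrained_features(text):
    """Hypothetical frozen 'pre-trained' encoder mapping text to a vector.
    A real model would return learned contextual embeddings."""
    words = text.lower().split()
    positive = sum(w in {"great", "good", "love"} for w in words)
    negative = sum(w in {"bad", "poor", "hate"} for w in words)
    return [float(positive), float(negative)]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fine_tune_head(examples, epochs=200, lr=0.5):
    """Train only a new linear output layer on top of the frozen features."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for text, label in examples:
            x = pretrained_features(text)
            pred = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            error = pred - label  # gradient of the log loss w.r.t. the logit
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias

def predict(text, weights, bias):
    """Probability that the text is positive, according to the new layer."""
    x = pretrained_features(text)
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

# A tiny labeled dataset for the target task (1 = positive, 0 = negative).
train = [("great movie", 1), ("love it", 1), ("bad plot", 0), ("poor acting", 0)]
weights, bias = fine_tune_head(train)

print(predict("good film", weights, bias) > 0.5)  # True
print(predict("hate it", weights, bias) > 0.5)    # False
```

Four labeled examples are enough here only because the features already encode something useful. That is the point of transfer learning: the expensive general learning happens once, during pre-training.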

With transfer learning and fine-tuning, practitioners can apply transformers to many NLP tasks, including text classification and machine translation. Because the model starts from what it learned in pre-training, it needs far fewer labeled examples to reach good performance.

Conclusion

Transformer models have reshaped natural language processing. They have driven major progress in machine translation, text generation, and language understanding. Their design uses self-attention to capture context, which has let them outperform earlier recurrent and convolutional approaches and become the default choice in NLP.

These models can learn from large datasets without labeled examples. Then, they can be fine-tuned for specific tasks. This makes them a go-to choice for many practitioners in language-based AI. As they keep improving, they are likely to remain central to natural language processing and to AI more broadly.

Transformer models are changing how companies work with data and improve customer service. They’re used in many areas, from translating languages to understanding emotions in text. Their role in AI is growing, showing how important they are for the future of tech.