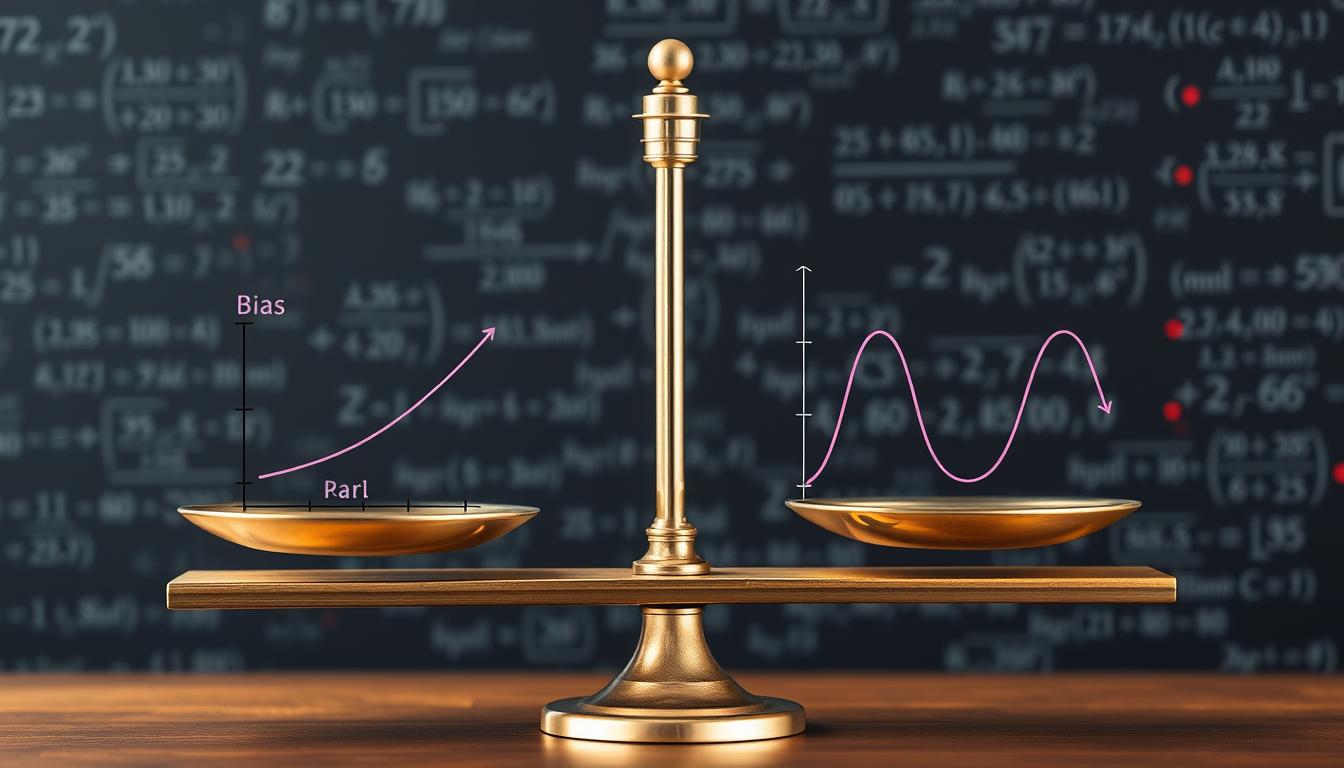

What is the bias-variance tradeoff in machine learning?

The bias-variance tradeoff is a core idea in machine learning. It helps explain why models make errors on data they have not seen. By understanding bias and variance, we can choose a level of model complexity that balances the two. This leads to models that perform well on both training and test data.

In this article, we’ll explore bias and variance in detail. We’ll also look at how to balance them for better predictive models. This is important in machine learning, data mining, predictive analytics, and pattern recognition.

Understanding Prediction Errors: Bias and Variance

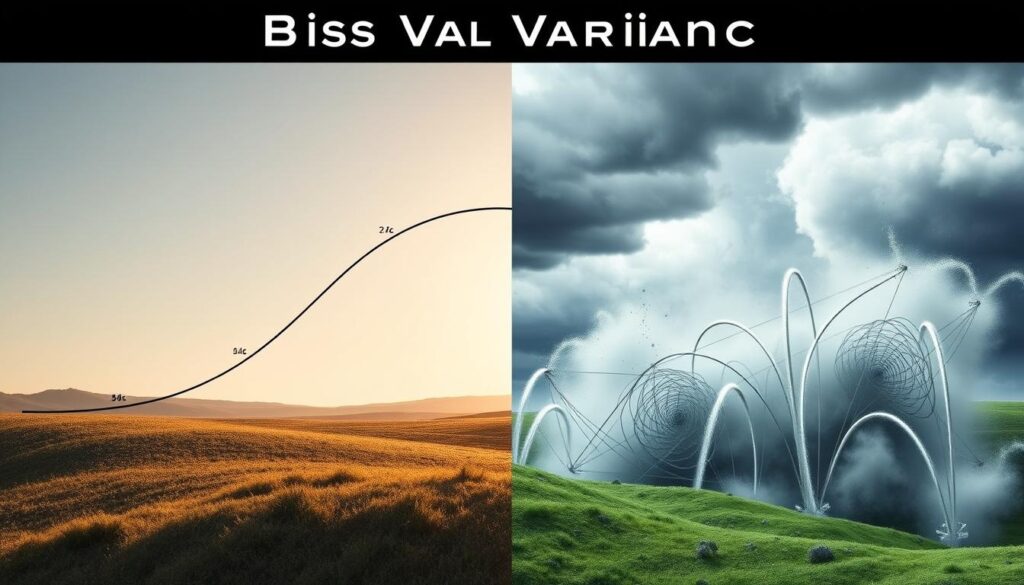

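In machine learning, bias and variance are the two sources of prediction error we can influence when improving a model. Bias is the gap between a model’s average prediction (averaged over models trained on different samples of data) and the true value. High bias usually means the model is too simple for the problem, which leads to poor performance.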

Variance is how much a model’s prediction for the same data point changes when the model is trained on different samples of data. High-variance models are often too complex and fit the training data too closely. They do well on training data but poorly on new data.

What is Bias?

Bias is a systematic error in a model’s predictions. It shows the model cannot capture the data’s true patterns. High-bias models make overly strong assumptions and pay too little attention to the training data, leading to high error on both the training and test sets.

What is Variance?

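Variance measures how sensitive a model is to the particular training data it sees. A high-variance model picks up small fluctuations, including noise, so retraining it on a slightly different sample can change its predictions substantially. This is why such models can score well on training data yet generalize poorly.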

Finding the right balance between bias and variance is crucial. This balance, known as the bias-variance tradeoff, affects a model’s prediction accuracy, its complexity, and its ability to generalize.
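To make these definitions concrete, here is a minimal sketch in Python with NumPy. It assumes a hypothetical true function, sin(2πx), observed with Gaussian noise; it trains one model per independent training set and measures the bias² and variance of the resulting predictions at a single point x0. The helper names (`true_f`, `predictions_at`) and all settings are illustrative choices, not taken from a specific library or study.

```python
import numpy as np
from numpy.polynomial import Polynomial


def true_f(x):
    # Hypothetical "true" function, chosen only for illustration.
    return np.sin(2 * np.pi * x)


def predictions_at(x0, degree, n_sets=200, n_points=30, noise_sd=0.5, seed=0):
    """Fit one polynomial per independent training set and return
    every fitted model's prediction at the single point x0."""
    rng = np.random.default_rng(seed)
    preds = np.empty(n_sets)
    for i in range(n_sets):
        x = rng.uniform(0.0, 1.0, n_points)
        y = true_f(x) + rng.normal(0.0, noise_sd, n_points)
        model = Polynomial.fit(x, y, degree)  # least-squares polynomial fit
        preds[i] = model(x0)
    return preds


x0 = 0.25
results = {}
for degree in (1, 9):
    p = predictions_at(x0, degree)
    bias_sq = (p.mean() - true_f(x0)) ** 2  # squared gap: average prediction vs. truth
    variance = p.var()                      # spread of predictions across training sets
    results[degree] = (bias_sq, variance)
    print(f"degree {degree}: bias^2 = {bias_sq:.3f}, variance = {variance:.3f}")
```

In a typical run the straight line (degree 1) should show the larger bias², while the degree-9 polynomial should show the larger variance.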

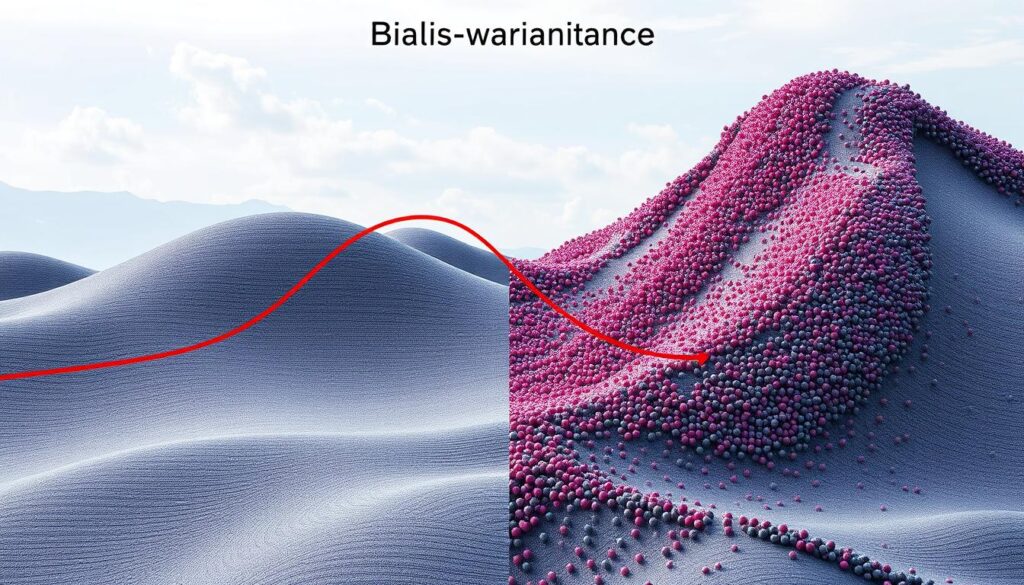

The Bias-Variance Tradeoff Explained

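The bias-variance tradeoff describes the balance between simplicity and complexity in a model. Making a model more flexible usually lowers its bias but raises its variance, and making it simpler does the opposite. Where a model sits on this spectrum determines how well it predicts unseen outcomes.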

Underfitting: High Bias, Low Variance

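Underfitting means a model cannot capture the data’s true pattern. The model is too simple, with high bias and low variance. This typically happens when the model is too restrictive for the problem, for example when a linear model is fit to non-linear data, when important features are missing, or when regularization is too strong.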

Overfitting: Low Bias, High Variance

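On the other hand, overfitting occurs when a model fits the noise in the training data as if it were signal. It has low bias and high variance. This is common in very flexible models, such as deep, unpruned decision trees or high-degree polynomials, especially when training data is limited.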

To get the best model, we need to find a middle ground. The model should be complex enough to understand the data but not so complex that it overfits. This balance is crucial for accurate predictions.
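A minimal sketch of underfitting versus overfitting, again assuming a hypothetical sin(2πx) ground truth with Gaussian noise: polynomials of degree 1, 4, and 15 are fit to the same 20 training points and scored on a large held-out test set. The degrees and sample sizes are illustrative assumptions.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(42)


def true_f(x):
    # Hypothetical ground truth used only for this illustration.
    return np.sin(2 * np.pi * x)


def make_data(n, noise_sd=0.3):
    x = rng.uniform(0.0, 1.0, n)
    return x, true_f(x) + rng.normal(0.0, noise_sd, n)


x_train, y_train = make_data(20)
x_test, y_test = make_data(1000)

errors = {}
for degree in (1, 4, 15):
    model = Polynomial.fit(x_train, y_train, degree)
    train_mse = np.mean((model(x_train) - y_train) ** 2)
    test_mse = np.mean((model(x_test) - y_test) ** 2)
    errors[degree] = (train_mse, test_mse)
    print(f"degree {degree:2d}: train MSE = {train_mse:.3f}, test MSE = {test_mse:.3f}")
```

Training error can only fall as the degree rises (the models are nested), but test error is typically lowest at the intermediate degree: degree 1 underfits and degree 15 overfits.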

Bias-Variance Decomposition of Mean Squared Error

The bias-variance decomposition makes the tradeoff precise. It breaks an algorithm’s expected error on a new point, averaged over possible training sets, into three parts: bias, variance, and irreducible error.

The bias measures how far the algorithm’s average prediction is from the true function. The variance measures how much its predictions change across different training sets. The irreducible error is the noise in the data that no model can remove.

The formula for the bias-variance decomposition of mean squared error (MSE) is: MSE = Bias² + Variance + Noise. Here, Noise is the irreducible error.
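For squared error, the formula can be derived in a few lines. The derivation below assumes targets are generated as y = f(x) + ε, where ε has mean zero and variance σ² and is independent of the training set D, and that expectations are taken over D and ε at a fixed input x. The symbols f, f̂, D, ε, and σ² are notation introduced for this sketch.

```latex
\begin{aligned}
\mathbb{E}_{D,\varepsilon}\!\left[(y - \hat f(x))^2\right]
  &= \mathbb{E}\!\left[(f(x) - \hat f(x))^2\right]
   + 2\,\mathbb{E}\!\left[\varepsilon\,(f(x) - \hat f(x))\right]
   + \mathbb{E}\!\left[\varepsilon^2\right] \\
  &= \mathbb{E}\!\left[(f(x) - \hat f(x))^2\right] + \sigma^2 \\
  &= \underbrace{\left(\mathbb{E}[\hat f(x)] - f(x)\right)^2}_{\text{Bias}^2}
   + \underbrace{\mathbb{E}\!\left[\left(\hat f(x) - \mathbb{E}[\hat f(x)]\right)^2\right]}_{\text{Variance}}
   + \underbrace{\sigma^2}_{\text{Noise}}
\end{aligned}
```

The cross terms vanish because ε has mean zero and is independent of f̂(x), and because f̂(x) minus its own expectation has mean zero.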

| Component | Description |
|---|---|
| Bias | The systematic error in the model’s predictions, reflecting how well the model approximates the true underlying function. |
| Variance | The variability of the model’s predictions, reflecting the sensitivity of the model to fluctuations in the training data. |
| Noise | The irreducible error due to inherent randomness or uncertainty in the data, which cannot be eliminated by the model. |

This decomposition helps us see where errors come from in a model. By balancing bias and variance, we can improve our model’s performance and ability to generalize.
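The decomposition can also be checked numerically. This sketch assumes the same kind of hypothetical setup (sin(2πx) plus Gaussian noise with a known standard deviation), fits a cubic polynomial to many independent training sets, and compares the average squared error against fresh noisy targets at one point with bias² + variance + noise. The point x0, the degree, and the sample sizes are arbitrary choices.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(1)
noise_sd = 0.3            # known noise level, so Noise = noise_sd**2
x0 = 0.4                  # arbitrary evaluation point
n_sets, n_points, degree = 5000, 25, 3


def true_f(x):
    # Hypothetical ground truth used only for this illustration.
    return np.sin(2 * np.pi * x)


preds = np.empty(n_sets)
sq_errors = np.empty(n_sets)
for i in range(n_sets):
    x = rng.uniform(0.0, 1.0, n_points)
    y = true_f(x) + rng.normal(0.0, noise_sd, n_points)
    preds[i] = Polynomial.fit(x, y, degree)(x0)
    # Score against a fresh noisy target at x0, independent of this training set.
    y0 = true_f(x0) + rng.normal(0.0, noise_sd)
    sq_errors[i] = (y0 - preds[i]) ** 2

mse = sq_errors.mean()
bias_sq = (preds.mean() - true_f(x0)) ** 2
variance = preds.var()
noise = noise_sd ** 2
print(f"MSE = {mse:.4f}")
print(f"bias^2 + variance + noise = {bias_sq + variance + noise:.4f}")
```

The two printed numbers should agree up to Monte Carlo error, which shrinks as `n_sets` grows.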

Machine Learning and the Bias-Variance Dilemma

In machine learning, the bias-variance tradeoff is a central challenge. For a fixed amount of data, it is hard to lower both bias and variance at the same time: changes that make a model more flexible tend to reduce bias but increase variance. High-variance models fit the training data well but may overfit, while high-bias models may miss important patterns in the data, leading to underfitting.

Balancing Bias and Variance for Optimal Performance

Finding the right balance between bias and variance is key to good predictions. Underfit models are too simple and miss important structure in the data. Overfit models are too complex and sensitive to noise, so they fail to generalize well.

To improve model performance, it’s important to manage the bias-variance tradeoff well. This balance is crucial for models to work well on new data, not just the training set.

Choosing the right model is complex because different algorithms have different biases and variances. For instance, linear regression tends to have high bias and low variance, while fully grown (unpruned) decision trees tend to have low bias but high variance. Knowing these traits helps pick the best model for a problem.

Understanding the bias-variance tradeoff helps data scientists make better choices. They can select models, engineer features, and use regularization techniques. This way, they can get the most out of their machine learning projects.
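One common way to make the model-selection choice in practice is k-fold cross-validation, which the article does not name but which fits this step: each candidate is trained on k-1 folds and scored on the held-out fold. The sketch below, on hypothetical noisy sin(2πx) data, uses it to choose a polynomial degree; `cv_mse` is a helper written only for this example.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(7)
x = rng.uniform(0.0, 1.0, 60)
y = np.sin(2 * np.pi * x) + rng.normal(0.0, 0.3, 60)  # hypothetical noisy data


def cv_mse(x, y, degree, k=5):
    """Mean validation MSE of a polynomial of the given degree over k folds."""
    idx = np.arange(len(x))
    folds = np.array_split(idx, k)  # points were drawn i.i.d., so order is already random
    scores = []
    for fold in folds:
        train = np.setdiff1d(idx, fold)
        model = Polynomial.fit(x[train], y[train], degree)
        scores.append(np.mean((model(x[fold]) - y[fold]) ** 2))
    return float(np.mean(scores))


cv_scores = {d: cv_mse(x, y, d) for d in range(1, 13)}
best_degree = min(cv_scores, key=cv_scores.get)
print(f"degree chosen by 5-fold CV: {best_degree}")
```

Low degrees are penalized for bias and very high degrees for variance, so the selected degree usually lands in between.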

Strategies to Reduce Bias and Variance

Managing the bias-variance tradeoff is a practical challenge, and data scientists use several strategies to control both bias and variance. These lead to models that are accurate and generalize well to new data.

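Regularization helps reduce variance. It adds a penalty term to the model’s cost function that discourages overly complex fits, for example large coefficient values. This helps prevent overfitting and improves generalization, usually at the cost of a small increase in bias.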
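Ridge regression (an L2 penalty) is one concrete form of regularization. This sketch fits a degree-9 polynomial with the closed-form ridge solution w = (XᵀX + λP)⁻¹Xᵀy, where P is the identity matrix with a zero in the intercept position so the intercept is not penalized, and measures how bias² and variance at one point change with the penalty strength λ. The data, helpers, and λ values are hypothetical.

```python
import numpy as np


def poly_features(x, degree):
    # Hypothetical feature map: columns 1, t, t^2, ..., t^degree with t = 2x - 1 in [-1, 1].
    t = 2.0 * x - 1.0
    return np.vander(t, degree + 1, increasing=True)


def ridge_fit(X, y, lam):
    # Closed-form ridge: minimise ||y - Xw||^2 + lam * ||w[1:]||^2 (intercept not penalised).
    penalty = lam * np.eye(X.shape[1])
    penalty[0, 0] = 0.0
    return np.linalg.solve(X.T @ X + penalty, X.T @ y)


def bias_variance_at(lam, x0=0.25, degree=9, n_sets=200, n_points=30, noise_sd=0.5, seed=0):
    rng = np.random.default_rng(seed)  # same seed => same training sets for every lam
    X0 = poly_features(np.array([x0]), degree)
    preds = np.empty(n_sets)
    for i in range(n_sets):
        x = rng.uniform(0.0, 1.0, n_points)
        y = np.sin(2 * np.pi * x) + rng.normal(0.0, noise_sd, n_points)
        w = ridge_fit(poly_features(x, degree), y, lam)
        preds[i] = (X0 @ w)[0]
    bias_sq = (preds.mean() - np.sin(2 * np.pi * x0)) ** 2
    return bias_sq, preds.var()


results = {}
for lam in (0.0, 1.0, 100.0):
    results[lam] = bias_variance_at(lam)
    print(f"lambda = {lam:>5}: bias^2 = {results[lam][0]:.3f}, variance = {results[lam][1]:.3f}")
```

As λ grows, the variance should fall and the bias² should rise, which is the tradeoff described in the paragraph above.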

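Ensemble methods combine several models to improve predictive performance, and different ensembles target different sources of error. Bagging (random forests are a well-known example) mainly reduces variance by averaging models trained on resampled data, while boosting mainly reduces bias by fitting models sequentially to the remaining errors. Stacking combines different model types and can help with either, depending on the base models.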
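Bagging is easiest to see with an unstable learner. Decision trees are the usual choice, but to keep the sketch short it swaps in 1-nearest-neighbor regression, which is similarly low-bias and high-variance. Each bagged model is trained on a bootstrap sample (drawn with replacement) and the predictions are averaged. The data, the learner, and all settings are illustrative assumptions.

```python
import numpy as np


def one_nn_predict(x_train, y_train, x_query):
    # 1-nearest-neighbour regression: copy the target of the closest training point.
    nearest = np.abs(x_query[:, None] - x_train[None, :]).argmin(axis=1)
    return y_train[nearest]


def bagged_predict(x_train, y_train, x_query, n_models, rng):
    # Bagging: average the base learner over bootstrap resamples of the training set.
    n = len(x_train)
    total = np.zeros(len(x_query))
    for _ in range(n_models):
        boot = rng.integers(0, n, size=n)  # indices drawn with replacement
        total += one_nn_predict(x_train[boot], y_train[boot], x_query)
    return total / n_models


rng = np.random.default_rng(3)
x0 = np.array([0.25])
single, bagged = [], []
for _ in range(300):  # 300 independent training sets (hypothetical data)
    x = rng.uniform(0.0, 1.0, 40)
    y = np.sin(2 * np.pi * x) + rng.normal(0.0, 0.5, 40)
    single.append(one_nn_predict(x, y, x0)[0])
    bagged.append(bagged_predict(x, y, x0, n_models=50, rng=rng)[0])

var_single, var_bagged = np.var(single), np.var(bagged)
print(f"variance at x0: single 1-NN = {var_single:.3f}, bagged 1-NN = {var_bagged:.3f}")
```

Averaging over bootstrap samples effectively blends several nearby neighbors instead of trusting a single one, which is where the variance reduction comes from.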

Feature engineering also helps manage bias and variance. Removing irrelevant or redundant features simplifies the model, which reduces variance. Adding informative features, on the other hand, can reduce bias by helping the model capture patterns it would otherwise miss.
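The variance cost of unnecessary features can be seen with ordinary least squares. In this hypothetical setup the target depends on two features, and 30 extra features are pure noise. The sketch compares the test error of a model that uses all 32 features with one that uses only the two relevant ones; the coefficients and sample sizes are made-up values for illustration.

```python
import numpy as np

rng = np.random.default_rng(5)
n_train, n_test, n_noise = 50, 2000, 30


def make_data(n):
    relevant = rng.normal(size=(n, 2))
    irrelevant = rng.normal(size=(n, n_noise))  # hypothetical features unrelated to y
    y = 3.0 * relevant[:, 0] - 2.0 * relevant[:, 1] + rng.normal(0.0, 1.0, n)
    return np.hstack([relevant, irrelevant]), y


X_train, y_train = make_data(n_train)
X_test, y_test = make_data(n_test)


def ols_test_mse(cols):
    # Fit ordinary least squares (with intercept) on the chosen columns, score on the test set.
    A = np.column_stack([np.ones(n_train), X_train[:, cols]])
    w, *_ = np.linalg.lstsq(A, y_train, rcond=None)
    B = np.column_stack([np.ones(n_test), X_test[:, cols]])
    return float(np.mean((B @ w - y_test) ** 2))


mse_all = ols_test_mse(list(range(2 + n_noise)))
mse_selected = ols_test_mse([0, 1])
print(f"test MSE with all 32 features: {mse_all:.2f}")
print(f"test MSE with the 2 relevant features: {mse_selected:.2f}")
```

With only 50 training rows, the 30 extra coefficients are estimated from noise, which inflates variance and therefore test error.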

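Increasing the size of the training dataset also helps. With more data, the fitted model depends less on the quirks of any particular sample, which lowers variance and improves generalization to new data. More data does not, however, fix the bias of a model that is too simple for the problem.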
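A sketch of the effect of more data, under the same kind of hypothetical sin(2πx) setup: a straight line (a deliberately too-simple model) is trained on many independent training sets of size 20, 80, and 320, and the bias² and variance of its prediction at one point are measured. The model, point, and sizes are illustrative assumptions.

```python
import numpy as np
from numpy.polynomial import Polynomial


def bias_variance_vs_n(n_points, x0=0.25, degree=1, n_sets=300, noise_sd=0.5, seed=0):
    # Train a straight line (degree 1, hypothetical choice) on many independent training sets.
    rng = np.random.default_rng(seed)
    preds = np.empty(n_sets)
    for i in range(n_sets):
        x = rng.uniform(0.0, 1.0, n_points)
        y = np.sin(2 * np.pi * x) + rng.normal(0.0, noise_sd, n_points)
        preds[i] = Polynomial.fit(x, y, degree)(x0)
    bias_sq = (preds.mean() - np.sin(2 * np.pi * x0)) ** 2
    return bias_sq, preds.var()


stats = {}
for n in (20, 80, 320):
    stats[n] = bias_variance_vs_n(n)
    print(f"n = {n:3d}: bias^2 = {stats[n][0]:.3f}, variance = {stats[n][1]:.4f}")
```

Variance should shrink roughly in proportion to 1/n, while bias² stays at about the same level, because a straight line cannot represent sin(2πx) no matter how much data it sees.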

By using these techniques together, data scientists can balance bias and variance. This results in machine learning models that are accurate and robust in the real world.

| Technique | Description | Impact on Bias and Variance |
|---|---|---|
| Regularization | Adds a penalty term to the model’s cost function to control model complexity | Reduces variance, can slightly increase bias |
| Ensemble Methods | Combines multiple models to leverage their strengths and improve overall predictive performance | Bagging mainly reduces variance; boosting mainly reduces bias |
| Feature Engineering | Selects the most informative predictors and removes irrelevant or redundant features | Removing features reduces variance; adding informative features can reduce bias |
| Increasing Training Data | Provides more information for the model to learn from, leading to better generalization | Reduces variance; bias of a fixed model family stays largely unchanged |

Conclusion

The bias-variance tradeoff is key in machine learning. It shows the need for models that work well on new data. By managing this tradeoff, data scientists can make algorithms better at predicting outcomes.

Getting the right balance in a model is essential, and techniques like feature engineering and regularization help make models more accurate and reliable. As AI grows, it is also important to watch for its broader risks and for social biases in its outputs, which are a different notion from the statistical bias discussed here.

The machine learning field needs to be open and collaborative. This way, we can use AI’s benefits while avoiding its downsides. Teaching the next generation about AI is crucial. They need to understand how to use it wisely.