How do support vector machines (SVMs) work?

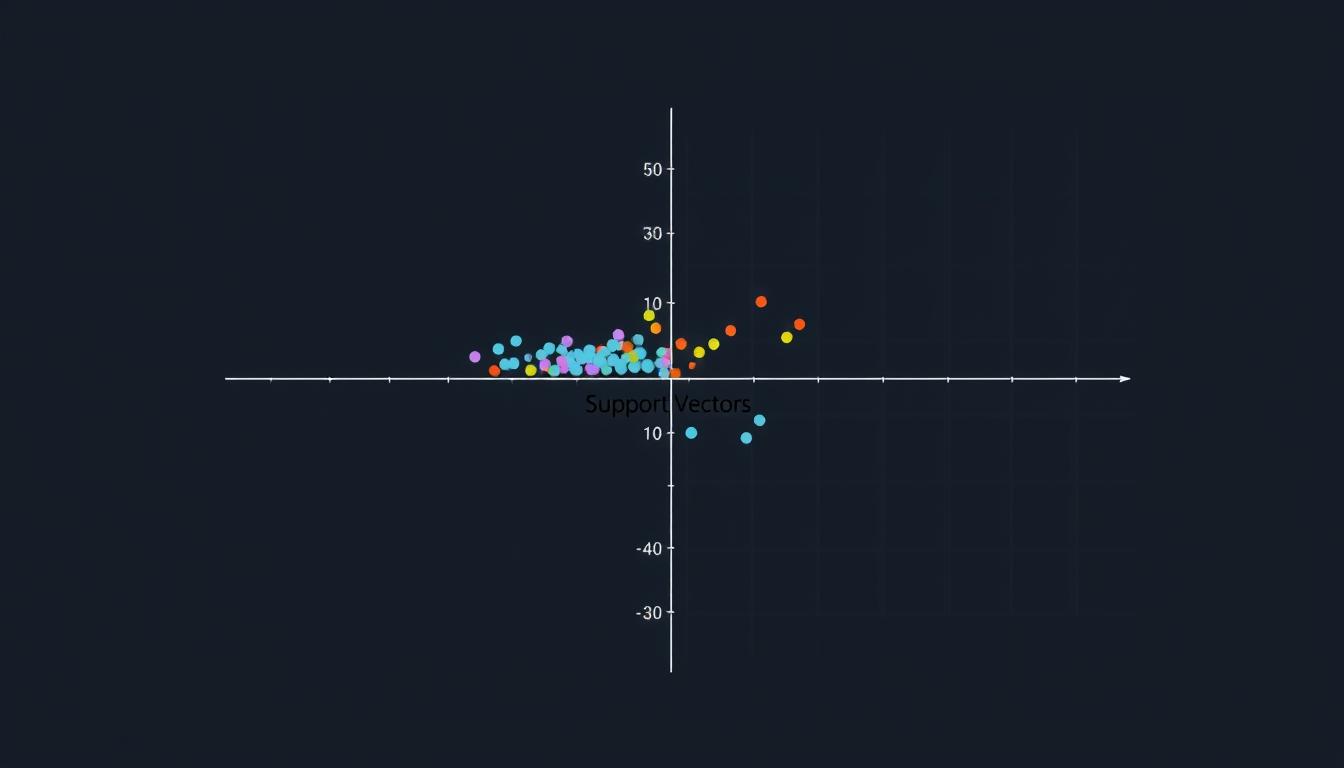

A support vector machine (SVM) is a type of machine learning algorithm. It classifies data by finding the best line or hyperplane: the one that maximizes the margin, or distance, between the classes in an N-dimensional feature space.

SVMs in their modern form were developed in the 1990s by Vladimir N. Vapnik and his colleagues. They are used across machine learning and artificial intelligence to solve classification problems. The algorithm finds the optimal hyperplane, the one that lies as far as possible from the closest data points of the opposite classes.

The number of features in the input data determines the dimension of the hyperplane. With two features (a 2-D space), it is a line; with three features, it is a plane; in an n-dimensional space, it is an (n − 1)-dimensional hyperplane.
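As an illustration, here is a minimal Python sketch of how a linear SVM uses a hyperplane once it has been learned. The weight vector `w` and bias `b` are hypothetical values picked by hand, not the output of training. The point is that the same rule, the sign of w·x + b, works for any number of features.

```python
def decision_value(w, b, x):
    """Signed score w·x + b; the hyperplane is the set of points where this equals 0."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def predict(w, b, x):
    """Return +1 or -1 depending on which side of the hyperplane x falls."""
    return 1 if decision_value(w, b, x) >= 0 else -1


# Two features: the hyperplane x1 + x2 - 3 = 0 is a line.
w2, b2 = [1.0, 1.0], -3.0
print(predict(w2, b2, [3.0, 2.0]))  # 1
print(predict(w2, b2, [0.5, 1.0]))  # -1

# Three features: same rule, but the hyperplane x1 - 2*x2 + 0.5*x3 = 0 is a plane.
w3, b3 = [1.0, -2.0, 0.5], 0.0
print(predict(w3, b3, [2.0, 0.5, 1.0]))  # 1
```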

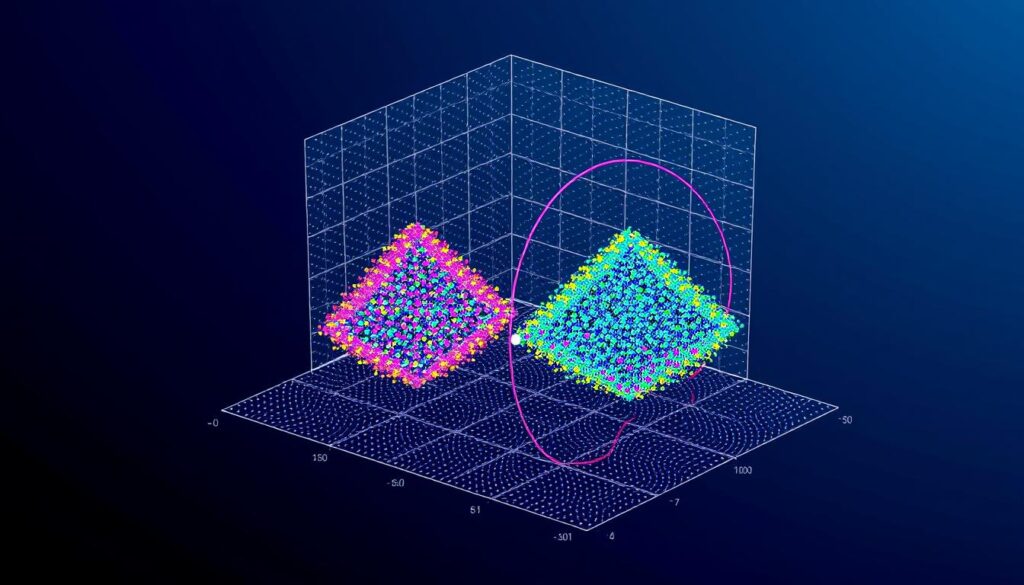

SVMs can handle both simple and complex classification tasks. If the data is not linearly separable, kernel functions are used. These functions implicitly map the data into a higher-dimensional space in which a linear separation may exist.

This process is called the “kernel trick.” It lets SVMs tackle complex predictive analytics and data mining problems, especially in areas such as natural language processing and computer vision.
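The effect of mapping to a higher dimension can be seen on the classic XOR pattern. In this Python sketch, `lift` is a hypothetical, hand-chosen feature map that adds the product x1·x2 as a third coordinate. A kernel SVM would work with such a space only implicitly, and it would learn its boundary from the data rather than use the fixed plane shown here.

```python
# XOR-style toy data: opposite corners share a label, so no straight line
# in the original 2-D space separates the two classes.
points = [(1.0, 1.0), (-1.0, -1.0), (1.0, -1.0), (-1.0, 1.0)]
labels = [1, 1, -1, -1]


def lift(p):
    """Hand-picked feature map (x1, x2) -> (x1, x2, x1 * x2), adding one dimension."""
    x1, x2 = p
    return (x1, x2, x1 * x2)


def classify_lifted(p):
    """Classify with the plane z3 = 0 in the lifted space (weights (0, 0, 1), bias 0)."""
    w, b = (0.0, 0.0, 1.0), 0.0
    score = sum(wi * zi for wi, zi in zip(w, lift(p))) + b
    return 1 if score > 0 else -1


for p, y in zip(points, labels):
    print(p, "->", lift(p), "predicted", classify_lifted(p), "true", y)
```

In the lifted space, a flat plane separates the classes perfectly, although the corresponding boundary in the original 2-D space is nonlinear.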

Overall, support vector machines are a powerful and versatile machine learning algorithm. They have been widely adopted in fields such as healthcare, signal processing, and speech and image recognition because they can classify complex data accurately and efficiently.

What are Support Vector Machines?

Support vector machines (SVMs) are supervised machine learning algorithms. They are used for both classification and regression tasks. Developed in the 1990s by Vladimir N. Vapnik and his colleagues, SVMs find the best hyperplane to separate data points into classes.

This hyperplane is the decision boundary. It maximizes the margin, which is the distance to the closest data points of each class. These closest points are called support vectors.
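The margin can be computed directly. In this Python sketch, the hyperplane x1 + x2 = 2 (w = (0.5, 0.5), b = −1) is hand-picked and scaled so that the closest points, the support vectors, satisfy y_i(w·x_i + b) = 1. For this toy data it is the maximum-margin boundary. The geometric margin is then 1/‖w‖ on each side, so the full margin is 2/‖w‖ wide.

```python
import math


def score(w, b, x):
    """Raw decision value w·x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def geometric_margin(w, b, X, y):
    """Smallest signed distance y_i * (w·x_i + b) / ||w|| from any point to the hyperplane."""
    norm = math.sqrt(sum(wi * wi for wi in w))
    return min(yi * score(w, b, xi) / norm for xi, yi in zip(X, y))


def support_vectors(w, b, X, y, tol=1e-9):
    """Points lying exactly on the margin edges, where y_i * (w·x_i + b) = 1."""
    return [xi for xi, yi in zip(X, y) if abs(yi * score(w, b, xi) - 1.0) < tol]


X = [(2.0, 2.0), (3.0, 3.0), (0.0, 0.0), (-1.0, 0.0)]
y = [1, 1, -1, -1]
w, b = (0.5, 0.5), -1.0  # hypothetical, hand-picked hyperplane x1 + x2 = 2

print(geometric_margin(w, b, X, y))             # about 1.414 (sqrt(2))
print(2 / math.sqrt(sum(wi * wi for wi in w)))  # full margin width, about 2.828
print(support_vectors(w, b, X, y))              # [(2.0, 2.0), (0.0, 0.0)]
```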

Linear SVMs

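Linear SVMs are for data that can be separated by a straight line or, more generally, a flat hyperplane. The decision boundary and the margin around it look like a “street”: the SVM fits the widest street possible between the classes, with the support vectors on its edges. There are two ways to handle this margin.

Hard-margin classification requires every data point to lie outside the street, on the correct side, so it only works when the data are perfectly linearly separable. Soft-margin classification allows some points to fall inside the margin or even be misclassified. It measures these violations with slack variables and penalizes them.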
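Both variants can be written as optimization problems. The notation here is the conventional one rather than taken from this article: training points x_i, labels y_i ∈ {−1, +1}, weight vector w, bias b, slack variables ξ_i, and a penalty hyperparameter C > 0. The hard-margin SVM solves the first problem. The soft-margin SVM relaxes each constraint by ξ_i and charges C per unit of violation:

$$
\text{hard margin:}\quad \min_{w,\,b}\ \tfrac{1}{2}\lVert w\rVert^2
\quad\text{s.t.}\quad y_i\,(w^\top x_i + b) \ge 1,\quad i = 1,\dots,n
$$

$$
\text{soft margin:}\quad \min_{w,\,b,\,\xi}\ \tfrac{1}{2}\lVert w\rVert^2 + C\sum_{i=1}^{n}\xi_i
\quad\text{s.t.}\quad y_i\,(w^\top x_i + b) \ge 1 - \xi_i,\quad \xi_i \ge 0,\quad i = 1,\dots,n
$$

Minimizing ‖w‖² maximizes the margin width 2/‖w‖. A point with 0 < ξ_i < 1 sits inside the margin but on the correct side, while ξ_i > 1 means it is misclassified. A larger C makes violations more expensive and pushes the solution toward hard-margin behavior.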

Nonlinear SVMs

Different kernel functions can be used for nonlinear SVMs, including the polynomial kernel, the radial basis function (RBF) kernel, and the sigmoid kernel. These functions implicitly map the original data into a higher-dimensional space, where a linear boundary can separate classes that are not linearly separable in the original space.

| Kernel Function | Description |
|---|---|
| Polynomial Kernel | Allows for nonlinear decision boundaries by mapping data into a higher-dimensional space. |
| Radial Basis Function (RBF) Kernel | Measures the similarity between data points based on their Euclidean distance, enabling nonlinear classification. |
| Sigmoid Kernel | Resembles a neural network activation function, allowing for nonlinear boundaries. |

How SVMs Work

Support vector machines (SVMs) are a key tool in machine learning, used for both classification and regression. Much of their strength comes from the kernel trick, which lets them handle nonlinear data.

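The kernel trick works with a higher-dimensional feature space implicitly. A kernel function, such as the polynomial, RBF, or sigmoid kernel, computes the inner product of two points as if they had been mapped into that space, without ever computing the mapping itself. Because the dual form of the SVM training problem depends on the data only through these inner products, the SVM can operate in the new space without transforming the data explicitly.

The trick does not reduce the problem’s dimensionality. Instead, it avoids the cost of working in the high-dimensional space directly, which keeps computation tractable even when that space is very large or, as with the RBF kernel, infinite-dimensional. The SVM then finds the best separating hyperplane in the feature space, which corresponds to a nonlinear decision boundary in the original space. Overfitting is controlled not by the kernel itself but by margin maximization and the regularization parameter.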
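The identity behind the trick can be checked numerically. This Python sketch uses the polynomial kernel (x·y + c)^d with c = 0 and d = 2, that is, K(x, y) = (x·y)². For 2-D inputs, its explicit feature map is φ(x) = (x1², √2·x1·x2, x2²). The kernel computes in the 2-D input space the same inner product that φ computes in 3-D. For higher degrees, and especially for the RBF kernel, the explicit space grows much larger while the kernel stays cheap.

```python
import math


def poly2_kernel(x, y):
    """Degree-2 homogeneous polynomial kernel (x·y)^2, computed in the 2-D input space."""
    return (x[0] * y[0] + x[1] * y[1]) ** 2


def phi(x):
    """Explicit 3-D feature map for the same kernel: (x1^2, sqrt(2)*x1*x2, x2^2)."""
    return (x[0] ** 2, math.sqrt(2) * x[0] * x[1], x[1] ** 2)


def explicit_inner_product(x, y):
    """The expensive route: map both points, then take the dot product in 3-D."""
    return sum(a * b for a, b in zip(phi(x), phi(y)))


x, z = (1.0, 2.0), (3.0, -1.0)
print(poly2_kernel(x, z))            # 1.0
print(explicit_inner_product(x, z))  # 1.0, up to floating-point rounding
```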

| Kernel Function | Formula | Characteristics |
|---|---|---|
| Polynomial Kernel | K(x, y) = (x·y + c)^d | Captures higher-order interactions between features |
| RBF Kernel | K(x, y) = exp(−γ‖x − y‖²) | Measures the similarity between two points in the feature space |
| Sigmoid Kernel | K(x, y) = tanh(κ x·y + c) | Behaves similarly to a neural network activation function |
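The three kernels in the table translate directly into code. In this Python sketch, the default parameter values (c, d, γ, κ) are arbitrary placeholders, not recommended settings. `gram_matrix` is a hypothetical helper that builds the matrix of pairwise kernel values K(x_i, x_j), which is what a kernel SVM solver works with.

```python
import math


def dot(x, y):
    """Plain inner product x·y."""
    return sum(a * b for a, b in zip(x, y))


def polynomial_kernel(x, y, c=1.0, d=3):
    """K(x, y) = (x·y + c)^d."""
    return (dot(x, y) + c) ** d


def rbf_kernel(x, y, gamma=0.5):
    """K(x, y) = exp(-gamma * ||x - y||^2); equals 1 when x == y and decays with distance."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * sq_dist)


def sigmoid_kernel(x, y, kappa=0.1, c=0.0):
    """K(x, y) = tanh(kappa * x·y + c)."""
    return math.tanh(kappa * dot(x, y) + c)


def gram_matrix(X, kernel):
    """Matrix of kernel values K(x_i, x_j) for all pairs of points in X."""
    return [[kernel(a, b) for b in X] for a in X]


X = [(0.0, 0.0), (1.0, 2.0), (3.0, 4.0)]
for row in gram_matrix(X, rbf_kernel):
    print([round(v, 4) for v in row])
```

One caveat: the sigmoid kernel is not positive semidefinite for every choice of κ and c. Unlike the RBF kernel, it therefore does not always correspond to a valid feature space.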

With the kernel trick, SVMs can learn nonlinear decision boundaries and work in very high-dimensional feature spaces at manageable cost. Combined with margin maximization, which limits overfitting, this makes SVMs a valuable asset in machine learning.

Machine Learning with SVMs

Support vector machines (SVMs) are a key part of machine learning. They work well with complex, high-dimensional data, which makes them a top pick for many supervised learning tasks.

To create an SVM classifier, we first split our data into training and test sets. Then we import an SVM module and train it on the training set. We check how well it does by comparing its predictions on the test set with the true labels, using evaluation metrics such as accuracy, precision, recall, and F1-score.
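The evaluation step does not depend on the SVM itself. Here is a small Python sketch that computes accuracy, precision, recall, and F1-score from true and predicted labels. The label vectors are made-up illustrations, and +1 is treated as the positive class.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Return (accuracy, precision, recall, f1), treating `positive` as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    # Guard against division by zero when nothing is predicted or present as positive.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, precision, recall, f1


# Hypothetical test-set labels and SVM predictions.
y_test = [1, 1, 1, -1, -1, -1, 1, -1]
y_pred = [1, 1, -1, 1, 1, -1, 1, -1]
print([round(m, 3) for m in classification_metrics(y_test, y_pred)])  # [0.625, 0.6, 0.75, 0.667]
```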

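We can tune the model by adjusting hyperparameters such as the kernel function and the regularization strength (the penalty on margin violations). On some tasks, SVMs outperform models such as naive Bayes and decision trees. However, kernel SVMs can be expensive to train on large datasets, because training time grows faster than linearly with the number of samples.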
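To see what the regularization hyperparameter trades off, this Python sketch scores two hand-picked candidate hyperplanes with the standard soft-margin objective ½‖w‖² + C·Σξ_i. The symbol C, the toy data, and both candidates are illustrative assumptions. Comparing two fixed candidates is not the same as running an SVM solver. The data contain one negative point, (1, 1), that sits close to the positives.

```python
def hinge_slacks(w, b, X, y):
    """Slack of each point: max(0, 1 - y_i * (w·x_i + b))."""
    return [max(0.0, 1.0 - yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b))
            for xi, yi in zip(X, y)]


def soft_margin_objective(w, b, X, y, C):
    """0.5 * ||w||^2 + C * (sum of slacks)."""
    return 0.5 * sum(wi * wi for wi in w) + C * sum(hinge_slacks(w, b, X, y))


X = [(2.0, 2.0), (3.0, 3.0), (0.0, 0.0), (1.0, 1.0)]
y = [1, 1, -1, -1]

candidates = {
    # Wide margin (x1 + x2 = 2): ||w||^2 = 0.5, but (1, 1) lies on the boundary (slack 1).
    "wide": ((0.5, 0.5), -1.0),
    # Narrow margin (x1 + x2 = 3): ||w||^2 = 2, and every point has zero slack.
    "narrow": ((1.0, 1.0), -3.0),
}

for C in (0.1, 10.0):
    scores = {name: soft_margin_objective(w, b, X, y, C) for name, (w, b) in candidates.items()}
    best = min(scores, key=scores.get)
    print(f"C={C}: objectives {scores} -> prefers {best!r}")
```

A small C tolerates the violation in exchange for a wider margin, while a large C pays for a narrower margin to avoid it. In practice, C and kernel parameters such as γ are usually chosen by cross-validation.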

Support vector machines are a powerful tool in machine learning, delivering strong results on many supervised learning problems.

Applications of SVMs

Support vector machines (SVMs) are used in many fields to solve complex problems, including tasks in natural language processing, computer vision, and bioinformatics.

In text classification, SVMs are a strong choice for sentiment analysis, spam detection, and topic classification. They cope well with the high-dimensional, sparse feature vectors that text produces, and they can capture complex patterns.

In image classification, SVMs are used for object detection, image retrieval, and facial recognition; Facebook has reportedly used them for facial recognition. In medical imaging, they have been reported to distinguish different types of skin lesions with up to 95% accuracy. Beyond images, they also help detect credit card fraud by picking up subtle patterns in transaction data.

SVMs are also used in bioinformatics to classify proteins, analyze gene expression, and diagnose diseases, where they help uncover hidden trends in biological data. They are useful in geographic information systems as well, for example to analyze soil and predict seismic liquefaction.

| Application Domain | Example Uses |
|---|---|
| Text Classification | Sentiment analysis, spam detection, and topic classification |
| Image Classification | Facial recognition (e.g., at Facebook) and skin lesion classification with up to 95% reported accuracy |
| Credit Card Fraud Detection | Capturing subtle patterns in transaction data |
| Bioinformatics | Protein classification, gene expression analysis, and disease diagnosis |
| Geographic Information Systems | Analyzing geophysical structures and predicting seismic liquefaction potential |

SVMs are very versatile and can tackle many different tasks, which makes them a valuable tool in machine learning and data analysis.

Conclusion

Support vector machines (SVMs) are a key tool in machine learning. They can solve both classification and regression problems. Their strength lies in finding the maximum-margin hyperplane that separates different classes of data points.

This makes them useful in many fields like natural language processing and computer vision. They are also used in bioinformatics and geographic information systems.

The kernel trick lets SVMs handle complex, nonlinear data by implicitly mapping it into a space where the classes become easier to separate. This trick, together with margin maximization, which helps SVMs resist overfitting, makes them very valuable.

As AI research grows, it’s important to make sure our findings are reliable. A recent article in Nature Computational Science by Ananya Rastogi highlights this need.

It’s vital for AI researchers to share their work clearly. Governments should also see the value in AI and support its growth. This way, AI can help solve big problems and drive new ideas.